MULE: Multimodal Universal Language Embedding (AAAI 2020)

Donghyun Kim, Kuniaki Saito, Kate Saenko, Stan Sclaroff, Bryan A. Plummer

Boston University

[Paper] [Code]

Abstract

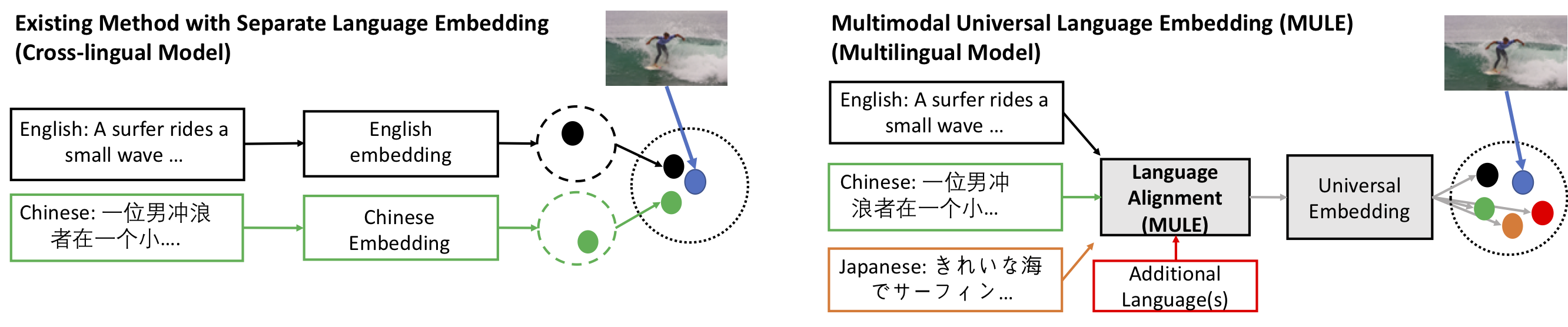

Existing vision-language methods typically support at most two languages at a time. In this paper, we present a modular approach which can easily be incorporated into existing vision-language methods in order to support many languages. We accomplish this by learning a single shared Multimodal Universal Language Embedding (MULE) which has been visually-semantically aligned across all languages. Then we learn to relate MULE to visual data as if it were a single language. Unlike prior work, which typically learned separate branches for each language, our method is not architecture specific, so it can easily be adapted to many vision-language methods and tasks. Since MULE learns a single language branch in the multimodal model, we can also scale to support many languages, and languages with fewer annotations can take advantage of the good representation learned from other (more abundant) language data. We demonstrate the effectiveness of our embeddings on the bidirectional image-sentence retrieval task, supporting up to four languages in a single model. In addition, we show that Machine Translation can be used for data augmentation in multilingual learning, which, combined with MULE, significantly improves performance compared to prior work, with the most significant gains seen on languages with relatively few annotations.

Overview

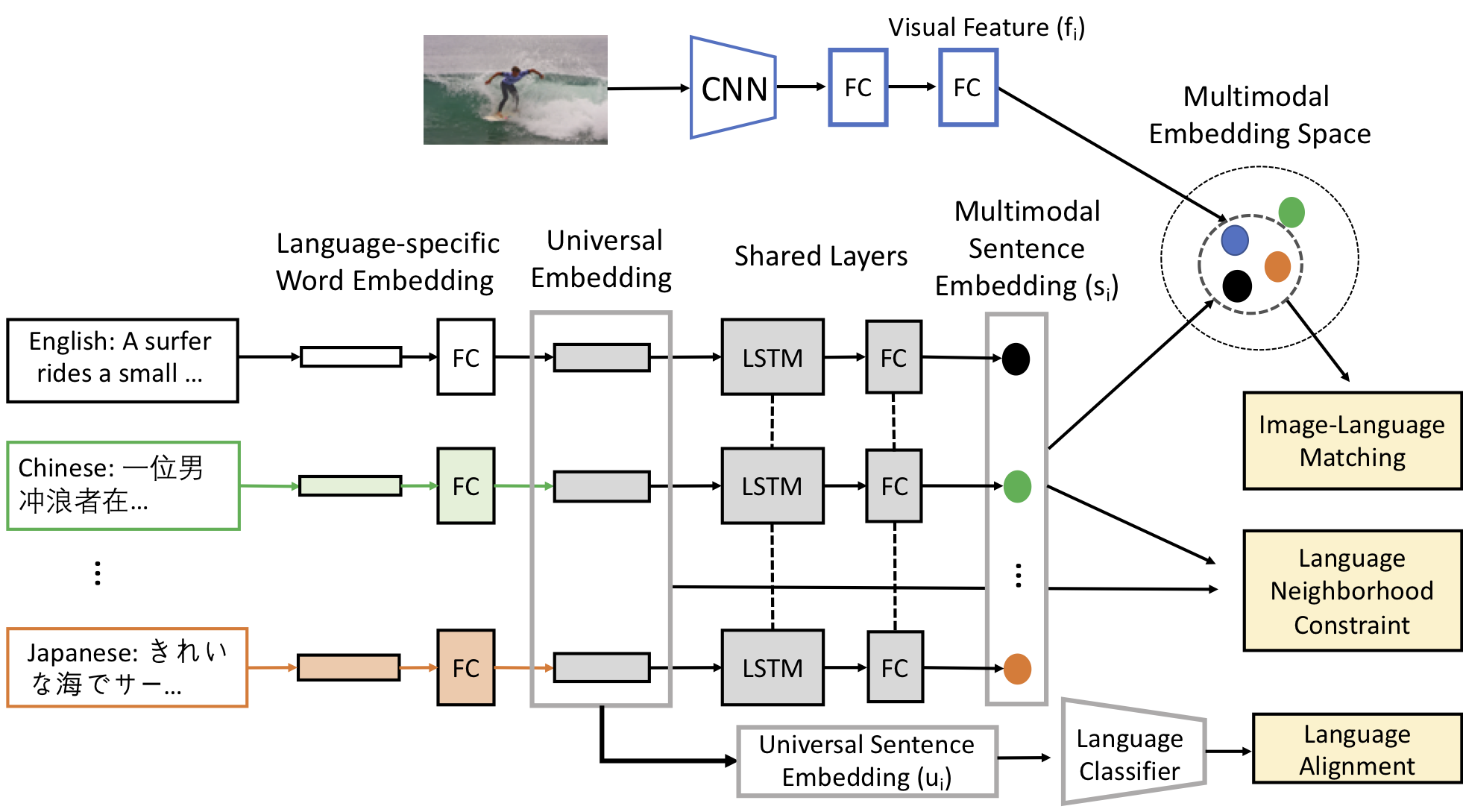

We propose MULE, a language embedding that is visually-semantically aligned across multiple languages (bottom). This enables us to share a single multimodal model, significantly decreasing the number of model parameters, while also performing better than prior work that used separate language branches or multilingual embeddings aligned using only language data. Training MULE consists of three components: neighborhood constraints, which semantically align sentences across languages; an adversarial language classifier, which encourages features from different languages to have similar distributions; and a multimodal model, which helps MULE learn the visual-semantic meaning of words across languages by performing image-sentence matching.
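The three training components described above can be illustrated with a minimal NumPy sketch. This is not the authors' released implementation: the function names, the hinge-style triplet loss on cosine similarity, the in-batch negative sampling (rolling the batch by one), the linear language classifier, and the weights `w_nc` and `w_adv` are all assumptions chosen for illustration. In particular, subtracting the classifier loss from the embedding objective is only one common way to express an adversarial term.

```python
import numpy as np

def l2_normalize(x, eps=1e-8):
    """Scale each row to (approximately) unit length."""
    return x / (np.linalg.norm(x, axis=1, keepdims=True) + eps)

def triplet_loss(anchor, positive, negative, margin=0.05):
    """Hinge loss on cosine similarity: the positive should beat the negative by `margin`."""
    pos = np.sum(anchor * positive, axis=1)
    neg = np.sum(anchor * negative, axis=1)
    return float(np.mean(np.maximum(0.0, margin - pos + neg)))

def language_classifier_loss(features, lang_ids, W):
    """Cross-entropy of a (hypothetical) linear language classifier."""
    logits = features @ W
    logits = logits - logits.max(axis=1, keepdims=True)  # numerical stability
    log_probs = logits - np.log(np.exp(logits).sum(axis=1, keepdims=True))
    return float(-np.mean(log_probs[np.arange(len(lang_ids)), lang_ids]))

def mule_objective(sent_a, sent_b, images, W_lang, w_nc=1.0, w_adv=1.0, margin=0.05):
    """Combine the three loss terms for one batch.

    sent_a, sent_b: (n, d) universal embeddings of sentences in two languages,
                    where row i of each describes image i.
    images:         (n, d) image embeddings in the same space.
    W_lang:         (d, 2) weights of the assumed linear language classifier.
    """
    a, b, v = l2_normalize(sent_a), l2_normalize(sent_b), l2_normalize(images)
    shift = lambda x: np.roll(x, 1, axis=0)  # assumed in-batch negatives

    # 1) Neighborhood constraints: paired sentences across languages stay close.
    neighborhood = (triplet_loss(a, b, shift(b), margin)
                    + triplet_loss(b, a, shift(a), margin))

    # 2) Image-sentence matching in both directions, for both languages.
    matching = sum(
        triplet_loss(v, s, shift(s), margin) + triplet_loss(s, v, shift(v), margin)
        for s in (a, b)
    )

    # 3) Language classifier: the classifier minimizes this loss, while the
    #    embedding is trained to make the languages indistinguishable.
    feats = np.concatenate([a, b], axis=0)
    lang_ids = np.array([0] * len(a) + [1] * len(b))
    classifier = language_classifier_loss(feats, lang_ids, W_lang)

    # Assumed combination for the embedding's objective (adversarial sign flip).
    total = matching + w_nc * neighborhood - w_adv * classifier
    return {
        "neighborhood": neighborhood,
        "matching": matching,
        "language_classifier": classifier,
        "embedding_total": total,
    }

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    n, d = 8, 16
    losses = mule_objective(
        rng.normal(size=(n, d)), rng.normal(size=(n, d)),
        rng.normal(size=(n, d)), rng.normal(size=(d, 2)),
    )
    for name, value in losses.items():
        print(f"{name}: {value:.4f}")
```

Because both languages pass through the same universal embedding before reaching the image-sentence matching term, adding a language in this sketch only adds entries to the neighborhood and classifier terms. No new multimodal branch is needed, which is the parameter-sharing benefit described in the overview.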

Reference

@inproceedings{kimMULEAAAI2020,
  title={{MULE: Multimodal Universal Language Embedding}},
  author={Donghyun Kim and Kuniaki Saito and Kate Saenko and Stan Sclaroff and Bryan A. Plummer},
  booktitle={AAAI Conference on Artificial Intelligence},
  year={2020}
}