RISE: Randomized Input Sampling for Explanation of Black-box Models

Vitali Petsiuk, Abir Das, Kate Saenko

Abstract

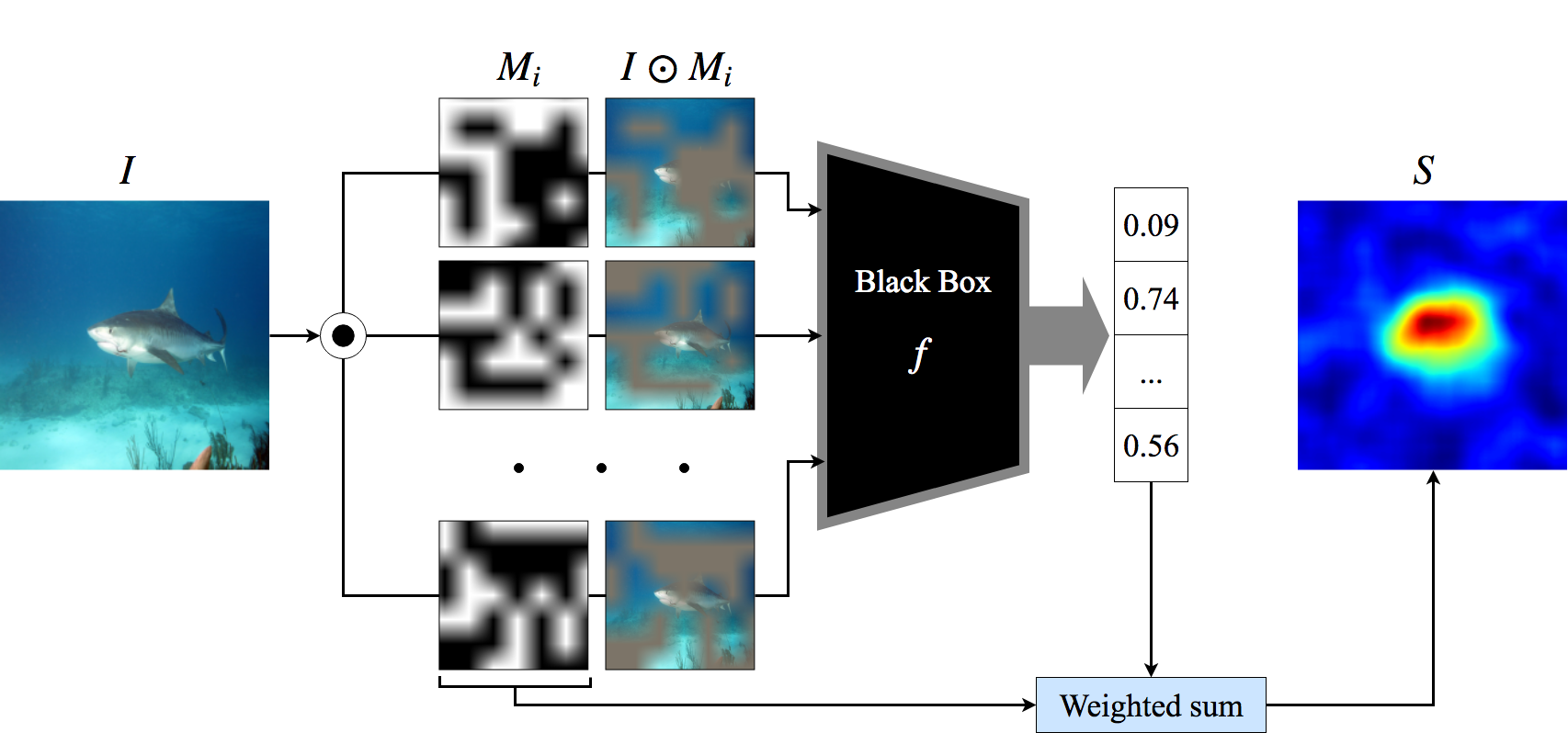

Deep neural networks are increasingly being used to automate data analysis and decision making, yet their decision process remains largely unclear and difficult to explain to end users. In this paper, we address the problem of Explainable AI for deep neural networks that take images as input and output a class probability. We propose an approach called RISE that generates an importance map indicating how salient each pixel is for the model's prediction. In contrast to white-box approaches that estimate pixel importance using gradients or other internal network state, RISE works on black-box models. It estimates importance empirically by probing the model with randomly masked versions of the input image and obtaining the corresponding outputs. We compare our approach to state-of-the-art importance extraction methods using both an automatic deletion/insertion metric and a pointing metric based on human-annotated object segments. Extensive experiments on several benchmark datasets show that our approach matches or exceeds the performance of other methods, including white-box approaches.
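The probing procedure described above can be illustrated with a minimal NumPy sketch. It is not the authors' implementation. The abstract does not say how masks are generated or how outputs are aggregated, so the sketch assumes coarse random binary grids upsampled by nearest-neighbour repetition, and it normalizes by the number of masks that left each pixel visible. The names `random_masks`, `importance_map`, `cells`, and `keep_prob` are hypothetical, and `model` stands for any black-box callable that maps an image to a scalar class score.

```python
import numpy as np


def random_masks(n_masks, height, width, cells=4, keep_prob=0.5, rng=None):
    """Return (n_masks, height, width) binary masks; 1 keeps a pixel, 0 hides it.

    Assumption: masks are coarse random grids enlarged to image size.
    """
    rng = np.random.default_rng() if rng is None else rng
    grid = (rng.random((n_masks, cells, cells)) < keep_prob).astype(np.float64)
    # Nearest-neighbour upsampling: repeat each cell enough times to cover
    # the image (ceiling division), then crop to the exact size.
    rep_h = -(-height // cells)
    rep_w = -(-width // cells)
    masks = np.repeat(np.repeat(grid, rep_h, axis=1), rep_w, axis=2)
    return masks[:, :height, :width]


def importance_map(model, image, masks):
    """Estimate per-pixel importance from the model's scores on masked inputs.

    model: black-box callable, image -> scalar score for the class of interest.
    image: array of shape (H, W) or (H, W, C).
    masks: array of shape (N, H, W).
    """
    scores = np.empty(len(masks))
    for i, mask in enumerate(masks):
        # Broadcast the mask over the channel axis for colour images.
        m = mask[..., None] if image.ndim == 3 else mask
        scores[i] = model(image * m)
    # Sum of masks weighted by the score each masked image received.
    weighted = np.tensordot(scores, masks, axes=1)
    # Assumption: divide by how often each pixel was visible, so each value
    # is the mean score over the probes in which that pixel was kept.
    visible_count = np.maximum(masks.sum(axis=0), 1.0)
    return weighted / visible_count
```

To try it, pass any function that returns a class score, for example a wrapper around a classifier's softmax output for the target class. Only the model's outputs are used, so no gradients or internal activations are needed.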

Slides

Presentation (Machine Intelligence Conference, 2018)

Bibtex

@inproceedings{Petsiuk2018rise,
  author    = {Vitali Petsiuk and Abir Das and Kate Saenko},
  title     = {RISE: Randomized Input Sampling for Explanation of Black-box Models},
  booktitle = {British Machine Vision Conference (BMVC)},
  year      = {2018},
  url       = {http://bmvc2018.org/contents/papers/1064.pdf},
}