Problem Definition

The goal of this homework is to identify at least four different hand gestures in a video. The algorithm should recognize one-hand gestures, two-hand gestures, and whether a hand is waving. The result matters because the algorithm can be applied in situations where only video input is available. Because our algorithm depends on skin color detection, we assume that the background contains few or no skin-colored regions. We also assume that the scene is well lit with natural light, since the colors captured by the camera shift when the object is illuminated by light of different wavelengths.

Method and Implementation

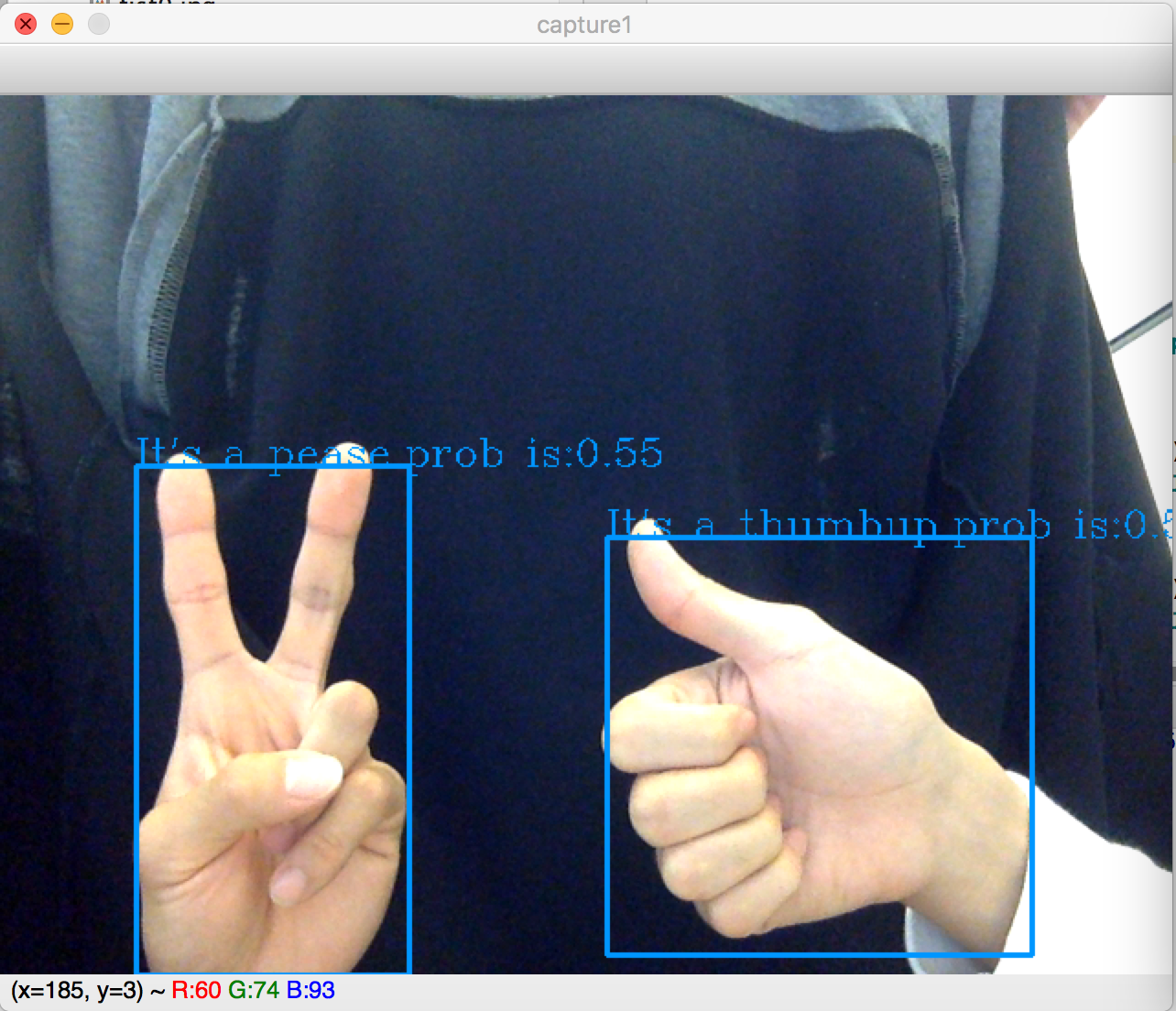

We used skin color detection and template matching for this assignment. We first opened the camera and analyzed each frame of the video as a single still image. Each frame was passed through skin color detection, then eroded and dilated, which yields a uint8 binary image with skin in white and the background in black. We used this binary image to locate the hand: we found the contours, picked the largest one, computed its bounding rectangle, cropped the bounded area, and drew the rectangle on the original image. With a cropped image of just the hand, we used an image pyramid to resize the templates and converted all template images to binary with the same skin color detection algorithm. Finally, we compared the pixel values of each template with the cropped image to measure how much of their areas match. The gesture with the best match (the highest NCC output) is printed to the terminal. For two hands, we assumed that the second hand's contour is similar in size to the largest contour. Therefore, if another contour is close in size to the largest one, we also draw its bounding rectangle and crop it for recognition.
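The two-hand rule described above can be sketched as follows. This is a minimal illustration, not the code used for the assignment: the function name `select_hand_regions` and the 0.6 area ratio are hypothetical, since the report does not state how close in size a second contour must be. The input is assumed to be a list of contour areas already measured on the binary skin mask.

```python
def select_hand_regions(areas, ratio=0.6):
    """Return the indices of the contours treated as hands.

    areas: contour areas in pixels, one entry per contour found in the mask.
    ratio: hypothetical threshold; a contour counts as a second hand when
           its area is at least `ratio` times the largest contour's area.
    """
    if not areas:
        return []

    # The largest contour is always taken to be a hand.
    biggest = max(range(len(areas)), key=lambda i: areas[i])
    hands = [biggest]

    # Among the remaining contours, consider only the largest candidate.
    others = [i for i in range(len(areas)) if i != biggest]
    if others:
        second = max(others, key=lambda i: areas[i])
        if areas[second] >= ratio * areas[biggest]:
            hands.append(second)

    return hands
```

For example, `select_hand_regions([120, 5000, 4300, 30])` keeps contours 1 and 2, while `select_hand_regions([5000, 100])` keeps only contour 0, so small skin-colored blobs in the background are ignored.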

Experiments

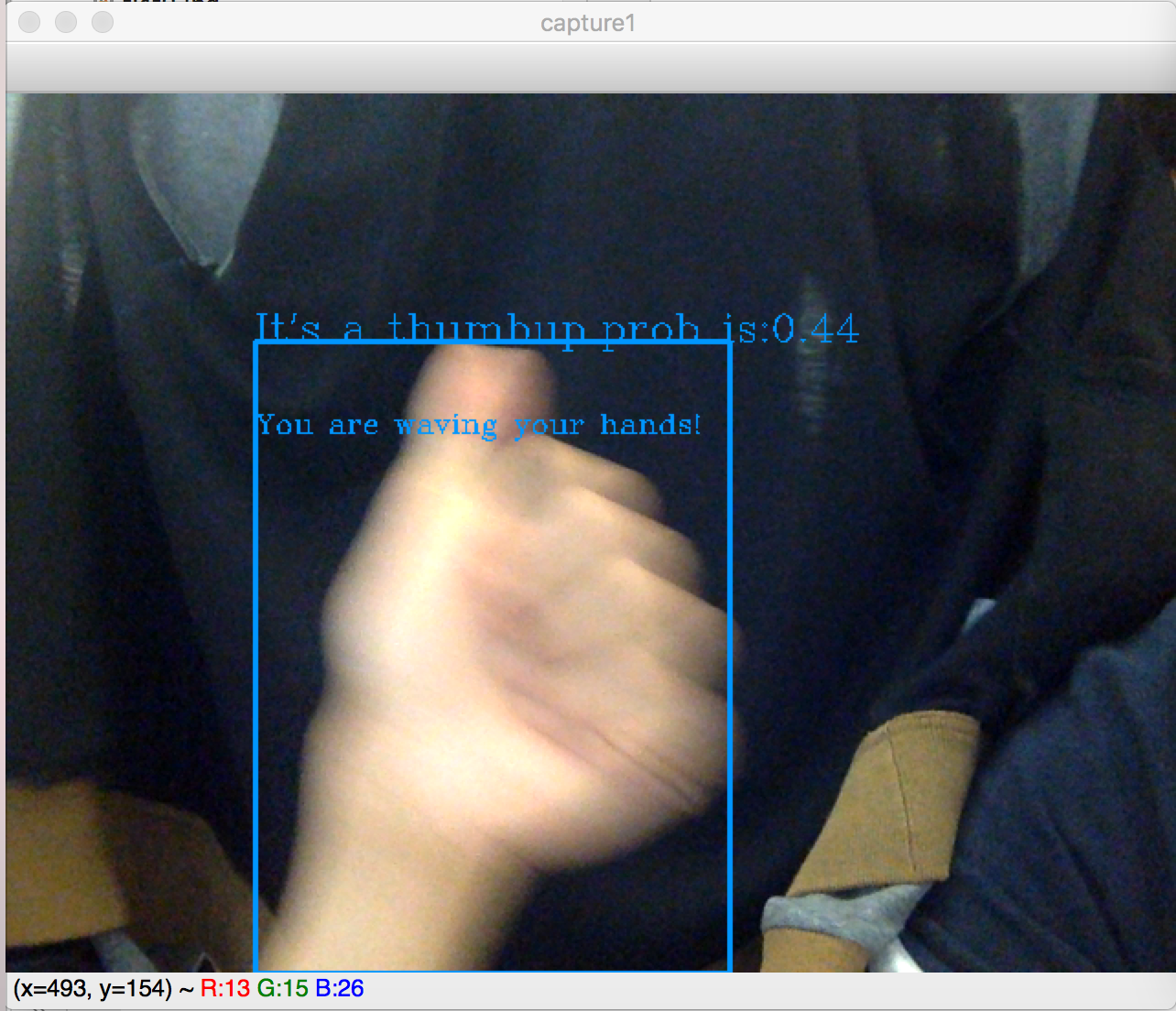

In this system, we used the front camera of our laptop to record the image data of each frame and performed template matching (static and dynamic operation) on each frame.

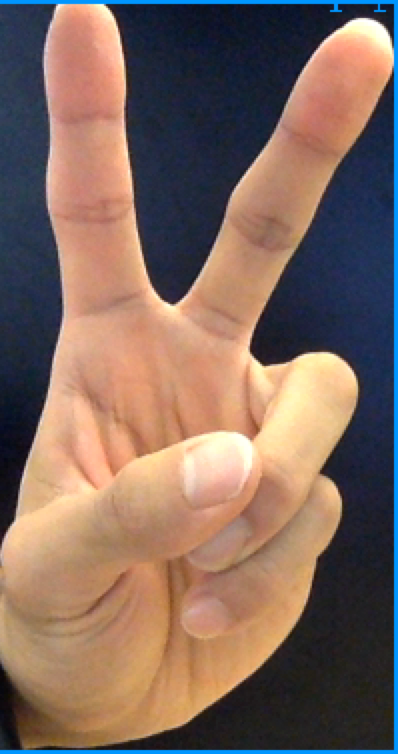

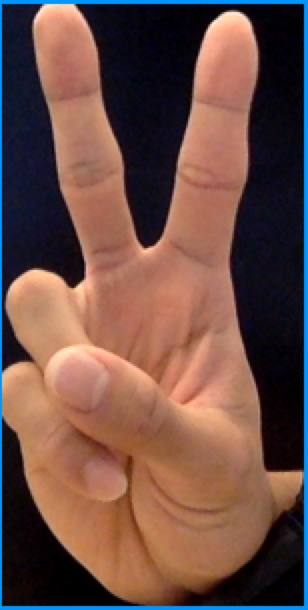

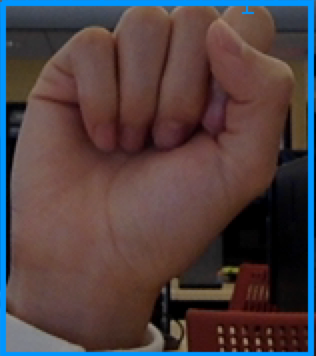

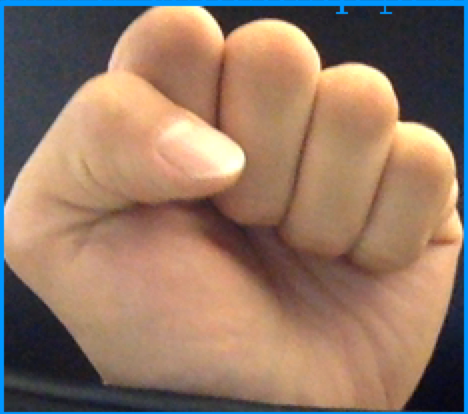

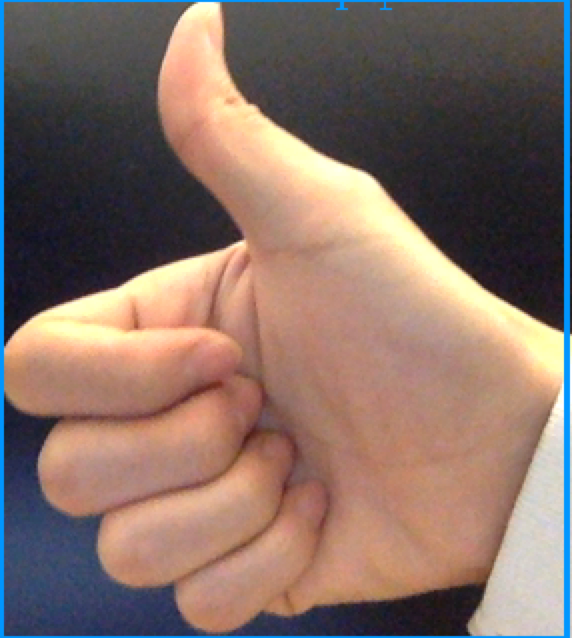

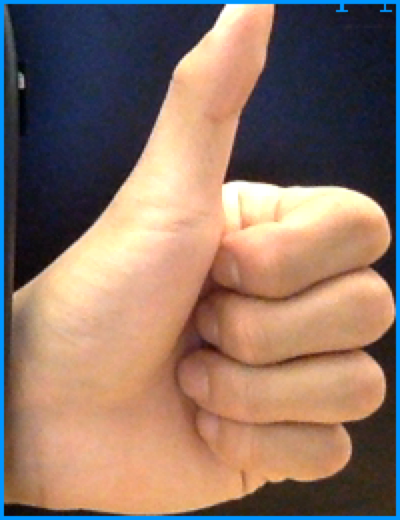

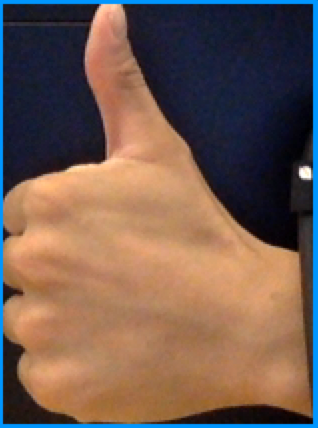

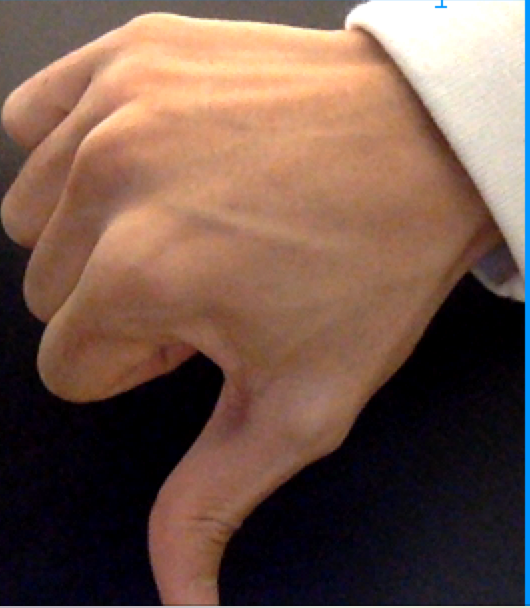

We used three different templates for each gesture and took the largest NCC output to identify the gesture, which improves the robustness of the model. The templates are shown below.

| Peace |  |  |  |

| Fist |  |  |  |

| Thumbup |  |  |  |

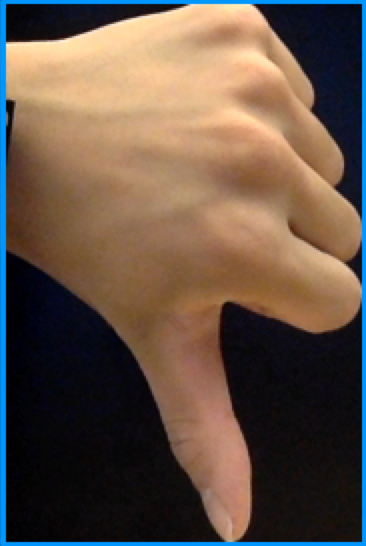

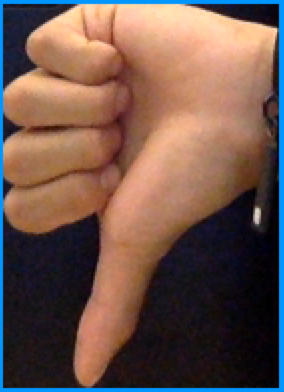

| Thumbdown |  |  |  |
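To make the "largest NCC output over several templates" rule concrete, here is a small sketch in plain Python. It is written under assumptions: the report does not say which NCC variant was used, so this version uses the zero-mean form, and it assumes each template has already been resized to the size of the cropped hand image. The names `ncc` and `classify` are illustrative only.

```python
import math


def ncc(a, b):
    """Zero-mean normalized cross-correlation of two same-sized 2-D images.

    Images are lists of rows of pixel values (e.g. 0 or 255 for binary masks).
    Returns a value in [-1, 1], or 0.0 if either image is constant.
    """
    xs = [float(v) for row in a for v in row]
    ys = [float(v) for row in b for v in row]
    if len(xs) != len(ys):
        raise ValueError("images must have the same size")
    mx = sum(xs) / len(xs)
    my = sum(ys) / len(ys)
    num = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    den = math.sqrt(
        sum((x - mx) ** 2 for x in xs) * sum((y - my) ** 2 for y in ys)
    )
    return num / den if den > 0 else 0.0


def classify(crop, templates):
    """Pick the gesture whose best template has the highest NCC with `crop`.

    templates: dict mapping a gesture name to a list of template images.
    Returns (label, score).
    """
    best_label, best_score = None, float("-inf")
    for label, images in templates.items():
        # Each gesture is scored by its best-matching template.
        score = max(ncc(crop, t) for t in images)
        if score > best_score:
            best_label, best_score = label, score
    return best_label, best_score
```

Scoring each gesture by its best template means that a gesture only needs one template that resembles the current hand pose, which is why adding templates at different angles or scales can help.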

We checked by visual inspection whether the system ran smoothly, and used a confusion matrix to evaluate its performance.

Results

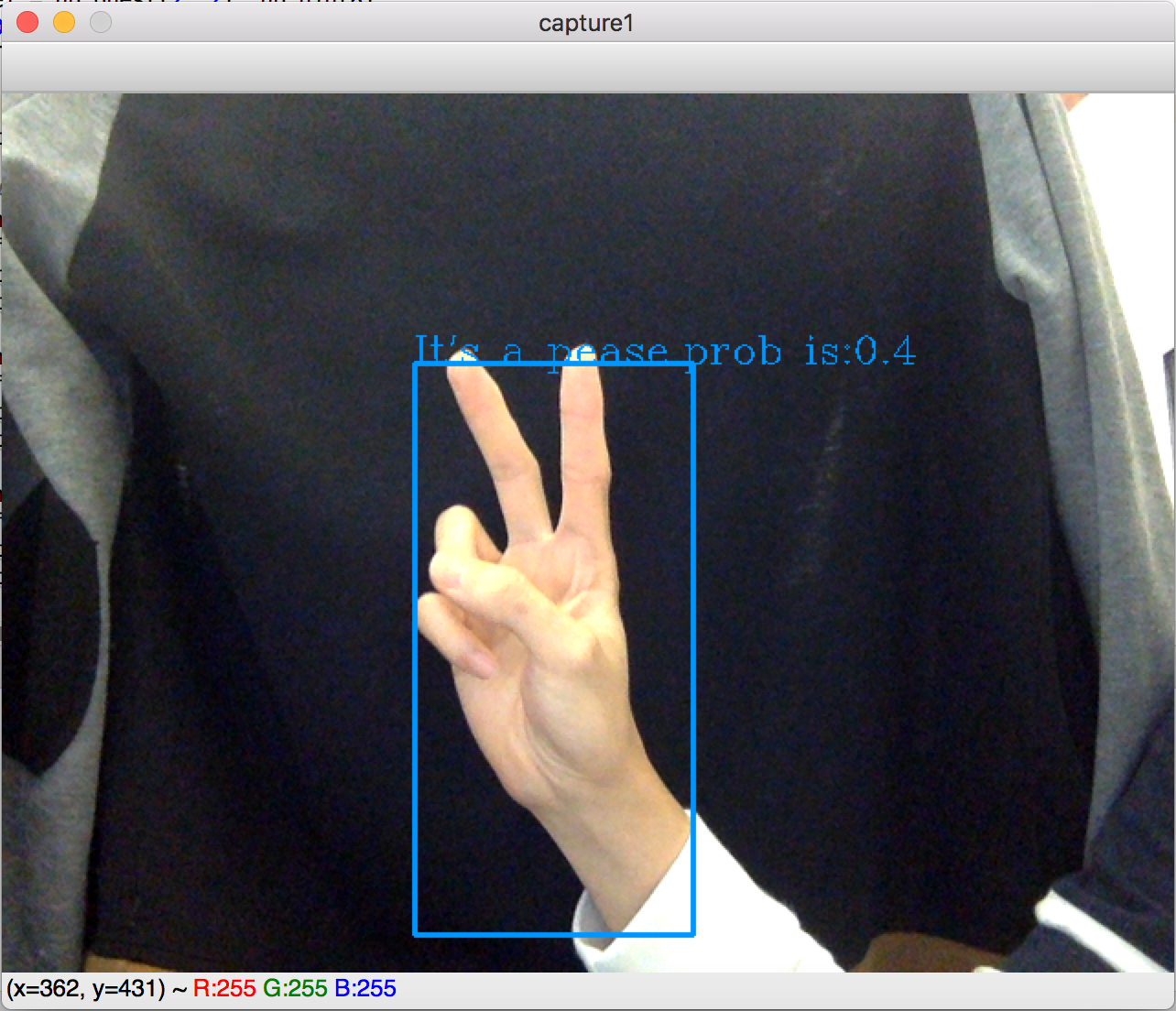

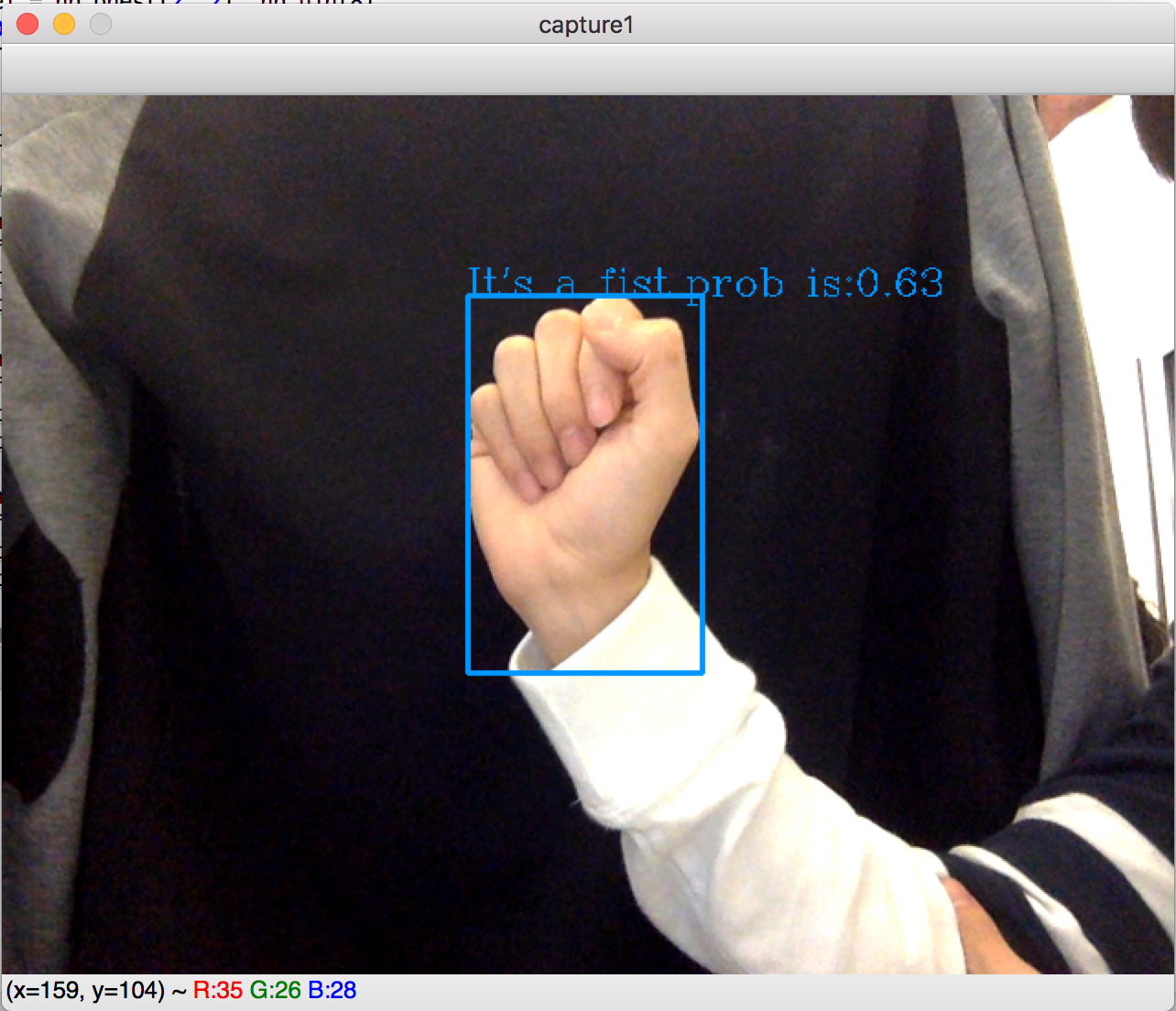

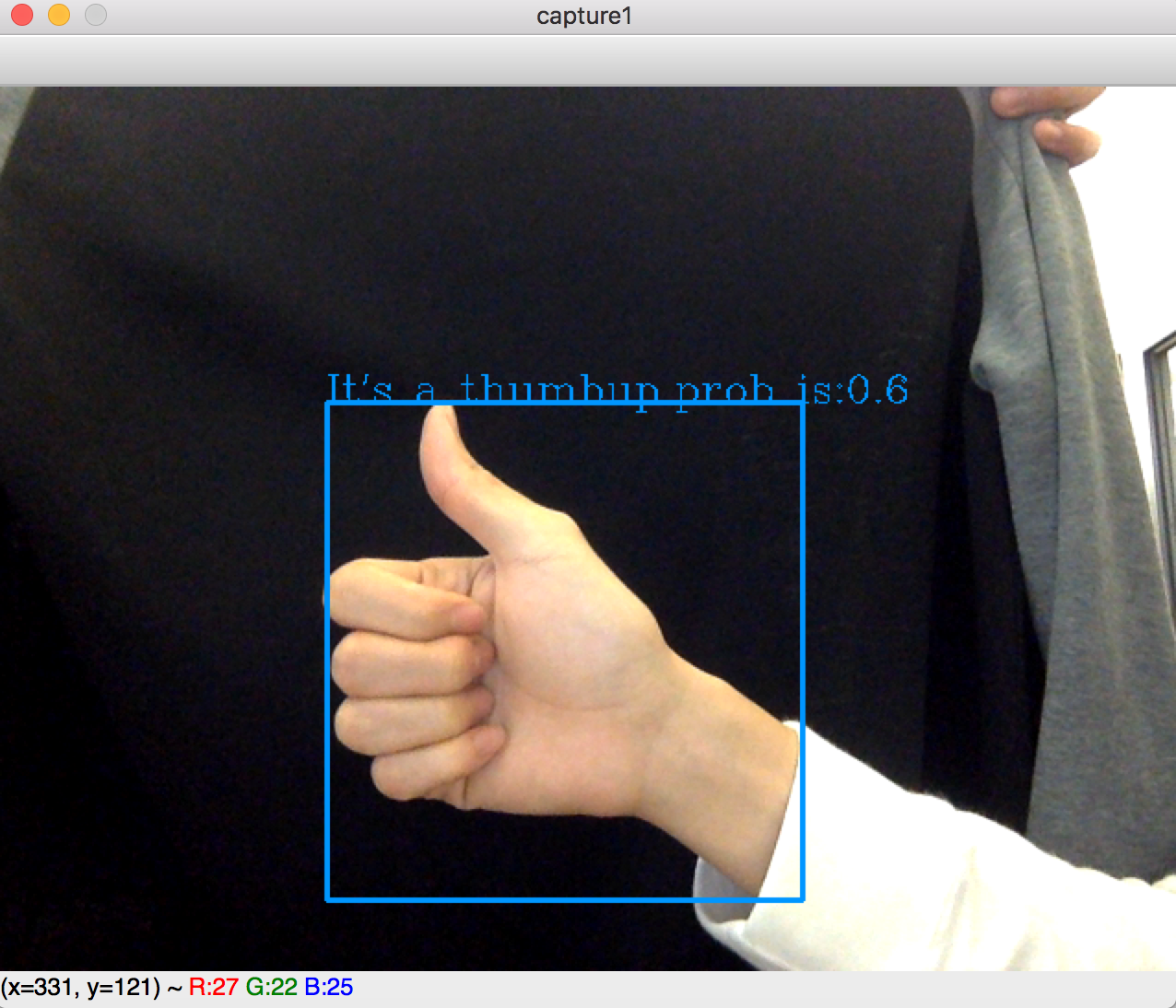

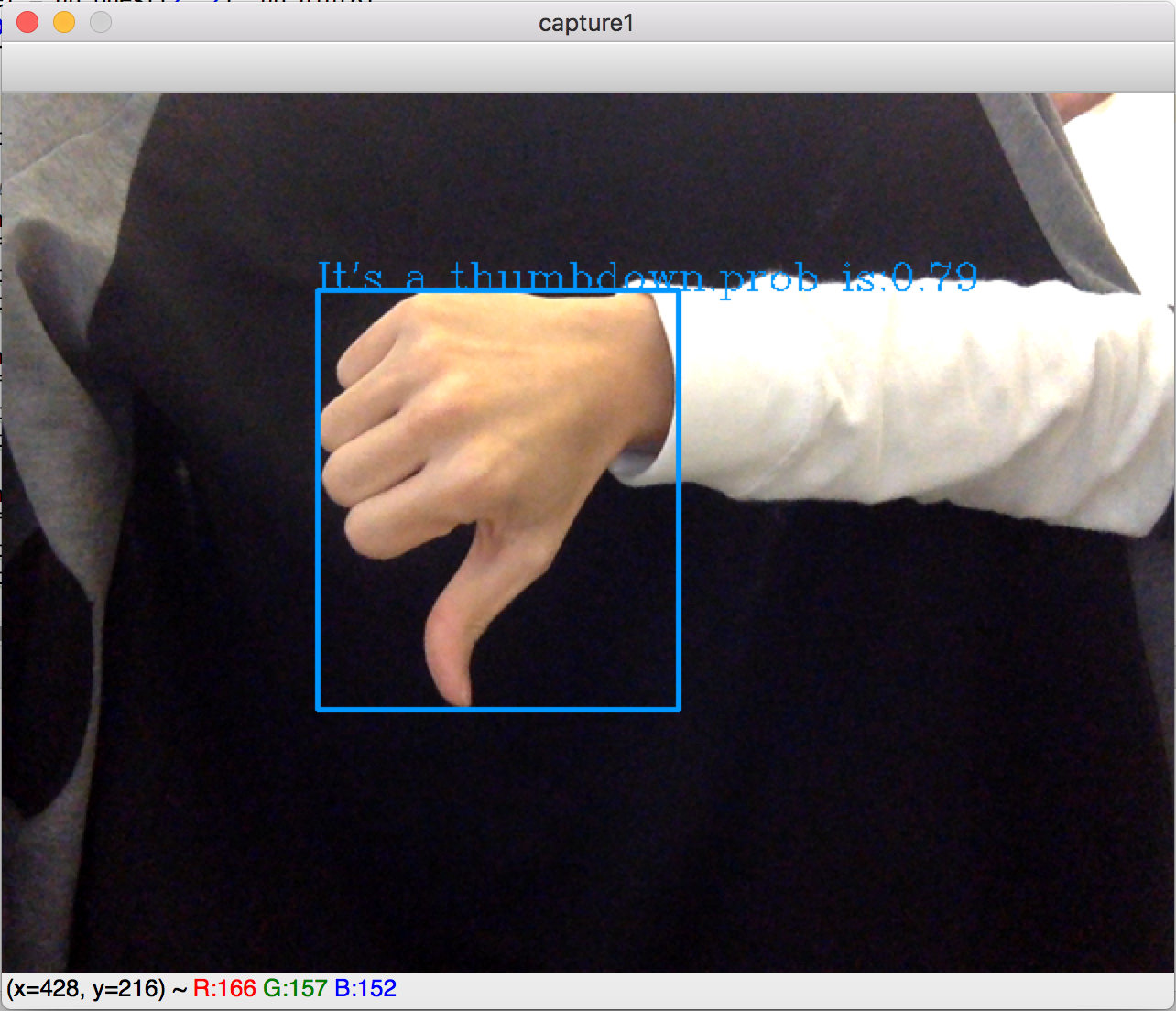

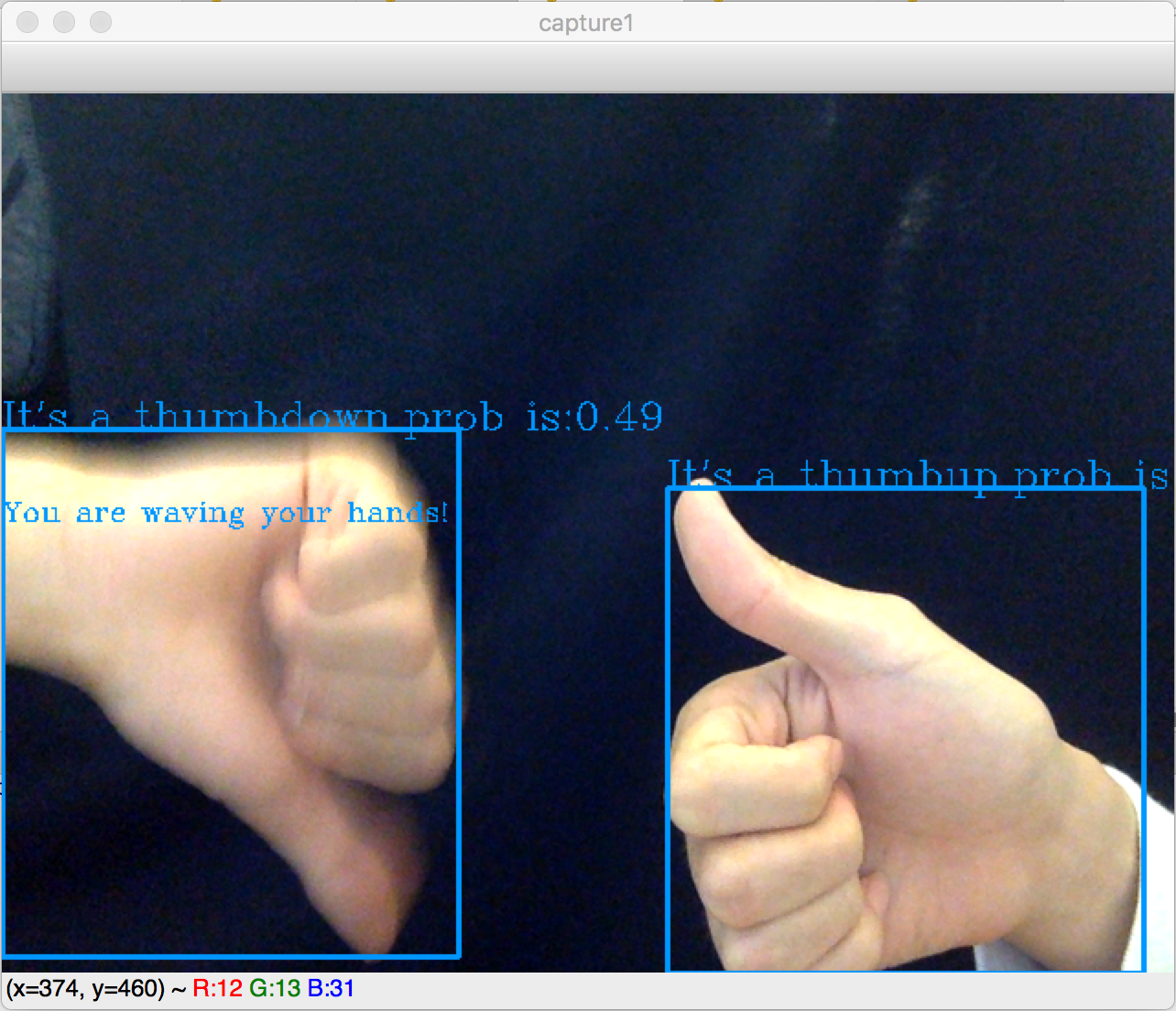

The results of gesture and motion detection are shown below:

| Hand Shape Name | Result |
|---|---|
| Peace |  |
| Fist |  |
| Thumbup |  |
| Thumbdown |  |
| Separate detection for gesture |  |
| Motion detection |  |
| Separate detection for motion |  |

A confusion matrix for gesture recognition is shown below:

| Hand Shape | Peace | Fist | Thumbup | Thumbdown |
|---|---|---|---|---|
| Peace | 5 | 0 | 0 | 0 |
| Fist | 0 | 6 | 0 | 0 |
| Thumbup | 3 | 0 | 7 | 0 |
| Thumbdown | 0 | 2 | 1 | 8 |

| Motion | Waving | Not Waving |
|---|---|---|
| Waving | 8 | 0 |
| Not Waving | 0 | 8 |
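As a quick way to read the two confusion matrices above, the sketch below computes the overall accuracy, which does not depend on whether rows or columns hold the true class. The report does not state the orientation. Every column sums to 8, which suggests (as an assumption only) that each column is a performed gesture tested 8 times, and `per_column_rate` is computed on that basis. The function names are illustrative.

```python
gesture_labels = ["Peace", "Fist", "Thumbup", "Thumbdown"]
gesture_matrix = [
    [5, 0, 0, 0],
    [0, 6, 0, 0],
    [3, 0, 7, 0],
    [0, 2, 1, 8],
]
motion_matrix = [
    [8, 0],
    [0, 8],
]


def overall_accuracy(m):
    """Fraction of trials on the diagonal (orientation-independent)."""
    correct = sum(m[i][i] for i in range(len(m)))
    total = sum(sum(row) for row in m)
    return correct / total


def column_sums(m):
    """Number of trials recorded in each column."""
    return [sum(row[j] for row in m) for j in range(len(m[0]))]


def per_column_rate(m):
    """Diagonal entry divided by its column total.

    This is the per-gesture recognition rate only under the assumption
    that columns are the performed gesture.
    """
    return [m[j][j] / s for j, s in enumerate(column_sums(m))]


print(overall_accuracy(gesture_matrix))  # 0.8125 (26 of 32 trials)
print(column_sums(gesture_matrix))       # [8, 8, 8, 8]
print(dict(zip(gesture_labels, per_column_rate(gesture_matrix))))
print(overall_accuracy(motion_matrix))   # 1.0 (16 of 16 trials)
```

Under the column assumption, Peace is recognized in 5 of 8 trials, Fist in 6 of 8, Thumbup in 7 of 8, and Thumbdown in 8 of 8.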