Kaihong Wang

Teammate names: Qitong Wang, Yuankai He

Date Dec 7, 2018

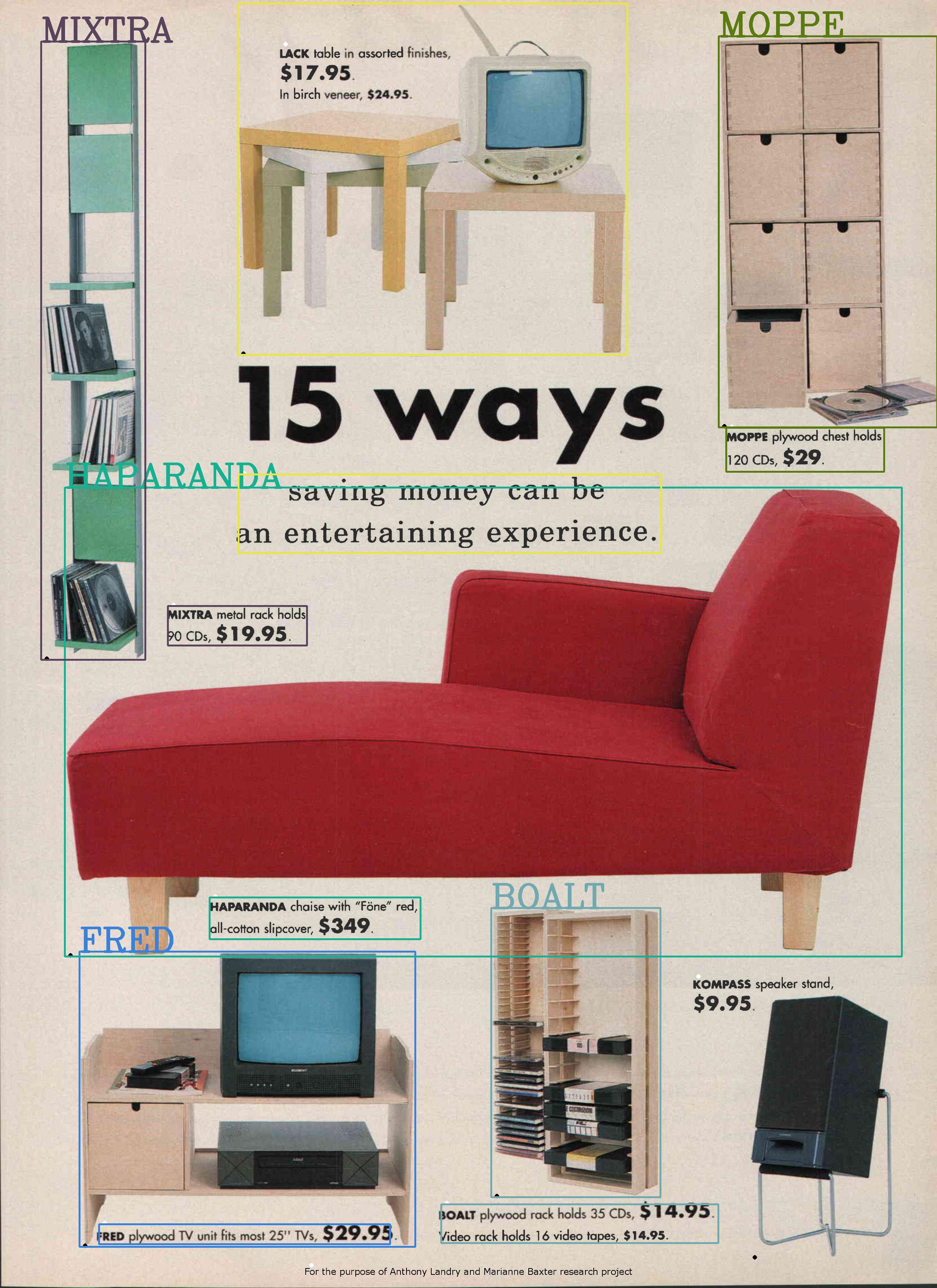

We will be given pictures collected from IKEA catalogs and our goal is to localize, identify both objects and captions of them and to associate corresponding objects and text using their spacial and semantic infomation.

Concretely, we will handle two kinds of image in this program, they are images with subscripts and images without subscripts. For images with subscripts, objects and corresponding texts are conntected with identical subscripts appear in both of them. For images without subscripts, texts are always shown nearby the corresponding images.

The ultimate purpose of the program is to automatically find out the association between corresponding texts and images by highlighting them using bounding boxes with same color.

The input data of the project will be like images shown below:

At the end of the project, we expect the output of our system to be like:

We can basically break down our problem into three parts: Object Segmentation,Text Segmentation, Data Association.

Part 1: Segmentation of Object:

For the first part, we will need localize the position of object so we can highlight its contour and indicate text related with it. The challenge may include: identifying whether a patch is a part of an object or background; identifying whether an object detected is an valid commodity or side bar of the magazine that used to decorate the page; some objects might have similar color with the background so their border will be hard to detected. Here is an example.

Part 2: Segmentation and Identification of Text

For the second part, in order to connect the text and image together, we need to extract the text in Ikea calalogs. We found that Google Vision API was a very useful tool. Here is a result of extracting text.

Part 3: Data association: After having segmentation of objects and texts, we need to associate images with their corresponding texts so that we can tell which text is describing a specific image.

Part 1: Localization and Extraction of Texts

In order to get the content and location of each text paragraph. We need to use Google Vision API to reach our goal. Google Vision API is a powerful tool which can accurately extracting the location and content of texts in image (OCR). After getting each text paragraph's content and location. We store them for next steps.

>Using the contents of texts from Ikea catalogs, we can judge Ikea images' classes. Using the locations of texts from Ikea catalogs, we can drop all the texts from Ikea Images (See Part 2).

Here are some examples using Google vision API to extract texts from Ikea catalogs:

Part 2: Image Classification

Consider that typographic style of Ikea catalog is various, so we firstly judge what kind of Typographic style the input image are. For convenience, we consider 2 most general Typographic style:

The image with subscript

The image without subscript

We need to consider different processing methods for 2 different kinds of images.

Here is the pipeline of 2 kinds of images' processing methods:

Before object detecting, we used OpenCV tool to fill the texts part in Ikea catalog images using background color. The main purpose of doing this step is that the text in the Ikea catalog can cause negative effect in the object segmentation part.

Here is some examples of Ikea catalogs without any testx:

Part 3: Segmentation of Objects

The first challenge of processing these images is that many images have dirty border due to the mistake during the collection of dataset. Since these border have significantly different color from background color of the image, these border are very likely to be recognized as objects in images which will greatly impair the performance of detection of objects in images. To overcome this problem, we detect black pixels at the brim of image and let them white which is similar with the background color. After that, we convert image into grayscale image and binarize it to get a binary image. We use some basic image processing operation to denoise the binary image and to find the contour of every objects in the image so that we can localize them. However, we notice that some images have extremely small gap from each other so for most circumstances, system take them as one connected object. To cope with this problem, we use edge detection to detect gap between objects. Then we use dilated mask of edge to subtract the original binary image so that gap between objects will be enlarged and finally we can have clearly segmented objects in images.

Here is the pipeline of object detection. Notice that different kinds of iamges have all the same object detection processing methods.

Part 4: Template matching and senamic matching:

For the image having subscript. we need to do template matching to recognise the subscripts from object images (Notice that Google Vision API cannot recognise the subscript from object images completely).

We store all the subscripts from object iamges as templates, then using OpenCV template matching function to get all template's matching probabilities. And we pick the max probabilty's template as the number of the object images.

The pipeline of template matching is as fellows:

For Ikea catalogs having subscripts, we haven known object images' subscripts using template matching, and texts' subscripts from Google vision API. We can match objects and texts having ths same subscripts, finishing matching work.

Part 5: Data association

For images without subscripts, we use Hungarian algorithm to match texts and objects according to their position.

Some results of Ikea catalogs:

(1) Image having subscripts:

(2) Image without subscripts:

We pick 105 Ikea catalog images, we pick all the object-texts pair as our basic unit of calculating system's accuracy.

Total numbers of object-texts pair: 650

Correct object-texts pair: 523

Wrong object-texts pair caused by Google Vision API: 34

Wrong object-texts pair caused by Object Detection and Template MAtching: 60

Wrong object-texts pair caused by Data Association: 33

Total Accuracy: 80.46%

(1) Google Vision API's accuracy cannot reach up to 100%, which can cause error when detecting texts' content and location

(2) Some objects' color are quite similar to the background color so that the system cannot locate the whole object images.

Here is an example:

(3) There is little possibility that the closest texts to one object images might not their correct texts.

(1) Improving the objkect detection's accuracy by trying other techniques such as CNN, FCN, etc.

(2) Trying other effective data association methods.

(3) Trying to connect another kinds of Ikea catalogs' objects and their texts.

We bulid a computer vision system which can connect the objects in the Ikea catalogs with their texts, and this system can be applied more than one kinds of Ikea catalogs.

(1) Qitong Wang bulit the pipeline of system and finished the template matching and text detection work.

(2) Kaihong Wang finished object detection work.

(3) Yuankai He finished the data association work and tested our computer system.

We would like to express our thanks to our instructor, Professor. Margrit Betke, and all the classsmates who helped us during the process of taking CS585 courses amd finished our project.