Research Projects

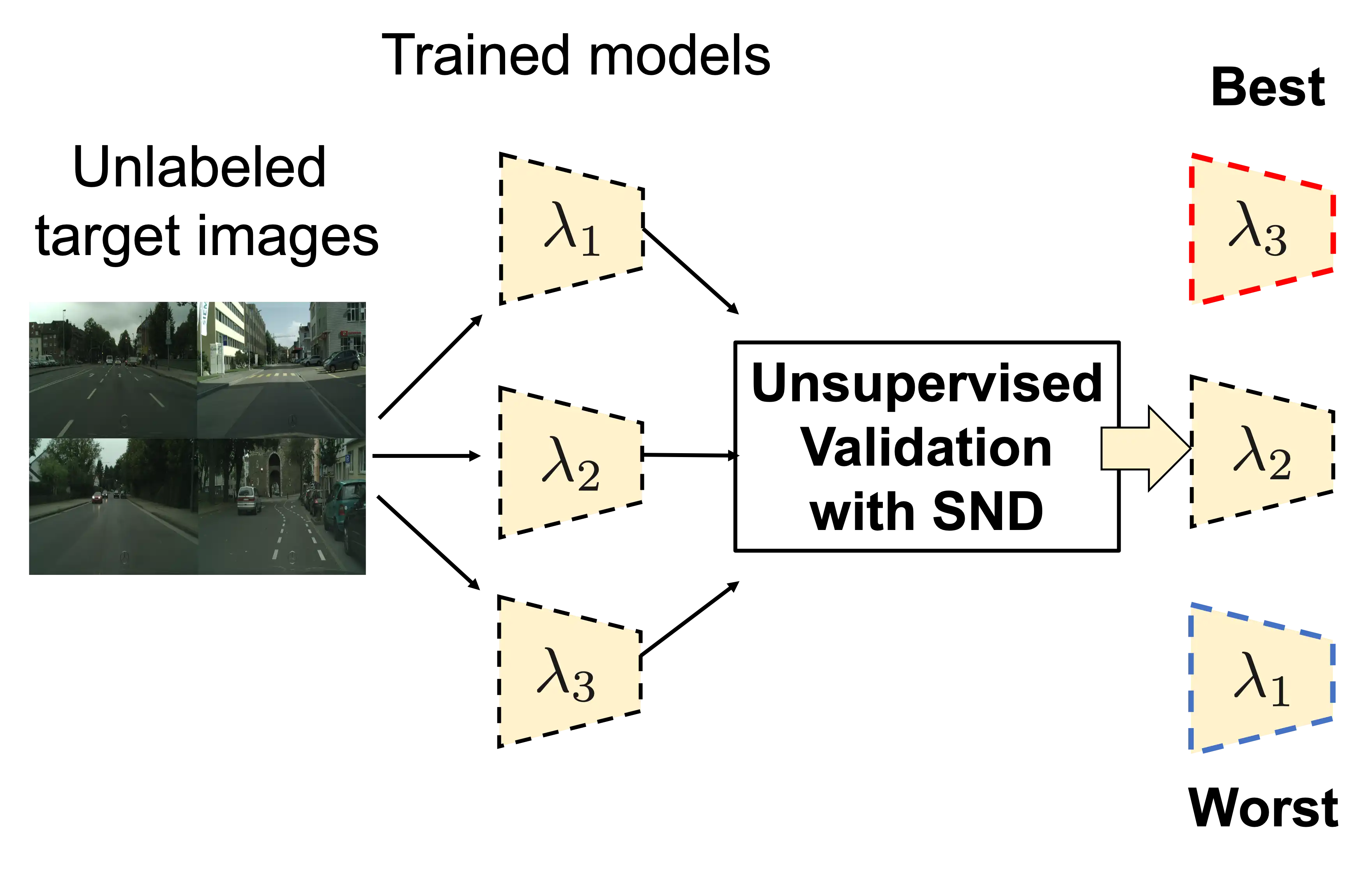

Tune it the Right Way: Unsupervised Validation of Domain Adaptation via Soft Neighborhood Density

Kuniaki Saito, Donghyun Kim, Piotr Teterwak, Stan Sclaroff, Trevor Darrell, and Kate Saenko ICCV 2021

Paper View Project Code

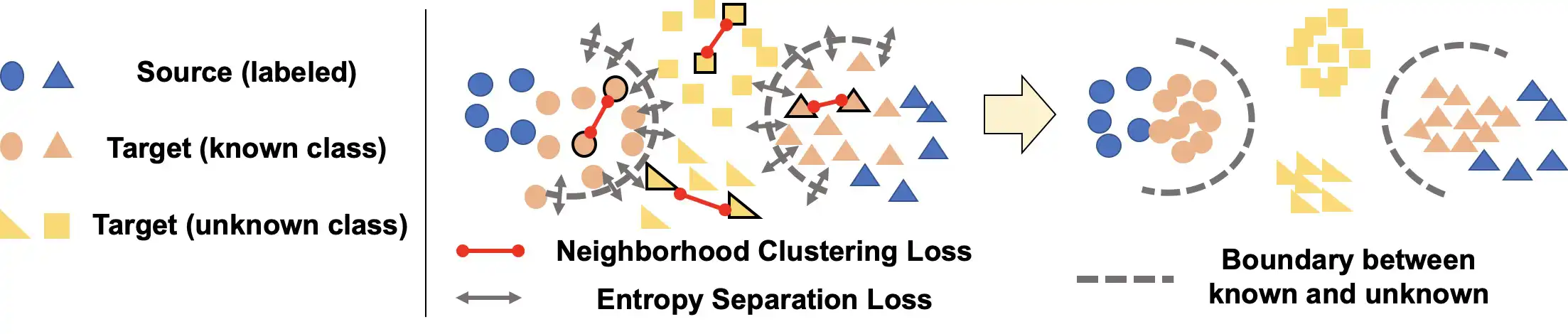

OVANet: One-vs-All Network for Universal Domain Adaptation

Kuniaki Saito and Kate Saenko ICCV 2021

Paper Slides View Project Github

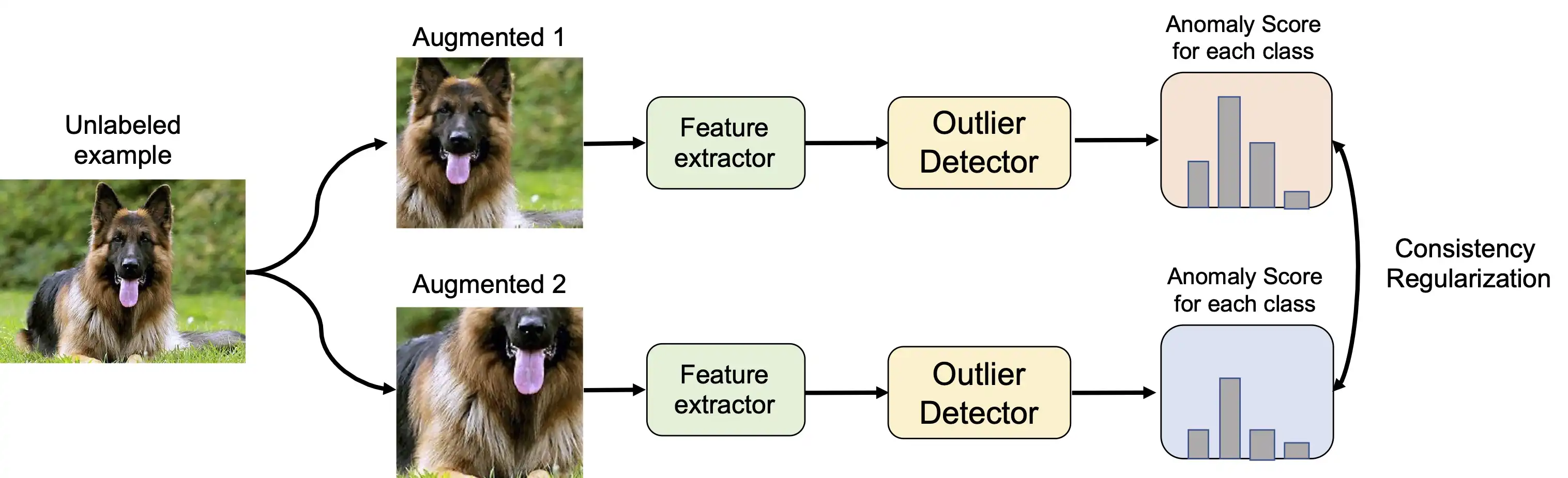

Universal Domain Adaptation through Self-Supervision

Kuniaki Saito, Donghyun Kim, Stan Sclaroff, and Kate Saenko NeurIPS 2020

Paper View Project Github

COCO-FUNIT: Few-Shot Unsupervised Image Translation with a Content Conditioned Style Encoder

Kuniaki Saito, Kate Saenko and Ming-Yu Liu ECCV 2020

Unsupervised image-to-image translation aims to learn a mapping from an image in a given domain to an analogous image in a different domain, without explicit supervision of the mapping. Few-shot unsupervised image-to-image translation further attempts to generalize the model to an unseen domain by leveraging example images of the unseen domain provided at inference time. While remarkably successful, existing few-shot image-to-image translation models struggle to preserve the structure of the input image while emulating the appearance of the unseen domain, which we refer to as the content loss problem. The problem is particularly severe when the poses of the objects in the input and example images are very different. To address it, we propose a new few-shot image translation model that computes the style embedding of the example images conditioned on the input image, and we introduce a new architecture design called the universal style bias. Extensive experiments comparing our model with state-of-the-art methods show that it effectively addresses the content loss problem.

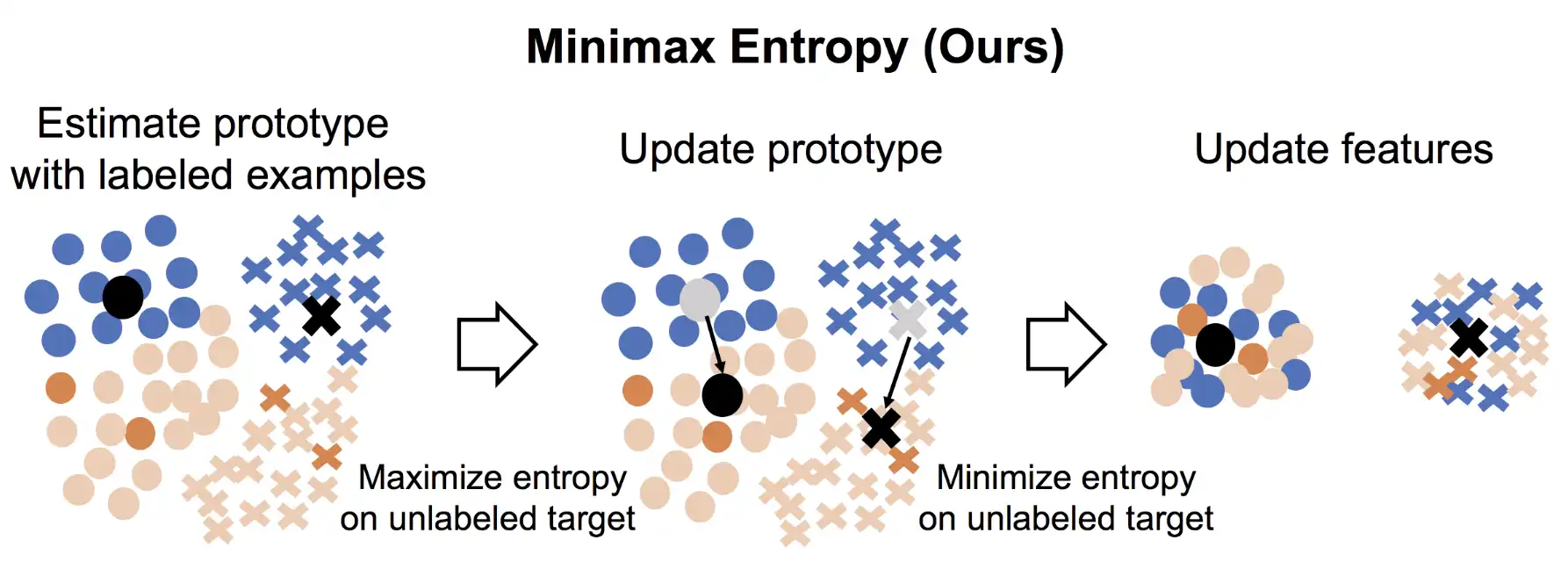

Paper View Project

Semi-supervised Domain Adaptation via Minimax Entropy

Kuniaki Saito, Donghyun Kim, Stan Sclaroff, Trevor Darrell and Kate Saenko ICCV 2019

We propose a method for semi-supervised domain adaptation, in which a few labeled target examples are available in addition to unlabeled target examples, and solve the problem with a new adversarial training method based on minimax entropy.
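As a rough illustration of the minimax-entropy idea named in the title, the sketch below computes the mean entropy of predictions on unlabeled target features and the two opposite-signed objectives: one pushes the classifier toward higher entropy, the other pushes the feature extractor toward lower entropy. The cosine-similarity classifier, the temperature `T`, the weight `lam`, and all function names are assumptions for illustration, not the released code.

```python
import numpy as np

def softmax(logits):
    # Numerically stable softmax over the last axis
    z = logits - logits.max(axis=-1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)

def cosine_logits(features, prototypes, T=0.05):
    # Hypothetical cosine-similarity classifier: L2-normalize features and
    # class prototypes, then scale their similarities by 1/T
    f = features / np.linalg.norm(features, axis=1, keepdims=True)
    w = prototypes / np.linalg.norm(prototypes, axis=1, keepdims=True)
    return f @ w.T / T

def mean_entropy(probs, eps=1e-12):
    # Average Shannon entropy of the predicted class distributions
    return float(-(probs * np.log(probs + eps)).sum(axis=1).mean())

def minimax_entropy_objectives(target_features, prototypes, lam=0.1):
    # Entropy of the predictions on unlabeled target samples
    h = mean_entropy(softmax(cosine_logits(target_features, prototypes)))
    # Classifier step: minimizing -lam * H maximizes entropy
    classifier_loss = -lam * h
    # Feature-extractor step: minimizing +lam * H minimizes entropy
    feature_loss = lam * h
    return classifier_loss, feature_loss

rng = np.random.default_rng(0)
feats = rng.normal(size=(8, 16))   # toy unlabeled target features
protos = rng.normal(size=(3, 16))  # toy class prototypes (3 classes)
c_loss, f_loss = minimax_entropy_objectives(feats, protos)
print(c_loss, f_loss)  # equal magnitude, opposite sign
```

In an autodiff framework, the two objectives would be applied to different parameter sets (the prototypes versus the feature extractor), for example through a gradient-reversal layer, alongside the usual cross-entropy on labeled examples; this NumPy version only evaluates the losses.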

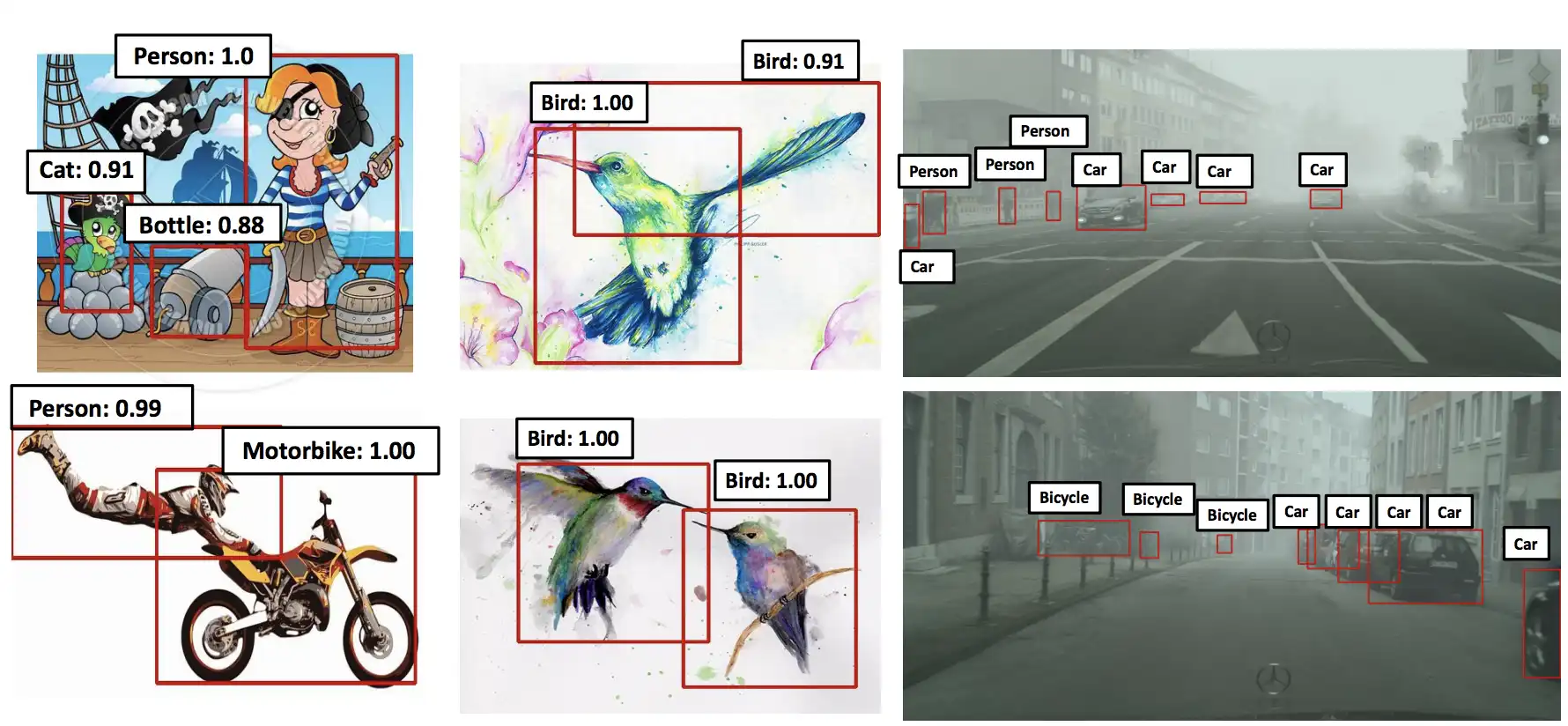

Paper View Project Github

Strong-Weak Distribution Alignment for Adaptive Object Detection

Kuniaki Saito, Yoshitaka Ushiku, Tatsuya Harada and Kate Saenko CVPR 2019

We propose a novel method for domain adaptive object detection. We perform weak distribution alignment on high-level global features and strong distribution alignment on low-level local features. Our method outperforms other baselines by a large margin in four adaptation scenarios.
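One way to make alignment "weak" or "strong" is through the loss used to train the domain classifier. The sketch below assumes a focal-loss-weighted domain loss for global features, which down-weights samples whose domain is easy to tell apart, and a plain least-squares domain loss for local feature maps, which penalizes every position equally. The value of `gamma` and the function names are illustrative assumptions, not the paper's exact configuration.

```python
import numpy as np

def focal_domain_loss(p_source, domain_labels, gamma=5.0, eps=1e-12):
    # p_source: predicted probability that each global feature comes from the source
    # domain_labels: 1 for source images, 0 for target images
    # p_true is the probability the domain classifier assigns to the correct domain
    p_true = np.where(domain_labels == 1, p_source, 1.0 - p_source)
    # (1 - p_true) ** gamma shrinks the loss of easily classified samples,
    # so alignment pressure concentrates on hard, ambiguous samples (weak alignment)
    return float(np.mean(-((1.0 - p_true) ** gamma) * np.log(p_true + eps)))

def least_squares_domain_loss(p_source_map, domain_label):
    # Strong alignment: every local position contributes a squared error
    # toward its domain label, regardless of how easy it is to classify
    return float(np.mean((p_source_map - domain_label) ** 2))

labels = np.array([1, 0])
easy = focal_domain_loss(np.array([0.95, 0.05]), labels)  # domains clearly separated
hard = focal_domain_loss(np.array([0.55, 0.45]), labels)  # domains ambiguous
print(easy < hard)  # True
```

With `gamma=0` the focal term reduces to ordinary binary cross-entropy, which makes the contrast between the weighted (weak) and unweighted (strong) objectives easy to inspect.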

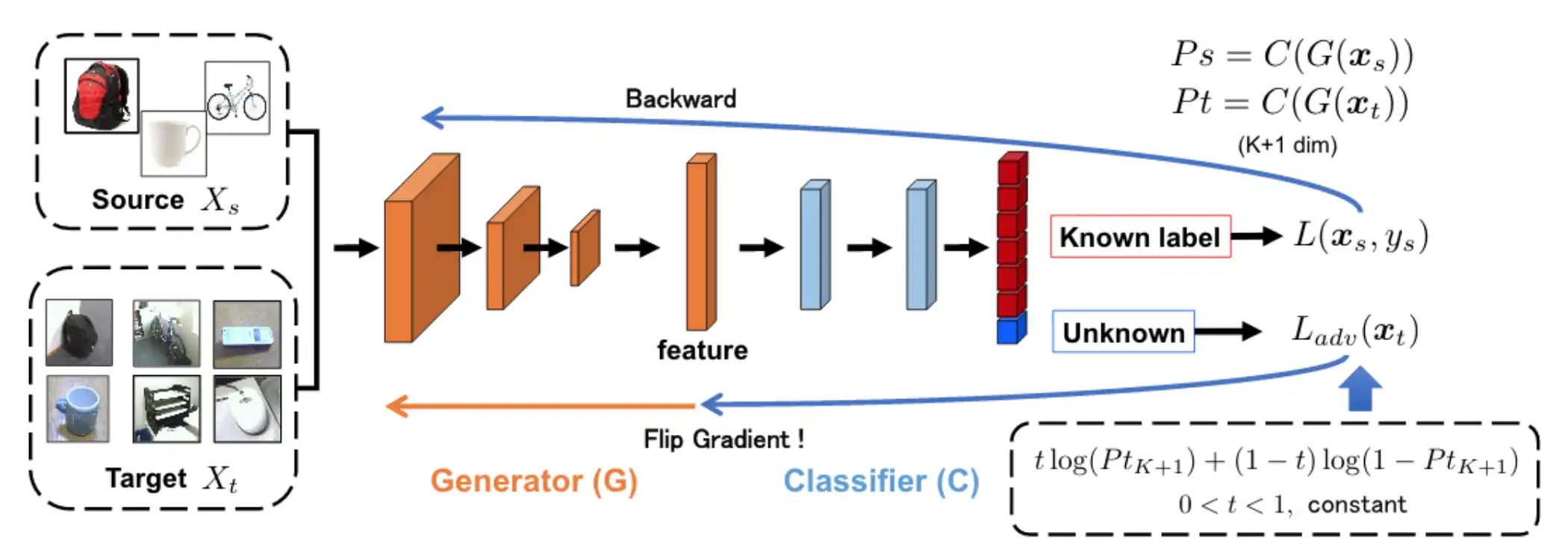

Paper View Project Github

Open Set Domain Adaptation by Backpropagation

Kuniaki Saito, Shohei Yamamoto, Yoshitaka Ushiku and Tatsuya Harada, ECCV 2018

We propose a novel method for open set domain adaptation, where the target domain includes categories absent from the source domain. We give the classifier an option to reject samples as unknown and achieve this through adversarial learning. Our method outperforms other methods by a large margin on various tasks.
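A minimal sketch of the rejection option, assuming a classifier with K known classes plus one extra "unknown" output, and an adversarial loss that is a binary cross-entropy between p(unknown | x_t) and a fixed boundary value `t` (here 0.5). Under this assumption the classifier minimizes the loss, pulling p(unknown) toward `t`, while the feature generator maximizes it (for example via reversed gradients), pushing each target sample toward either the known or the unknown side. Names and the value of `t` are illustrative.

```python
import numpy as np

def softmax(logits):
    # Numerically stable softmax over the last axis
    z = logits - logits.max(axis=-1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)

def open_set_boundary_loss(target_logits, t=0.5, eps=1e-12):
    # target_logits: (N, K+1) classifier outputs on unlabeled target samples;
    # the last column is the extra "unknown" class
    p_unknown = softmax(target_logits)[:, -1]
    # Binary cross-entropy between p(unknown | x_t) and the fixed value t
    bce = -(t * np.log(p_unknown + eps) + (1.0 - t) * np.log(1.0 - p_unknown + eps))
    return float(bce.mean())

balanced = np.array([[0.0, 0.0, np.log(2.0)]])  # p(unknown) = 0.5
confident = np.array([[0.0, 0.0, 5.0]])         # p(unknown) close to 1
print(open_set_boundary_loss(balanced))   # about 0.693 (log 2, the minimum when t = 0.5)
print(open_set_boundary_loss(confident))  # larger: the sample has left the boundary
```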

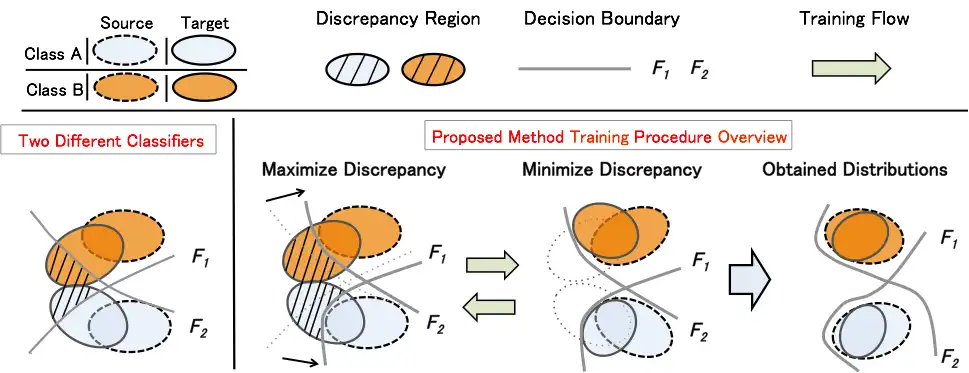

Paper Github

Maximum Classifier Discrepancy for Unsupervised Domain Adaptation

Kuniaki Saito, Kohei Watanabe, Yoshitaka Ushiku and Tatsuya Harada, CVPR 2018 oral

We propose a new approach that aligns the distributions of the source and target domains by utilizing task-specific decision boundaries. We use task-specific classifiers as discriminators that try to detect target samples far from the support of the source. Our method outperforms other methods on several image classification and semantic segmentation datasets.
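The disagreement between two task-specific classifiers can be sketched as below, assuming it is measured as the mean absolute difference between their softmax outputs on target samples. Target samples far from the source support are where the two classifiers disagree most. The function names are hypothetical, and the alternating schedule is only summarized in comments: train both classifiers on source, then maximize the discrepancy on target with the feature extractor fixed, then update the feature extractor to minimize it.

```python
import numpy as np

def softmax(logits):
    # Numerically stable softmax over the last axis
    z = logits - logits.max(axis=-1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)

def classifier_discrepancy(logits1, logits2):
    # Mean absolute difference between the two classifiers' class probabilities,
    # averaged over classes and over target samples
    p1, p2 = softmax(logits1), softmax(logits2)
    return float(np.mean(np.abs(p1 - p2)))

# Assumed use in training (not implemented here):
#   step A: train the feature extractor and both classifiers on labeled source data
#   step B: fix the feature extractor; update the classifiers to MAXIMIZE
#           the discrepancy on target (while keeping source accuracy)
#   step C: fix the classifiers; update the feature extractor to MINIMIZE it
same = np.array([[1.0, 2.0, 3.0]])
print(classifier_discrepancy(same, same))  # 0.0: the classifiers agree
print(classifier_discrepancy(np.array([[10.0, -10.0]]),
                             np.array([[-10.0, 10.0]])))  # close to 1: maximal disagreement
```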

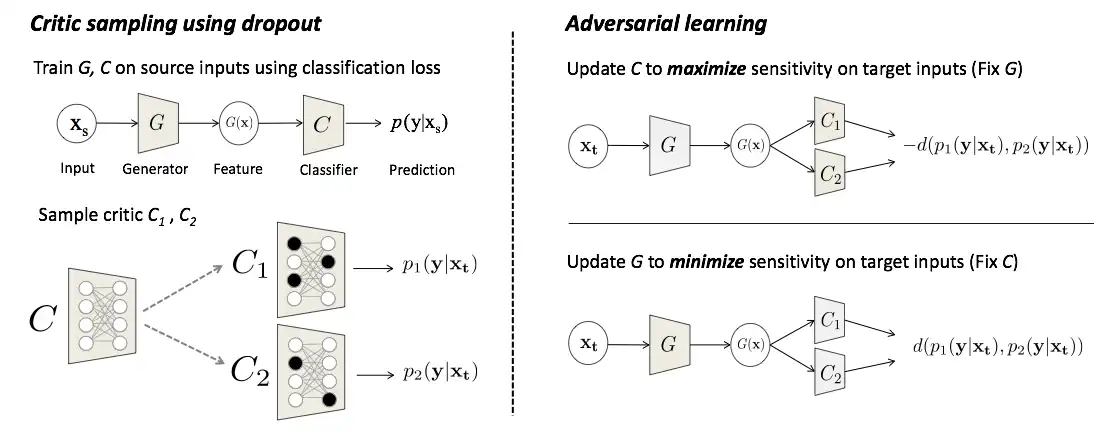

Paper View Project Code Slides

Adversarial Dropout Regularization

Kuniaki Saito, Yoshitaka Ushiku, Tatsuya Harada and Kate Saenko, ICLR 2018

We propose a novel method for unsupervised domain adaptation. The method is based on adversarial learning and makes effective use of dropout. It outperforms other methods on digit classification, object classification, and semantic segmentation tasks.
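A minimal sketch of how dropout can serve as an adversarial critic, assuming the signal is the symmetric KL divergence between two predictions of the same classifier under independently sampled dropout masks. Under this assumption the classifier would be trained to maximize this sensitivity on target samples and the feature generator to minimize it, moving target features away from regions where small perturbations flip the decision. The linear classifier `W`, the dropout rate, and all names are illustrative assumptions.

```python
import numpy as np

def softmax(logits):
    # Numerically stable softmax over the last axis
    z = logits - logits.max(axis=-1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)

def dropout(h, rate, rng):
    # Inverted dropout: zero each unit with probability `rate`, rescale survivors
    mask = rng.random(h.shape) >= rate
    return h * mask / (1.0 - rate)

def symmetric_kl(p, q, eps=1e-12):
    # KL(p || q) + KL(q || p) per sample; eps guards against log(0)
    p, q = p + eps, q + eps
    return np.sum(p * np.log(p / q) + q * np.log(q / p), axis=1)

def dropout_sensitivity(features, W, rate=0.5, seed=0):
    # Two stochastic passes through the same linear classifier W,
    # each with its own dropout mask on the input features
    rng = np.random.default_rng(seed)
    p1 = softmax(dropout(features, rate, rng) @ W)
    p2 = softmax(dropout(features, rate, rng) @ W)
    # Large values mean the prediction is sensitive to which units are dropped
    return float(symmetric_kl(p1, p2).mean())

rng = np.random.default_rng(1)
feats = rng.normal(size=(16, 32))  # toy target features
W = rng.normal(size=(32, 4))       # toy linear classifier with 4 classes
print(dropout_sensitivity(feats, W, rate=0.0))  # 0.0: no dropout, identical passes
print(dropout_sensitivity(feats, W, rate=0.5))  # positive
```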

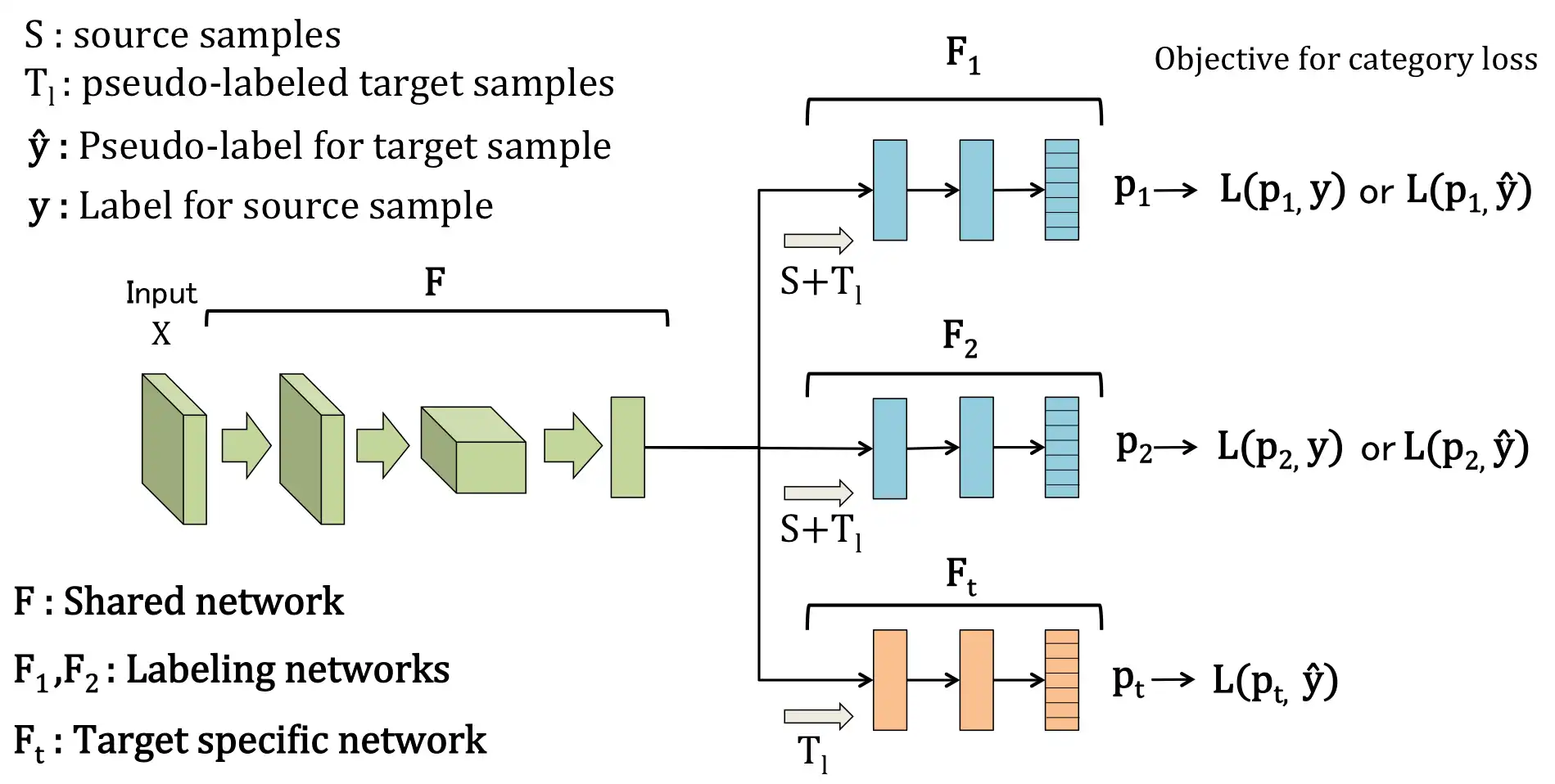

Paper View Project Code

Asymmetric Tri-training for Unsupervised Domain Adaptation

Kuniaki Saito, Yoshitaka Ushiku and Tatsuya Harada ICML 2017

We propose an asymmetric tri-training method for unsupervised domain adaptation, in which we assign pseudo-labels to unlabeled samples and train the neural networks as if they were true labels. We use three networks asymmetrically: two networks label the unlabeled target samples, and the third network is trained on the pseudo-labeled samples to obtain target-discriminative representations. Our method achieves state-of-the-art performance on benchmark digit recognition datasets for domain adaptation.
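The labeling step can be sketched as a filter over the two labeling networks' predictions. This assumes a target sample receives a pseudo-label only when both networks predict the same class and at least one of them is more confident than a threshold; the threshold value and the function name are illustrative assumptions. The selected samples and labels would then be used to train the third, target-specific network.

```python
import numpy as np

def assign_pseudo_labels(p1, p2, threshold=0.9):
    # p1, p2: (N, K) class probabilities from the two labeling networks
    y1, y2 = p1.argmax(axis=1), p2.argmax(axis=1)
    agree = y1 == y2
    # Require at least one of the two networks to be confident
    confident = np.maximum(p1.max(axis=1), p2.max(axis=1)) > threshold
    keep = agree & confident
    # Indices of the selected target samples and their pseudo-labels
    return np.flatnonzero(keep), y1[keep]

p1 = np.array([[0.95, 0.05], [0.60, 0.40], [0.20, 0.80]])
p2 = np.array([[0.90, 0.10], [0.55, 0.45], [0.97, 0.03]])
idx, labels = assign_pseudo_labels(p1, p2)
# Sample 0: agree and confident -> kept; sample 1: agree but not confident;
# sample 2: the networks disagree
print(idx, labels)  # [0] [0]
```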

Paper