Problem Definition

1. In part 1, implement connected component labeling algorithm, implement boundary following algorithm, implement orientation, circularity, area, compactness, boundary length calculation algorithms, implement skeleton finding algorithm. 2. In part 2, implement 2 segmentation algorithms, and convert color image sequence input into binary video output. Use part 1 algorithm to analysis data.

Method and Implementation

Part 1:

1. Apply StackConnectedComponents (Improved DFS like algorithm) to find connected component labeling algorithm. First assign all pixels to a certain value. And find a point to start, push all neighbors that have certain value to a stack. Every time pop a point from stack, and keep push all its neighbors to stack. Keep doing this until all pixels labeled.

2. In all labeled objects, filter objects that have small size.

3. Apply boundary following algorithm, that is: Start from a boundary point on object, start from this object, clockwise find next boundary pixel. Keep doing this until find that the start point again.

4. Calculate average x value, average y value of each object. Then calculate (x-x_ave)**2, (y-y_ave)**2, (x-x_ave)(y-y_ave) value of each object, that is our a, b, c. Than use this and arctan to get alpha orientation. Then use this to calculate E_min, E_max. Then get circularity. All calcualte boundary euclidean full length, times 4 pi, and divide by area of object. Then we get compactness.

5. For each point in object, find boundary pixel that have minimum distance to this point. Then for each bounary pixel, find the largest distance point. Then all these points are the skeleton of this object.

Part 2:

1. First implement absolute threshold. For grayscale, make all gray pixel over absolute threshold as white, others as black.

2. Second implement iterative threshold. First use average gray value of that image, then split images to two sections, and calculate average gray value for each section, then use average of each section's average gray value as new threshold. Then generate new sections. Keep doing this until average gray value of each section don't change much.

3. Also developed a background threshold, this is similar to adaptive threshold. Use average image of all images as background, compare image with this background. If gray value difference is larger than a certain value, make that pixel as white, otherwise black.

Experiments

Part 1:

1. Use self implemented StackConnectedComponents to test. The input image should be binary image with white background, black objects. When objects is small, it takes less than 1s to calculate. But when object is too large, it will cost more time, this is caused by the stack tech I used. The larger the object is, the more pixels in it, and this will take more time in stack push (I used deque here, also I need to make sure there is no duplicate point pushed to that deque, so this takes a lot of time). Running time for this is about 1-4 seconds for given image, for object that are super large (like almost full image), this may takes over 10s to calculate.

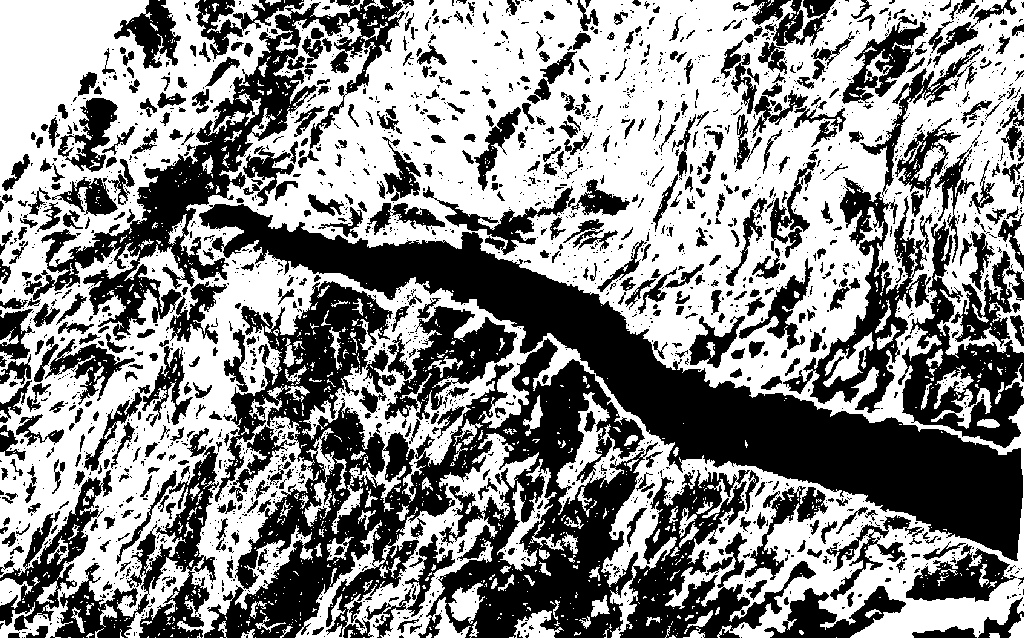

2. Each picture is not similar. For open_fist-bw image, I use size 50 as filter. For open-bw-full, I can just use 10 as filter. For open-bw-partial I use 100 as filter. For tumor-fold image I use 10 as filter.

3. Its very quick to calculate boudnary for an object. Takes less then 1s to calculate it, even for a large object.

4. All math part involved is actually very simple. This part can be done in less than 1s.

5. This algorithm's running time varies. The larger the object is or the longer the boundary is, the more time it will take. For a super large object, this will take more than 10s. Complexity for this algorithm is O(mn) where m is the total pixel number of an object and n is the boundary length.

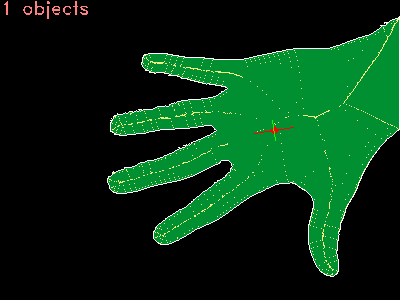

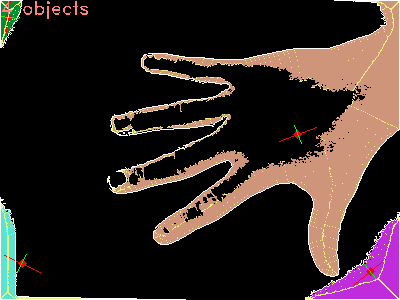

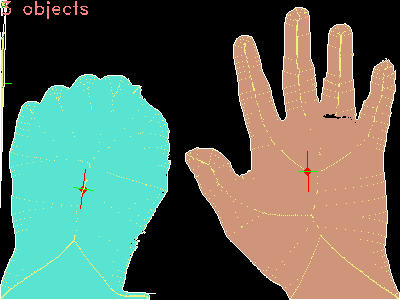

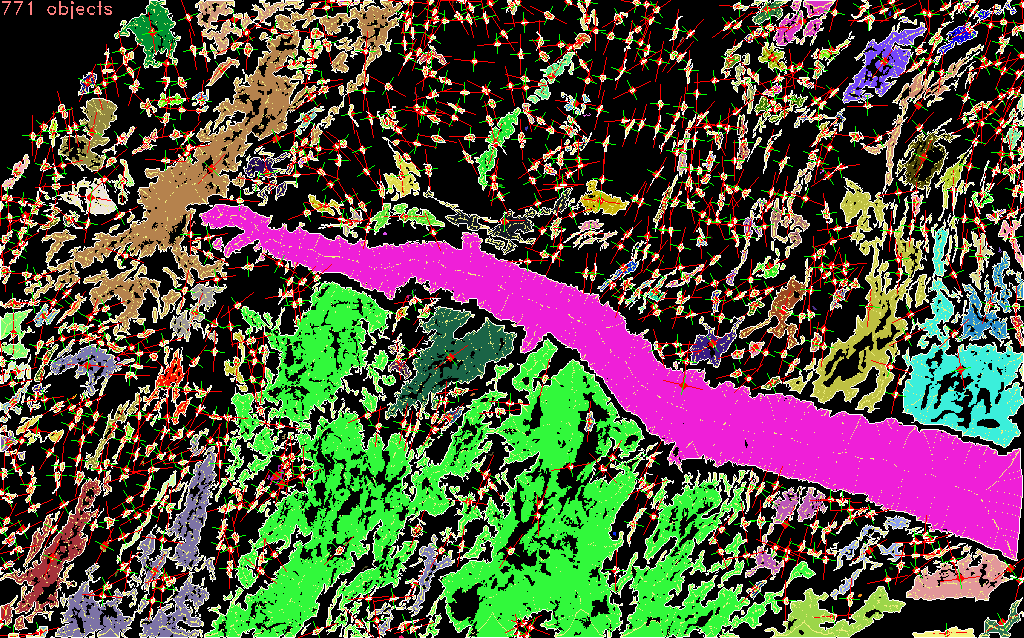

Each object is labeled with a random color, and use a red point to represent the centroid of that object. The green line cross it is the least inertia line, and the red line cross it is the largest inertia line. Boundary is draw in white. Skeleton is draw in yellow points.

Part 2:

1. This absolute threshold algorithm is actually very simple. Will done calculation in microseconds. Tried many thresholds, threshold 140 or larger can give a relativly good segmentation in bat frames, but not good enough. Small bat cannot be recognized. And hand in piano images cannot be segmentated by algorithm, also this don't works well for people frames.

2. Iterative threshold is also not good enough. It will recognize all the bottom part of the image as an object in bat video. This is bad. Also This cannot segment hand on piano or people well.

3. Background threshold (Adaptive threshold). This works well on bat segmentation, even small bats under great noise can be found. But not so good for hand detection. It will still get confused where is piano keys and where are hand (because of the light, the color of keys and color of hands are so similar). For people frames,use next frame as backgound (Similar idea as motion energy), and after background threshold, apply a dilation and erosion will be helpful to get people sections out. But still cannot find out correctly where people is.

Results

Part 1: Output of this part are saved as images:open_fist-bw-labeled.png, open-bw-partial-labeled.png, tumor-fold-labeled.png, open-bw-full-labeled.png

Results | ||

| Trial | Source Image | Result Image |

| trial 1 |  |

|

| trial 2 |  |

|

| trial 3 |  |

|

| trial 4 |  |

|

Part 2: output are saved as videos.

Results | ||

| Trial | Segmentation Video | Labeled Video |

| Piano | Piano_segmentation | Piano_Labeled |

| Bat | Bat_segmentation | Bat_Labeled |

| People | People_segmentation | People_Labeled |

Discussion

Discussion about the algorithms I used:

-

Part 1

- The StackConnectedComponents I implemented will be easy for computer to process, so this can avoid the problem that computer cannot process too depth recursion in DFS. But there also exist a problem that check push a new point to stack, I have to check if it has already been there. If so, I have to avoid push it. This will bring extra cost and very time consuming when dealing with large object. If implement the compare between points, I can just use set or map to store them, the checking will be faster.

- For size filter, it works well. Just filter all the unnecssary part.

- For boundary following algorithm, the one I implemented actually works well, and it don't take much time to calculate it. But it cannot detect inside boundary. Also I used a lot of unnecessary lines to impelment it. If will organized, I can do better.

- For orientation calcualation, and compactness, it actually don't takes long. And it works well!

- For skeleton detection, I have to calculate the distance from a point to every boundary. If the object is large or boundary is complex, this will cost a lot of unnecessary time. If I have use BFS to search for the first boundary point, this will take less time.

-

Part 2

- Both absolute threshold and iterative threshold don't work very well. So I use background threshold (adaptive threshold) to finish the job. But this algorithm require a statistic background all the time and the background used in this algorithm should be very clean and clear. I just used average of all image to generate the background, with is actually not a good way to do this.

- Backgound threshold works very well in bat video, but not so good in piano video and people video. That's because small objects will move a lot in these two videos, which affect our process.

- Used HOG detection to find people, which we didn't discuss yet in class. It use people SVM to detect if there is a people in a certain block. But it don't work very good.

Conclusions

Algorithm implemented in part 1 both works well, but most of them will have long running time when meet a large object.

Backgound threshold works well on images with static background, but not so well on video. Have to combine other methods together to make it works well.

Credits and Bibliography

Materials discussed in class

http://opencv.org/

https://www.pyimagesearch.com/2015/11/09/pedestrian-detection-opencv/