Problem Definition

In this problem, we build a gesture detector. Although the homework version is simple, such a detector could be used in practice for applications such as automated sign-language reading, so the task is well motivated.

Method and Implementation

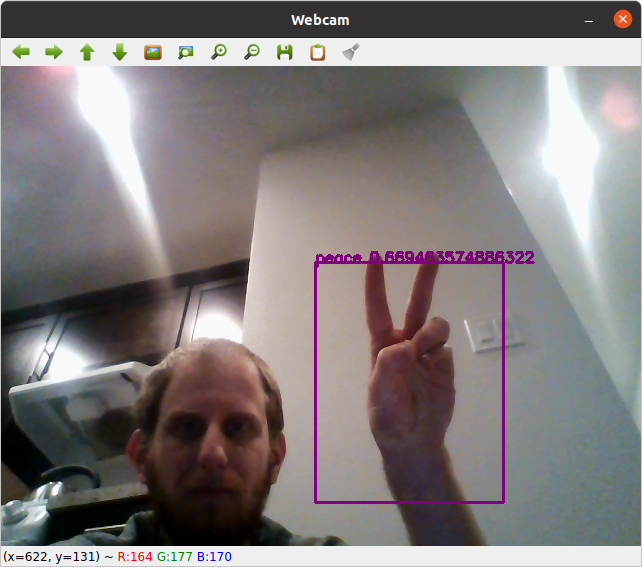

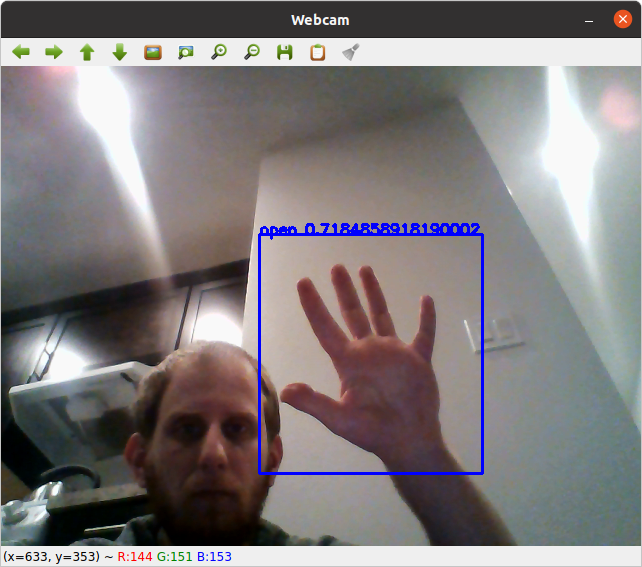

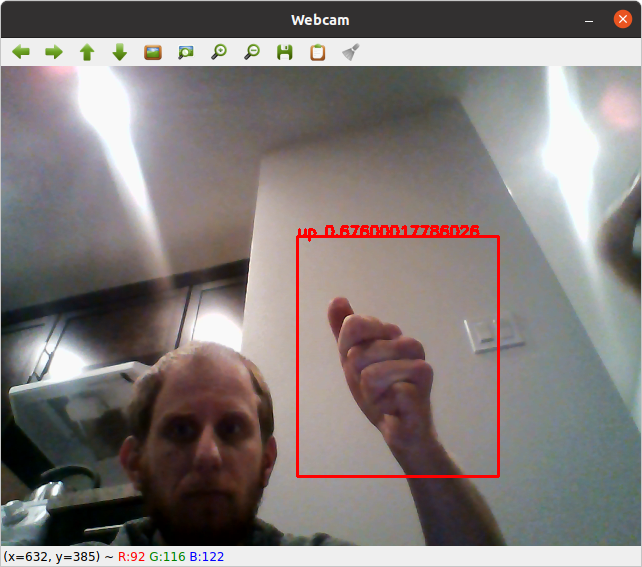

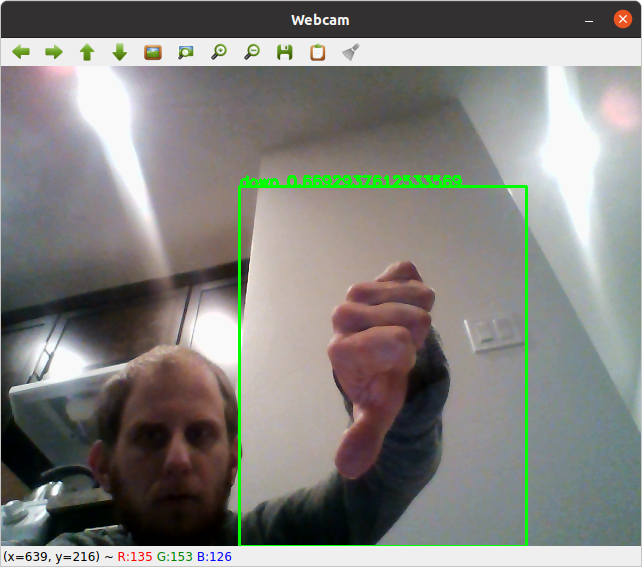

We use the lab 3 skeleton code as a starting point to open a stream of webcam images. For each incoming frame, we perform several steps. First, we remove the background by taking the difference between the current frame and the first frame; pixels whose difference falls below a threshold are treated as background and set to 0. Next, we detect skin color, setting skin-colored pixels to 1 and everything else to 0. Last, we compare the binarized frame against several templates of hand gestures, which are also binarized, using template matching with a normalized correlation coefficient, as we learned in class. The templates are reproduced at several scales (half the image height, 3/4 of the image height, and 85% of the image height) to make a pyramid. The highest-scoring match is output, and we draw a bounding box and text to tell the user what was detected. The gestures we detect are peace, thumbs up, thumbs down, and open hand.

We use a number of built-in functions from OpenCV. We use cv2.VideoCapture to read from the webcam and cv2.imread to load images from disk. We also use cv2.absdiff to remove the background, and cv2.matchTemplate, which implements template matching using the correlation coefficient as described in class.

Experiments

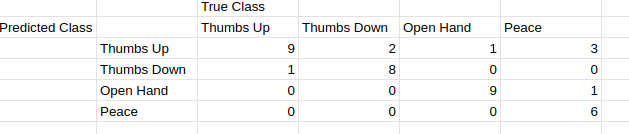

After implementing the detector, we tested its accuracy. We first tested it qualitatively and then computed a confusion matrix. We had to tune the skin color detector manually: it is finicky and needed a different masking value for red colors.
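For reference, a confusion matrix can be tallied from a list of (shown gesture, detected gesture) pairs as in the sketch below. The helper names and the example trials are hypothetical; the trials are made up purely to show the data layout and are not the measurements from this homework.

```python
from collections import Counter

GESTURES = ["peace", "open hand", "thumbs up", "thumbs down"]


def confusion_matrix(trials, labels=GESTURES):
    """Rows: gesture actually shown. Columns: gesture the detector reported."""
    counts = Counter(trials)
    return [[counts[(true, pred)] for pred in labels] for true in labels]


def accuracy(matrix):
    """Fraction of trials on the diagonal (correct detections)."""
    total = sum(sum(row) for row in matrix)
    correct = sum(matrix[i][i] for i in range(len(matrix)))
    return correct / total if total else 0.0


# Made-up trials for illustration only (not results from this report).
example_trials = [
    ("peace", "peace"),
    ("peace", "open hand"),
    ("thumbs up", "thumbs up"),
]
example_matrix = confusion_matrix(example_trials)
```

Each row sums to the number of times that gesture was attempted, so off-diagonal entries show which gestures get confused with which.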

Results

We show snapshots and a confusion matrix below. The confusion matrix was constructed manually from my own attempts at each gesture. It is somewhat biased, as I deliberately used a neutral background; the algorithm does much worse otherwise.

| Results | |
| --- | --- |
| Peace | |
| Open Hand | |
| Thumbs Up | |
| Thumbs Down | |

The detector is clearly not perfect and makes some mistakes, but it works, at least under the right lighting conditions. A video demo is here.

Discussion

The method works and is very simple. This is in stark contrast to deep learning methods, which require large amounts of data. Nevertheless, it is not very robust. It could be made more robust by adding additional templates to the template matching and by using more sophisticated skin color detection. It would be especially valuable to make the detector rotation invariant, since it is currently quite sensitive to rotations.

Conclusions

In conclusion, we made a gesture detector with no learning steps. This simplicity allowed us to use a training set of only 4 examples, 1 for each gesture. This is remarkable, and makes the method quite promising.

Credits and Bibliography

OpenCV documentation.

Lab 3 Skeleton

Vezhnevets, Vladimir, Vassili Sazonov, and Alla Andreeva. "A survey on pixel-based skin color detection techniques." Proc. Graphicon. Vol. 3. 2003.

My teammates! Our code diverged a little bit at the very end, but we worked together on the trunk.