Problem Definition

In this problem, we build a multiple-object tracker. Although our version is relatively simple, it works reasonably well. More sophisticated versions of this technology will be critical for autonomous driving.

Method and Implementation

Before we can track objects, we need localizations. For the bat dataset, we use the provided localizations. For the cell dataset, we do our own segmentation as follows. First, we manually define a circle and mask out everything outside the petri dish. Next, we blur the image with a 5x5 kernel. Then we dilate with a rectangular kernel, find contours using the OpenCV contour detector, and use OpenCV functionality to draw bounding boxes around the detected contours. Finally, we identify the centers to use as localizations.

With the localizations in hand, we can do the tracking. The algorithm is as follows:

- Construct a Euclidean distance matrix between the localizations in a frame and the predicted state of each track.

- Use the bipartite matching implementation in SciPy (scipy.optimize.linear_sum_assignment) to do the matching.

- Additionally, remove a single localization from the current frame and recompute the optimal match. Repeat this for each localization. If removing a localization decreases the average cost per match by more than a threshold, we consider that localization a new object.

- Using these matches, update the state of each track using alpha-beta filtering.
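The matching and new-object steps in the list above can be sketched as follows. This is a simplified illustration under stated assumptions rather than our exact implementation: the helper names `match` and `find_new_objects` are hypothetical, and positions are assumed to be 2D points stored as rows of an array.

```python
import numpy as np
from scipy.optimize import linear_sum_assignment
from scipy.spatial.distance import cdist

def match(predicted, detections):
    """Optimal bipartite match between predicted track positions and detections."""
    cost = cdist(predicted, detections)          # Euclidean distance matrix
    rows, cols = linear_sum_assignment(cost)     # minimum-cost assignment
    return rows, cols, cost[rows, cols].mean()   # mean cost per matched pair

def find_new_objects(predicted, detections, threshold):
    """Indices of detections whose removal lowers the mean match cost by more than threshold."""
    predicted = np.asarray(predicted, dtype=float)
    detections = np.asarray(detections, dtype=float)
    _, _, base_cost = match(predicted, detections)
    new_indices = []
    for j in range(len(detections)):
        reduced = np.delete(detections, j, axis=0)  # leave detection j out
        if len(reduced) == 0:
            continue
        _, _, reduced_cost = match(predicted, reduced)
        if base_cost - reduced_cost > threshold:
            new_indices.append(j)
    return new_indices
```

For example, with tracks predicted at (0, 0) and (10, 10) and detections at (0.5, 0) and (50, 50), leaving out the second detection drops the mean cost sharply, so it is flagged as a new object.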

Experiments

There are several parameters to tune in our algorithm. The first is the threshold for becoming a 'new object'. The others are alpha and beta for the alpha-beta filter. We hand-tune these parameters and show their importance below.

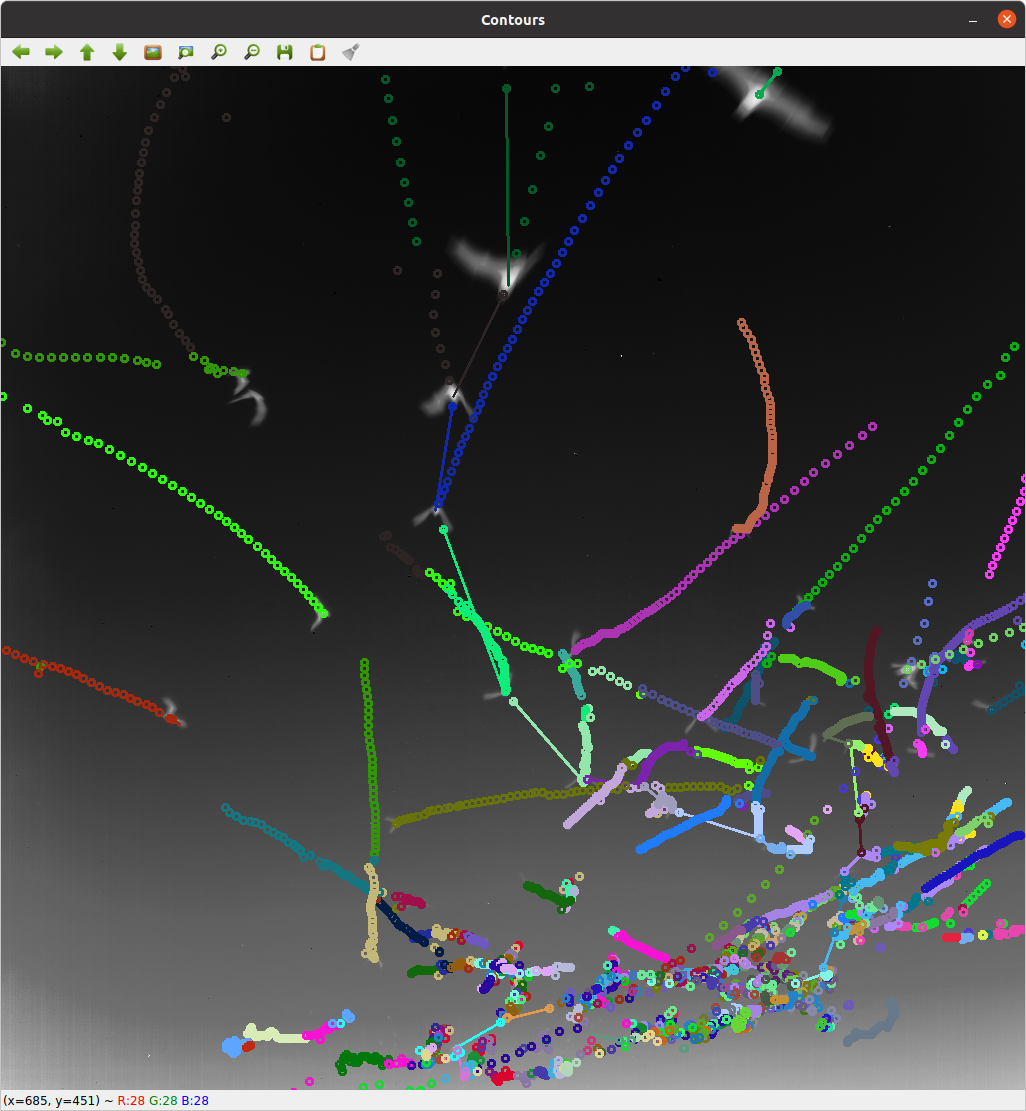

First, we show the importance of the threshold. If it is set too high and the segmentation produces new objects, those objects are forced onto existing tracks, which gives the erratic matches shown by the lines in the image below:

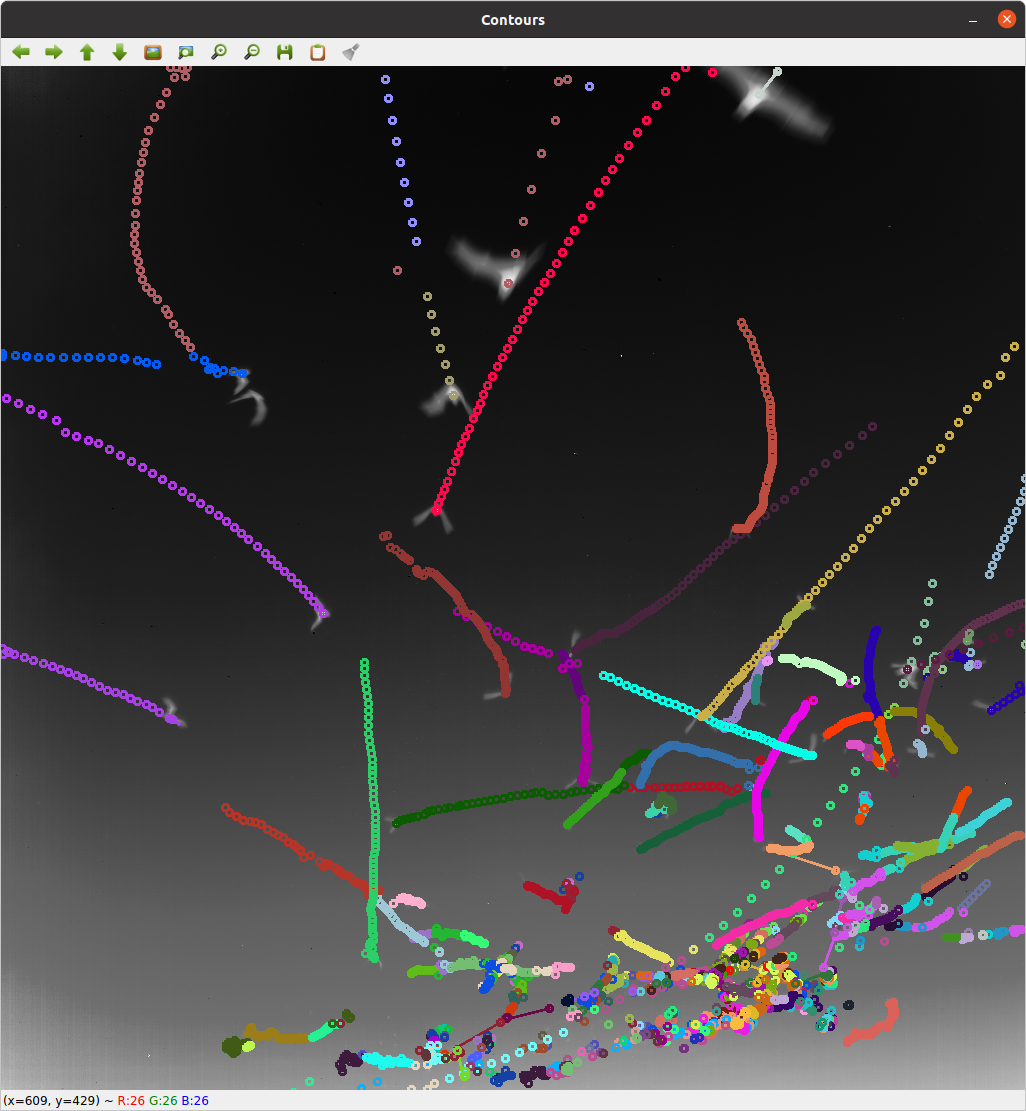

With the threshold carefully tuned to the value of 1, we get the following:

Similarly, the choice of alpha and beta is important, especially for the cells. If we try to model velocity by using a non-zero beta, we get mismatches because the movement of the cells is fairly random:

However, if we only model position, the tracks tend to get better on average:

Results

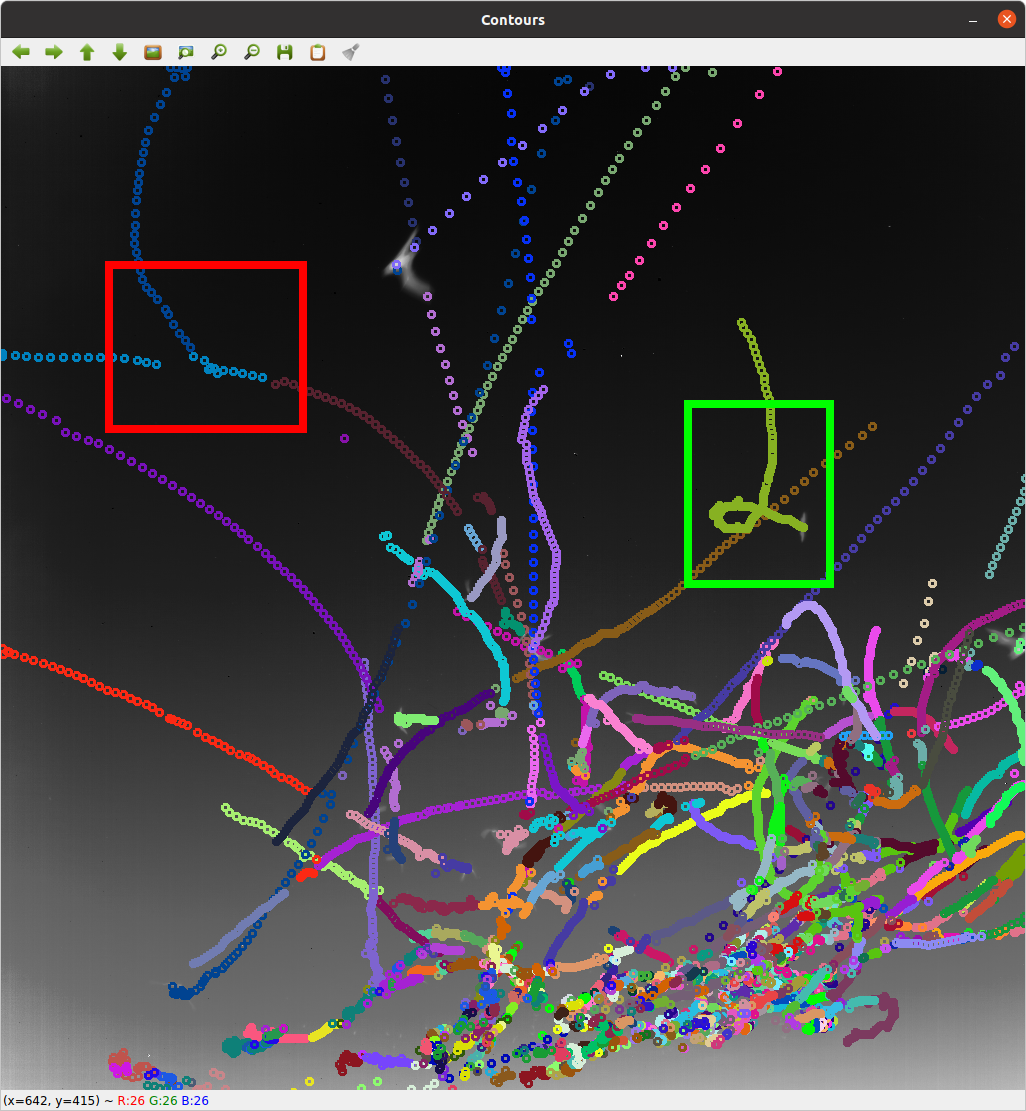

Here, we link to videos and show images of the tracks for both cells and bats. We highlight challenges for our tracking algorithm in red and successes in green.

In the bat video, we show that an occlusion causes undesired track switching, but that otherwise the tracker tends to work fairly well.

Video results are linked here.

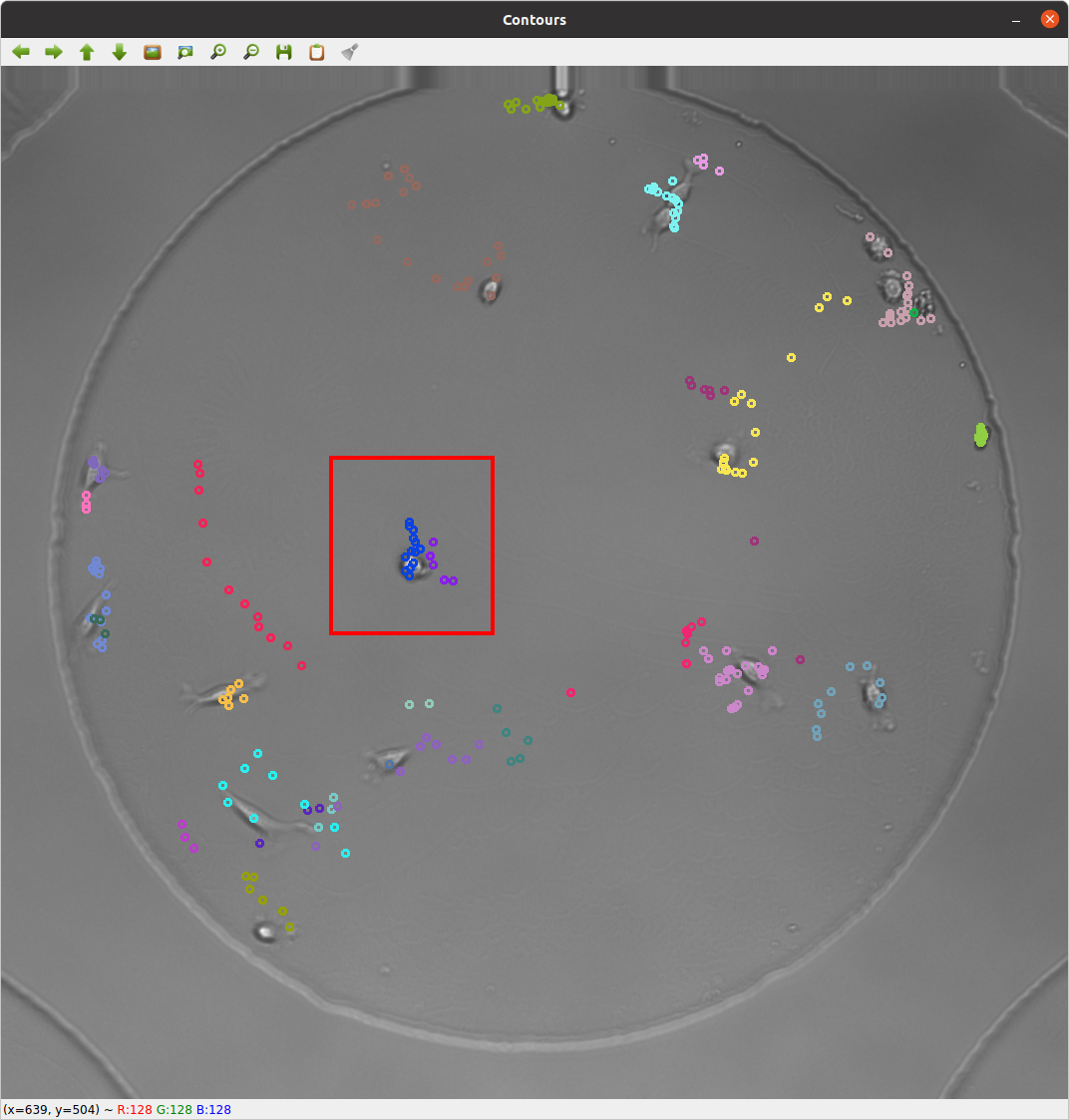

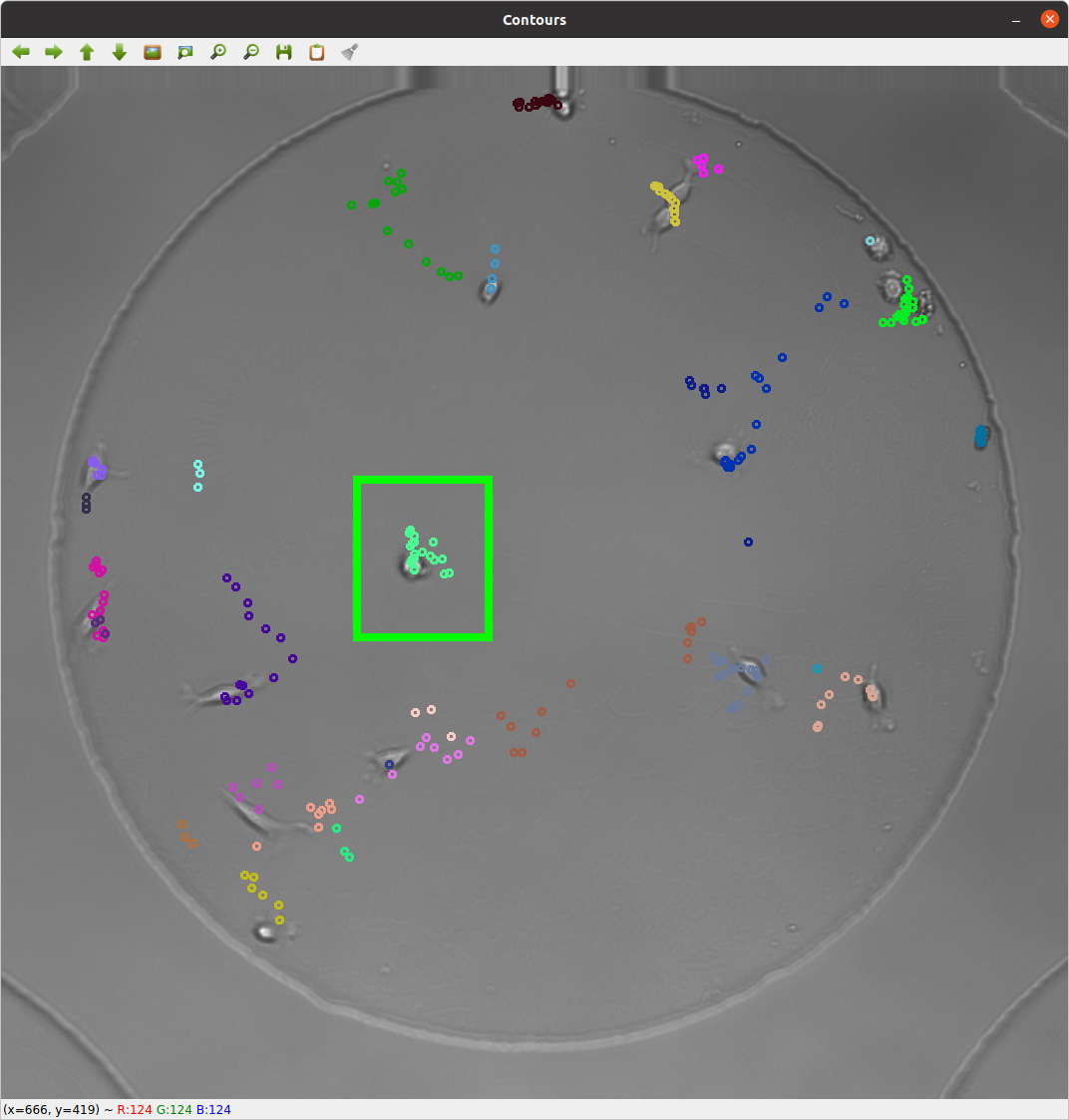

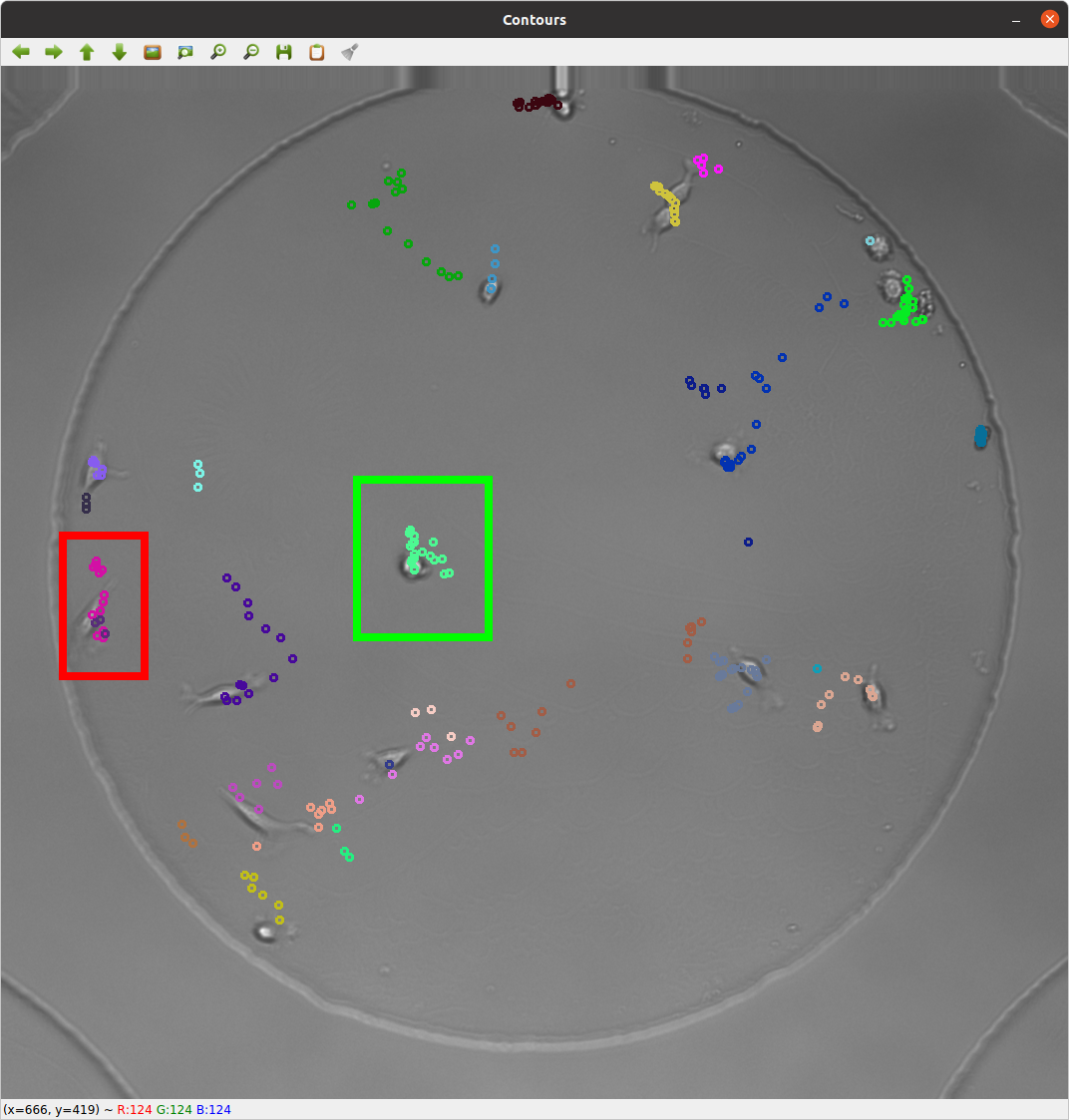

In the cell video, we again mark undesired switching in red. We suspect that this happens because the localization is imperfect. The tracker handles one challenging situation correctly: the loop in the green box.

Video results are linked here.

Discussion

Discuss your method and results:

The method works and is very simple. Nevertheless, there are some challenges, and they are easy to see in the result videos.

Here are answers to the discussion questions:

Show your tracking results on some portion of the sequence. In addition to showing your tracking results on an easy portion of the data, identify a challenging situation where your tracker succeeds, and a challenging situation where it fails.

We show videos for both bats and cells above, and annotate images. Red is incorrect, green is correct. The bat doing the loop is relatively challenging and the tracker succeeds.

How do you decide to begin new tracks and terminate old tracks as the objects enter and leave the field of view?

If an object leaves the field of view, we terminate its track. We tried keeping old tracks around, but this left us matching to the old tracks of bats that were long gone. When a new object appears, we start a new track for it.
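A minimal sketch of this bookkeeping is shown below. It assumes, purely for illustration, that tracks are stored in a dictionary keyed by an integer id and that a track is terminated as soon as it receives no match in a frame. The function `step_tracks` and its arguments are hypothetical names, not part of our code.

```python
def step_tracks(tracks, matches, detections, new_indices, next_id):
    """Advance the track set by one frame (illustrative sketch).

    tracks:      dict mapping track id -> last known position
    matches:     dict mapping track id -> index of its matched detection
    detections:  list of (x, y) positions in the current frame
    new_indices: detection indices flagged as new objects
    next_id:     first unused track id
    """
    updated = {}
    for track_id in tracks:
        if track_id in matches:
            # Matched tracks survive and move to their detection.
            updated[track_id] = detections[matches[track_id]]
        # Unmatched tracks are dropped, i.e. terminated.
    for j in new_indices:
        # Each new object starts a fresh track.
        updated[next_id] = detections[j]
        next_id += 1
    return updated, next_id
```

Dropping unmatched tracks immediately avoids matching later detections to stale tracks, at the cost of losing a track whenever a detection is briefly missed.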

What happens with your algorithm when objects touch and occlude each other, and how could you handle this so as not to break tracks?

This is indeed a challenge for our current implementation. If we had more time, we could track at multiple time scales and merge the results across time scales. At the coarser time scale, occlusion would be less likely. When two time scales disagree, we could merge the tracks by preferring the one from the lower frame rate.

What happens when there are spurious detections that do not connect with other measurements in subsequent frames?

In this case, our algorithm can fail with a high-cost optimal bipartite matching. We try to account for this case with our 'cost threshold', described in the method section. If leaving a localization unmatched decreases the cost by more than the threshold, we start a new track for it. If this localization then fails to match in the next frame, the track goes away. Nevertheless, our algorithm could be made more robust in this regard, potentially with variants of multiple hypothesis tracking.

What are advantages and drawbacks of different kinematic models: do you need to model the velocity of the objects, or is it sufficient to just consider the distances between the objects in subsequent frames?

It depends! We found that with bats it is useful to model velocity, because they move fast. The cell motion, however, is more random, and modelling velocity mostly harms the tracking.
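To make the trade-off concrete, here is a small sketch of a standard alpha-beta filter. The class name `AlphaBetaTrack` and the unit time step are assumptions for illustration, and this is not necessarily how our code is organized. Setting beta to zero keeps the velocity at zero, so the filter reduces to a position-only model.

```python
import numpy as np

class AlphaBetaTrack:
    """Standard alpha-beta filter over a 2D position (illustrative sketch)."""

    def __init__(self, position, alpha, beta, dt=1.0):
        self.x = np.asarray(position, dtype=float)  # position estimate
        self.v = np.zeros_like(self.x)              # velocity estimate
        self.alpha = alpha
        self.beta = beta
        self.dt = dt

    def predict(self):
        """Predicted position one time step ahead."""
        return self.x + self.dt * self.v

    def update(self, measurement):
        """Correct the state with a matched localization."""
        predicted = self.predict()
        residual = np.asarray(measurement, dtype=float) - predicted
        self.x = predicted + self.alpha * residual
        self.v = self.v + (self.beta / self.dt) * residual
        return self.x
```

With a non-zero beta, a bat moving at roughly constant velocity is predicted ahead of its last position, which helps the matching. For randomly moving cells, the same extrapolation can push the prediction away from where the cell actually goes.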

Conclusions

We wrote a simple tracker that works reasonably, though it certainly could still be improved.

Credits and Bibliography

OpenCV documentation.

SciPy documentation.

My teammates!