AdaShare: Learning What To Share For Efficient Deep Multi-Task Learning

Abstract

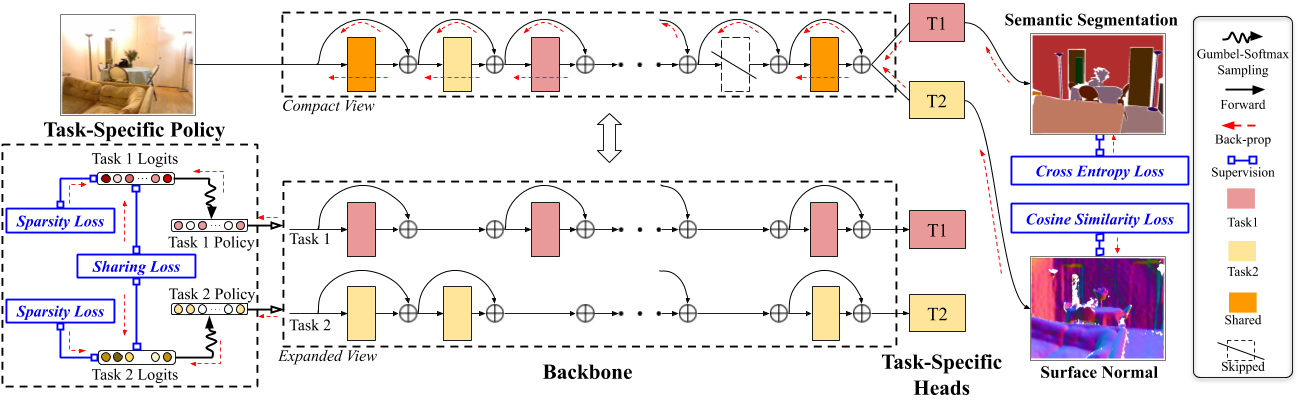

Multi-task learning is an open and challenging problem in computer vision. The typical way of conducting multi-task learning with deep neural networks is either through handcrafted schemes that share all initial layers and branch out at an ad hoc point, or through separate task-specific networks with an additional feature sharing/fusion mechanism. Unlike existing methods, we propose an adaptive sharing approach, called AdaShare, that decides what to share across which tasks to achieve the best recognition accuracy, while taking resource efficiency into account. Specifically, our main idea is to learn the sharing pattern through a task-specific policy that selectively chooses which layers to execute for a given task in the multi-task network. We efficiently optimize the task-specific policy jointly with the network weights, using standard back-propagation. Experiments on three challenging and diverse benchmark datasets with a variable number of tasks demonstrate the efficacy of our proposed approach over state-of-the-art methods.

Overview

AdaShare is a novel and differentiable approach for efficient multi-task learning that learns the feature sharing pattern to achieve the best recognition accuracy, while restricting the memory footprint as much as possible. Our main idea is to learn the sharing pattern through a task-specific policy that selectively chooses which layers to execute for a given task in the multi-task network. In other words, we aim to obtain a single network for multi-task learning that supports separate execution paths for different tasks.
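The idea of separate execution paths through a single shared network can be illustrated with a minimal sketch. It assumes a select-or-skip policy in which a skipped layer passes its input through unchanged. The toy scalar layers, the task names, and the helpers `run_task` and `shared_layer_indices` are hypothetical and are not taken from the AdaShare code. The policies are also fixed by hand here, whereas AdaShare learns them jointly with the network weights.

```python
from typing import Callable, Dict, List, Sequence

Layer = Callable[[float], float]

# Toy shared "network": three layers acting on a scalar (hypothetical stand-ins
# for real network blocks).
LAYERS: List[Layer] = [
    lambda x: x + 1.0,
    lambda x: x * 2.0,
    lambda x: x - 3.0,
]

# Hand-written policies: one 0/1 decision per layer for each task.
# Assumption: these are illustrative values, not learned ones.
POLICIES: Dict[str, List[int]] = {
    "segmentation": [1, 1, 0],
    "depth": [1, 0, 1],
}


def run_task(x: float, layers: Sequence[Layer], policy: Sequence[int]) -> float:
    """Run only the layers the task's policy selects; skipped layers act as identity."""
    if len(layers) != len(policy):
        raise ValueError("policy must contain one decision per layer")
    for layer, selected in zip(layers, policy):
        if selected:
            x = layer(x)
    return x


def shared_layer_indices(policies: Dict[str, Sequence[int]]) -> List[int]:
    """Return the indices of layers that every task executes."""
    per_layer = zip(*policies.values())
    return [i for i, decisions in enumerate(per_layer) if all(decisions)]


if __name__ == "__main__":
    for task, policy in POLICIES.items():
        print(task, run_task(1.0, LAYERS, policy))
    print("layers shared by all tasks:", shared_layer_indices(POLICIES))
```

Both tasks reuse the same layer objects, so a layer selected by several tasks is stored only once. The sharing pattern is simply the set of layers that more than one policy selects.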

Code

The code to prepare the data and train the model is available at: https://github.com/sunxm2357/AdaShare

Reference

If you find this useful in your work, please consider citing:

@article{sun2020adashare,
  title={Adashare: Learning what to share for efficient deep multi-task learning},
  author={Sun, Ximeng and Panda, Rameswar and Feris, Rogerio and Saenko, Kate},
  journal={Advances in Neural Information Processing Systems},
  volume={33},
  year={2020}
}