Decision Boundary of Linear Data:

The learning rate: 1.0, number of epochs: 2

Accuracy: 462/500 = 92.4%

Explanation: Without a hidden layer, a neural network is a linear model. A two-layer neural network (input and output layers only) is therefore able to classify linear data.
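To make the "linear model" point concrete, here is a minimal sketch that assumes two input features and a single sigmoid output unit. The weights `w` and bias `b` are hypothetical values chosen for illustration, not the trained weights from this experiment. The boundary is the set of points where `w1*x1 + w2*x2 + b = 0`, which is a straight line.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def predict_proba(w, b, x):
    """Output of a network with no hidden layer: sigmoid(w . x + b)."""
    return sigmoid(w[0] * x[0] + w[1] * x[1] + b)

def boundary_x2(w, b, x1):
    """Solve w1*x1 + w2*x2 + b = 0 for x2 (requires w2 != 0)."""
    return -(w[0] * x1 + b) / w[1]

# Hypothetical weights, chosen only for illustration.
w, b = (2.0, -1.0), 0.5

# Every point on the line gets probability 0.5, i.e. it sits on the boundary.
for x1 in (-1.0, 0.0, 1.0):
    x2 = boundary_x2(w, b, x1)
    print(x1, x2, predict_proba(w, b, (x1, x2)))
```

Points on one side of the line get a probability above 0.5 and points on the other side get a probability below 0.5, so the model can only split the plane with a single straight cut.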

The learning rate: 1.0, number of epochs: 2

Accuracy: 849/1000 = 84.9%

Explanation: Since a two-layer neural network is a linear model, its decision boundary can only be a straight line on the plane. Because of this limitation, data that is not linearly separable cannot be classified fully correctly.
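The limitation can be illustrated on a toy case. The sketch below assumes four XOR-style points, which are not the dataset used in this report, and searches a coarse grid of linear classifiers. No straight line labels all four points correctly, so the best score stays below 4.

```python
from itertools import product

# XOR-style toy data (an assumption for illustration; not the report's dataset).
points = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]

def linear_predict(w1, w2, b, x):
    """Classify x with a straight-line boundary w1*x1 + w2*x2 + b = 0."""
    return 1 if w1 * x[0] + w2 * x[1] + b > 0 else 0

def best_linear_accuracy(grid):
    """Try every (w1, w2, b) on the grid; return the most points classified correctly."""
    best = 0
    for w1, w2, b in product(grid, repeat=3):
        correct = sum(linear_predict(w1, w2, b, x) == y for x, y in points)
        best = max(best, correct)
    return best

grid = [i / 2 for i in range(-6, 7)]  # -3.0 ... 3.0 in steps of 0.5
print(best_linear_accuracy(grid), "of", len(points))
```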

The learning rate: 1.0, number of epochs: 10, number of nodes in hidden layer: 20

Accuracy: 465/500 = 93.0%

Explanation: A neural network with a hidden layer is a non-linear model, and a non-linear model can also be used for linear data. As a result of adding the hidden layer, the boundary is mostly straight but shows a slight curve.

The learning rate: 1.0, number of epochs: 10, number of nodes in hidden layer: 20

Accuracy: 948/1000 = 94.8%

Explanation: As a non-linear model, a neural network with a hidden layer can be used to classify non-linear data. If the model is trained for enough epochs, it can describe a non-linear boundary well.
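As a small illustration of why a hidden layer helps, the sketch below runs a forward pass through a network with two sigmoid hidden units on XOR-style points. The weights are hand-picked, not trained, and both the weights and the toy points are assumptions made for this example rather than values from the experiment.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

# Hand-picked (not trained) weights, assumed only for illustration.
HIDDEN = [((20.0, 20.0), -10.0),   # unit 1 turns on when x1 + x2 > 0.5
          ((20.0, 20.0), -30.0)]   # unit 2 turns on when x1 + x2 > 1.5
OUTPUT = ((20.0, -20.0), -10.0)    # on when unit 1 is on and unit 2 is off

def forward(x):
    """Forward pass: 2 inputs -> 2 sigmoid hidden units -> 1 sigmoid output."""
    hidden = [sigmoid(w[0] * x[0] + w[1] * x[1] + b) for w, b in HIDDEN]
    w_out, b_out = OUTPUT
    return sigmoid(w_out[0] * hidden[0] + w_out[1] * hidden[1] + b_out)

for x in [(0, 0), (0, 1), (1, 0), (1, 1)]:
    print(x, round(forward(x)))
```

Each hidden unit still draws a straight line, but the output unit combines the two lines, which gives a region that no single line could describe.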

Number of epochs: 10, Number of nodes in hidden layer: 20

Learning Rate: 0.01, Accuracy: 873/1000 = 87.3%

Learning Rate: 1.0, Accuracy: 948/1000 = 94.8%

Learning Rate: 2.0, Accuracy: 897/1000 = 89.7%

Explanation: If the learning rate is too small, the cost converges to its minimum slowly. The convergence can be so slow that the model has not yet reached its optimal weights after several epochs. If the learning rate is too high, the weights can bounce back and forth around the optimal setting, which also slows convergence. A suitable learning rate, neither too large nor too small, therefore makes convergence fast and the training process efficient and accurate.
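The same behaviour shows up on a one-dimensional toy problem. The sketch below assumes the cost `f(w) = w**2`, whose gradient is `2*w`, and uses learning rates picked for this toy cost rather than the rates tested above. A small rate shrinks `w` slowly, a moderate rate converges quickly, and a large rate makes `w` flip sign on every step.

```python
def gradient_descent(lr, steps=10, w0=1.0):
    """Minimize the toy cost f(w) = w**2 (gradient 2*w); return all visited weights."""
    w = w0
    path = [w]
    for _ in range(steps):
        w -= lr * 2 * w  # plain gradient step
        path.append(w)
    return path

# Learning rates chosen for this toy cost only.
for lr in (0.01, 0.4, 0.95):
    path = gradient_descent(lr)
    print(f"lr={lr}: first steps {[round(w, 3) for w in path[:4]]}, "
          f"final |w| = {abs(path[-1]):.6f}")
```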

Number of epochs: 10, Learning rate: 1.0

Number of Nodes: 2, Accuracy: 799/1000 = 79.9%

Number of Nodes: 20, Accuracy: 948/1000 = 94.8%

Number of Nodes: 100, Accuracy: 964/1000 = 96.4%

Explanation: When the number of nodes in the hidden layer is small, the non-linear classification ability of the network is limited, since there are few weights available for creating a complex curve. When the number of nodes in the hidden layer is large, training takes longer because more weights need to be updated. Also, the weight matrices between each pair of consecutive layers are initialized randomly. If the random initial weights are not close to the "ground truth" weight set (assuming there is a nearly perfect weight set to classify the dataset), overfitting can happen (for example, too many unnecessary curves on the boundary).
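To give a rough sense of how the number of weights grows with the hidden layer, the sketch below counts trainable parameters for a network with one hidden layer. It assumes 2 input features, 1 output unit, and a bias term per unit. These sizes are assumptions, since the report does not state the exact architecture.

```python
def count_parameters(n_inputs, n_hidden, n_outputs, with_bias=True):
    """Count the weights (and optional biases) of a network with one hidden layer."""
    bias = 1 if with_bias else 0
    input_to_hidden = (n_inputs + bias) * n_hidden
    hidden_to_output = (n_hidden + bias) * n_outputs
    return input_to_hidden + hidden_to_output

# Assumed sizes: 2 inputs and 1 output; hidden sizes taken from the runs above.
for n_hidden in (2, 20, 100):
    print(n_hidden, "hidden nodes ->", count_parameters(2, n_hidden, 1), "parameters")
```

Under these assumptions the count grows linearly with the hidden layer size, so each training step updates more values as nodes are added.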

Overfitting means the classification model loses the ability to classify general data correctly because it tries too hard to fit "specific" cases in the training dataset.

Possible reasons for overfitting

Ways of preventing overfitting

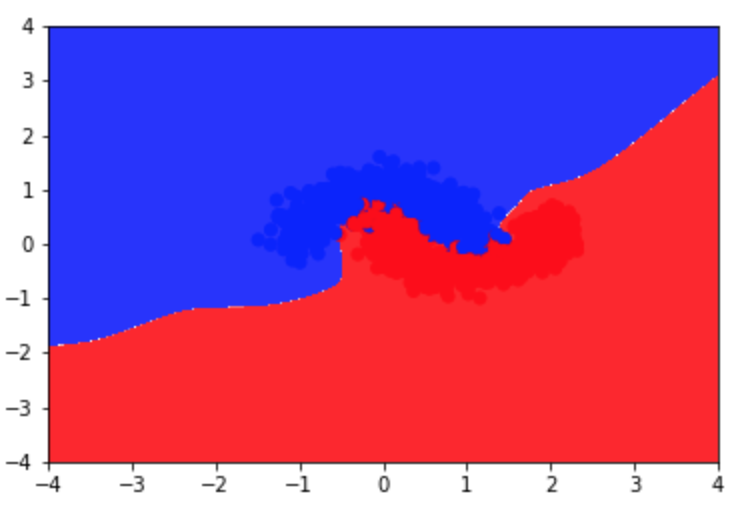

boundary without regularization

The learning rate: 1.0, number of epochs: 10, number of nodes in hidden layer: 20, lambda for regularization: 0

Accuracy: 958/1000 = 95.8%

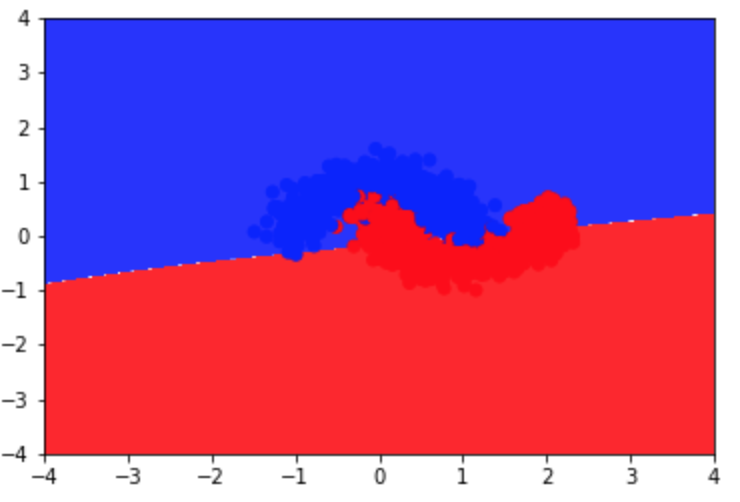

boundary with regularization

The learning rate: 1.0, number of epochs: 10, number of nodes in hidden layer: 20, lambda for regularization: 0.01

Accuracy: 860/1000 = 86.0%

Explanation: With L2 regularization, fewer weights have a significant influence on the model, so the boundary has fewer curves and looks more "linear" than the boundary of the model without L2 regularization. L2 regularization can therefore prevent potential overfitting, at the possible cost of some accuracy on specific data points.
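The shrinking effect can be sketched as a single update step. The code below assumes the common penalty form `0.5 * lam * sum(w**2)`, which adds `lam * w` to each weight's gradient. The report does not state the exact cost function used, so this form, the function names, and the example weights are all assumptions.

```python
def l2_penalty(weights, lam):
    """Assumed penalty term: 0.5 * lam * sum of squared weights."""
    return 0.5 * lam * sum(w * w for w in weights)

def regularized_step(weights, grads, lr, lam):
    """One gradient step where the penalty adds lam * w to each gradient."""
    return [w - lr * (g + lam * w) for w, g in zip(weights, grads)]

# Hypothetical weights; data gradients set to zero to isolate the penalty's effect.
weights = [1.0, -2.0, 0.5]
zero_grads = [0.0, 0.0, 0.0]

for _ in range(10):
    weights = regularized_step(weights, zero_grads, lr=1.0, lam=0.01)

print([round(w, 4) for w in weights])  # every weight has moved toward zero
```

With `lr=1.0` and `lam=0.01`, each step multiplies a weight by 0.99 when the data gradient is zero. Large weights, which produce sharp bends in the boundary, are pulled toward zero unless the data keeps pushing them back.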