YIDA XIN

Part 1. Written Assignment

Link to PDF: hw4_written.pdf

Part 2. Programming Assignment [Acknowledgment of collaboration with Andrea Burns, Dina Bashkirova, and Vitali Petsiuk]

For the pianist-hands-tracking part, we first reduced the search region to a 100x100 window. To do so, we identified the region that contains the most movement across all the frames, under the assumption that the pianist’s hands move more than anything else in the scene. Once this max-movement region was identified, and because it is rather difficult to find the hands via skin-color detection, we instead (1) identified the piano keyboard; (2) identified a vertical line that passes through the keyboard; (3) used the fact that hands cast shadows on the keyboard, and that hands appear as white pixels while shadows appear as black pixels in the final binary mask, so that the hands can be found by collecting all the white pixels connected to this boundary line; and (4) used the prior knowledge that there are only two hands, so only two bounding boxes should be drawn. Below are some examples taken from the actual outputs:

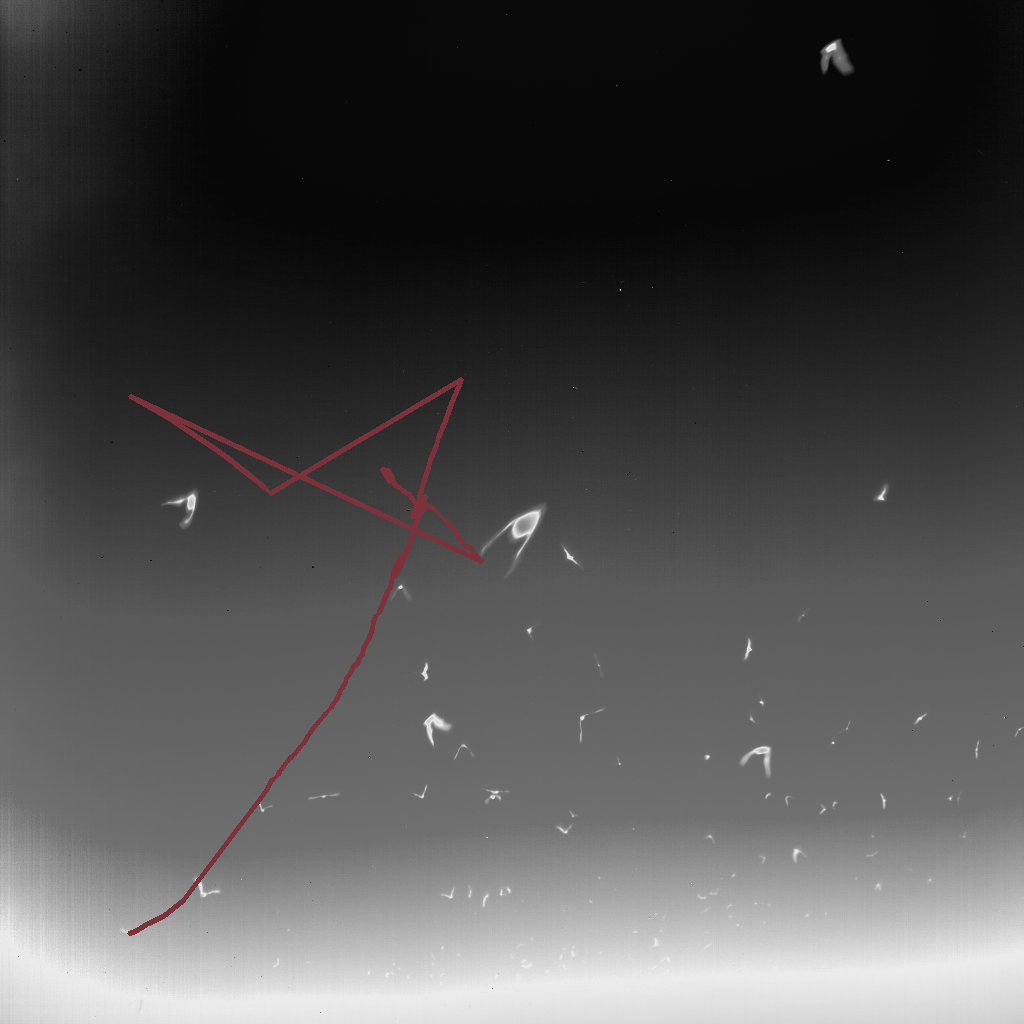

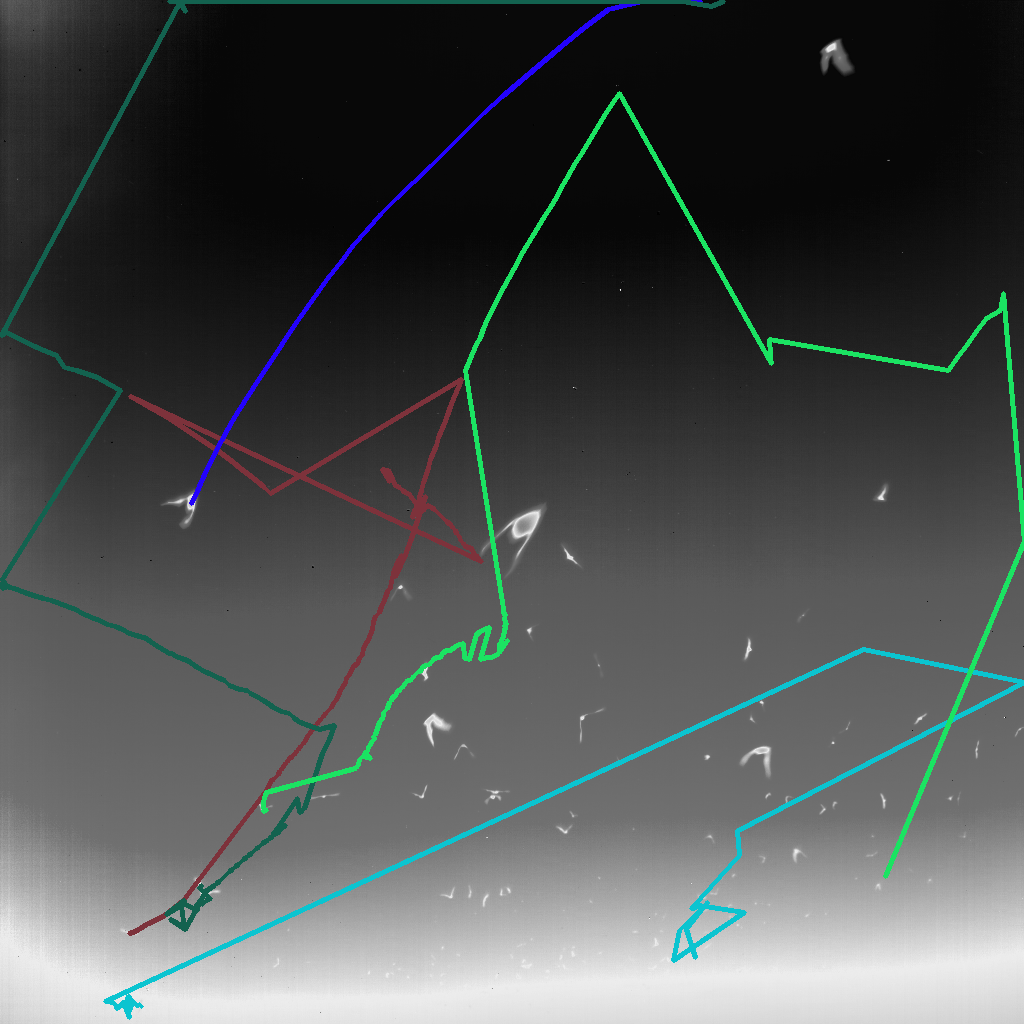

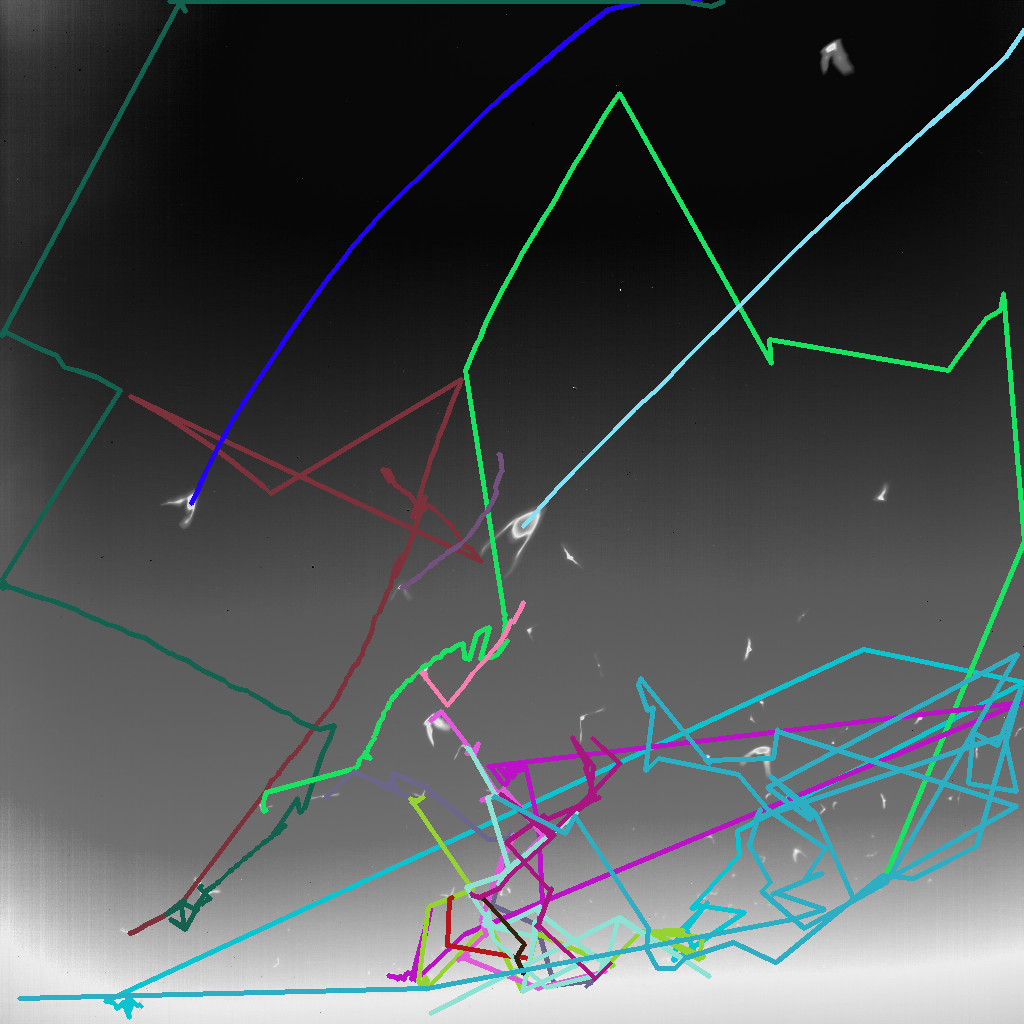

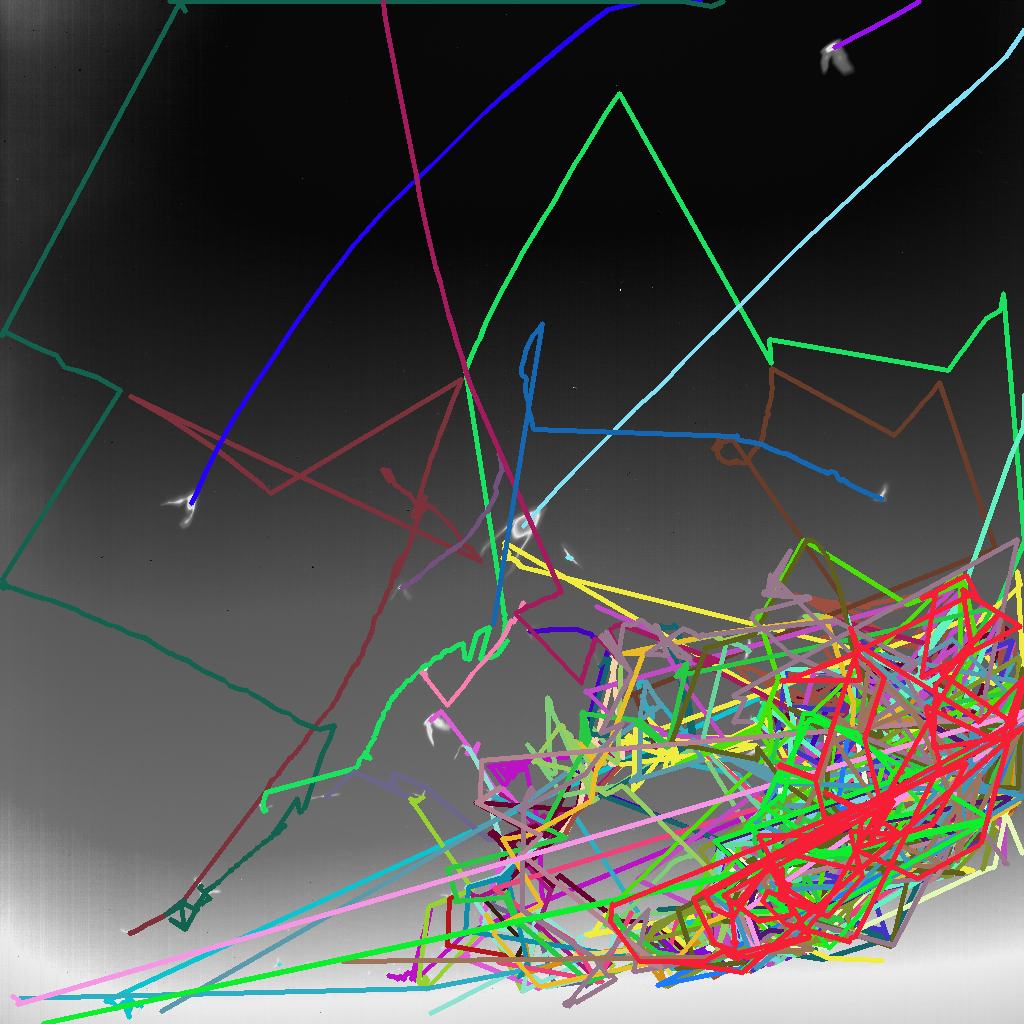
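
The search-region step described above can be sketched as follows. This is a minimal illustration, not our submitted code: it assumes that "movement" is measured by accumulated absolute frame differences, and the function names `accumulate_motion` and `max_motion_window` are hypothetical. Frames are plain nested lists of grayscale values so that the sketch has no dependencies; an actual pipeline would more likely use array operations.

```python
def accumulate_motion(frames):
    """Sum the absolute frame-to-frame differences at every pixel."""
    height, width = len(frames[0]), len(frames[0][0])
    motion = [[0] * width for _ in range(height)]
    for prev, cur in zip(frames, frames[1:]):
        for y in range(height):
            for x in range(width):
                motion[y][x] += abs(cur[y][x] - prev[y][x])
    return motion


def max_motion_window(frames, win=100):
    """Return ((top, left), total) for the win x win window with most motion.

    Assumption: motion is the accumulated absolute difference between
    consecutive grayscale frames.
    """
    motion = accumulate_motion(frames)
    height, width = len(motion), len(motion[0])
    if win > height or win > width:
        raise ValueError("window is larger than the frame")

    # Summed-area table (padded with a zero row and column) so that each
    # window sum costs four lookups instead of win * win additions.
    sat = [[0] * (width + 1) for _ in range(height + 1)]
    for y in range(height):
        row_sum = 0
        for x in range(width):
            row_sum += motion[y][x]
            sat[y + 1][x + 1] = sat[y][x + 1] + row_sum

    best_total, best_pos = -1, (0, 0)
    for top in range(height - win + 1):
        for left in range(width - win + 1):
            total = (sat[top + win][left + win] - sat[top][left + win]
                     - sat[top + win][left] + sat[top][left])
            if total > best_total:
                best_total, best_pos = total, (top, left)
    return best_pos, best_total
```

Calling `max_motion_window(frames, win=100)` on the decoded video frames would give the top-left corner of the candidate hand region; the keyboard and boundary-line steps then operate inside that window.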

For the bat-tracking part, we used the bat segmentations provided in .txt files in “Localizations.” There is a separate .txt file per frame; each .txt file stores the coordinates of the centroid of each bat. The underlying assumption is that a bat cannot move very far from its current position between the current frame and the next frame. Consequently, we simply measured the L2-distance between each bat in the current frame and every coordinate in the next frame, and took the closest coordinate in the next frame to be the same bat’s centroid location in that frame. This allows us to create an initial track for (almost) each bat. Then, for each subsequent frame, for each bat that corresponds to an existing track, we first apply a Kalman filter to that bat’s track and obtain a predicted location for that bat in the next frame; we then find the closest actual coordinate in the next frame by again measuring the L2-distance between that bat’s predicted location and each actual coordinate in the next frame. The closest actual coordinate is appended to that bat’s existing track. In our implementation, every time a coordinate in the next frame is assigned, we delete that coordinate from the next frame to ensure that the same coordinate cannot be assigned to more than one bat. Consequently, our approach is a greedy approach. Below are some examples of how each bat’s track is found by the algorithm, as well as the final version that contains all bats’ tracks:

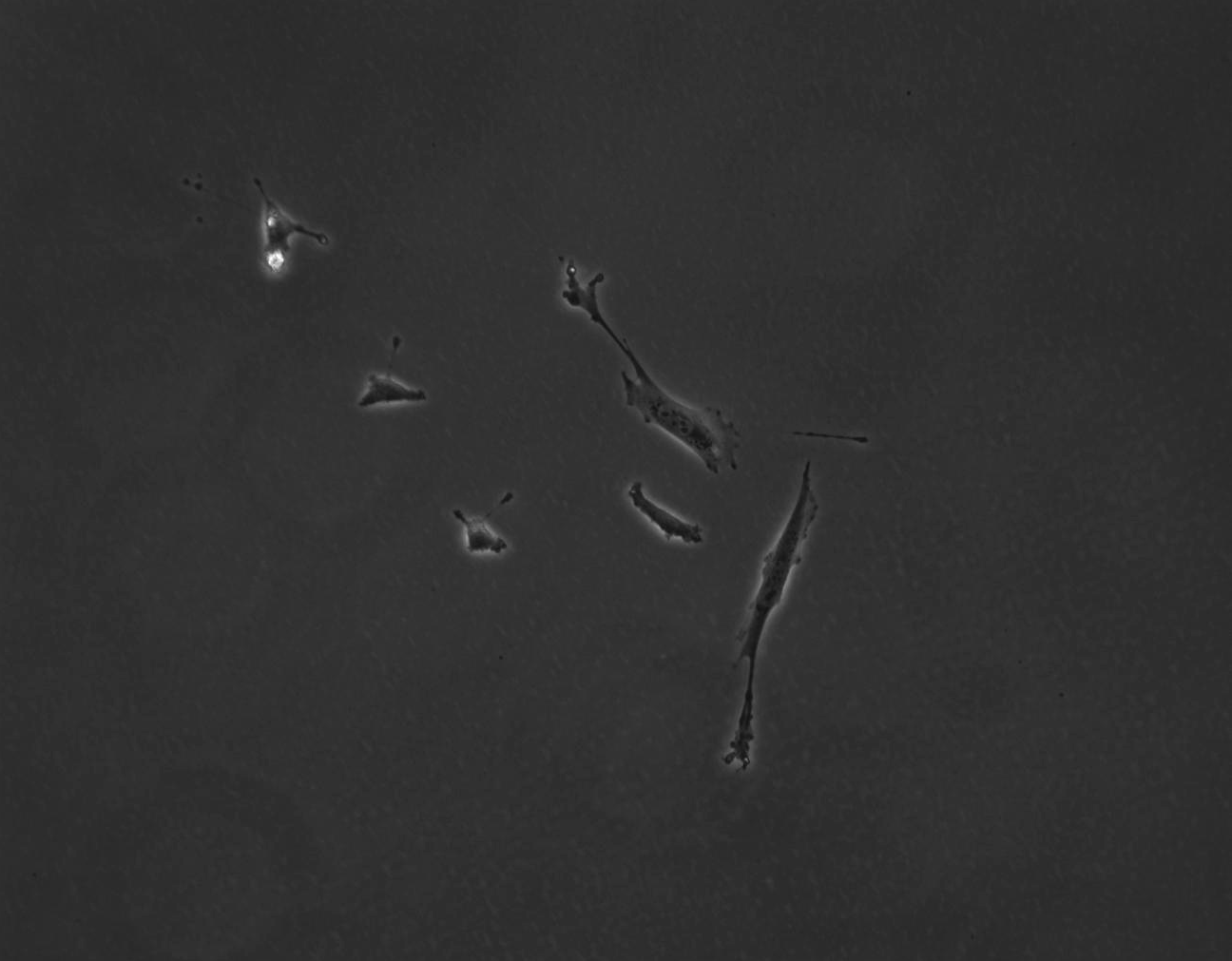

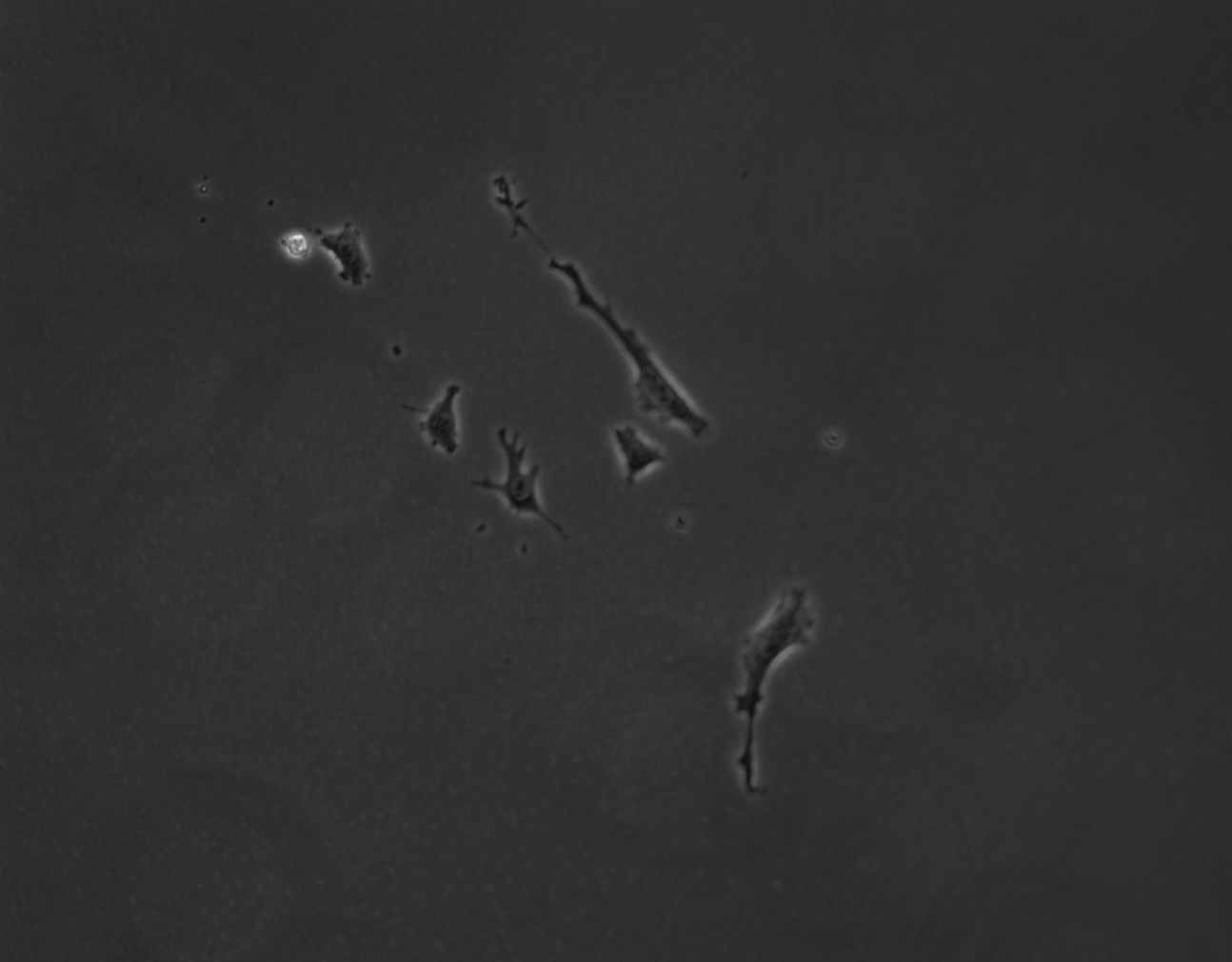

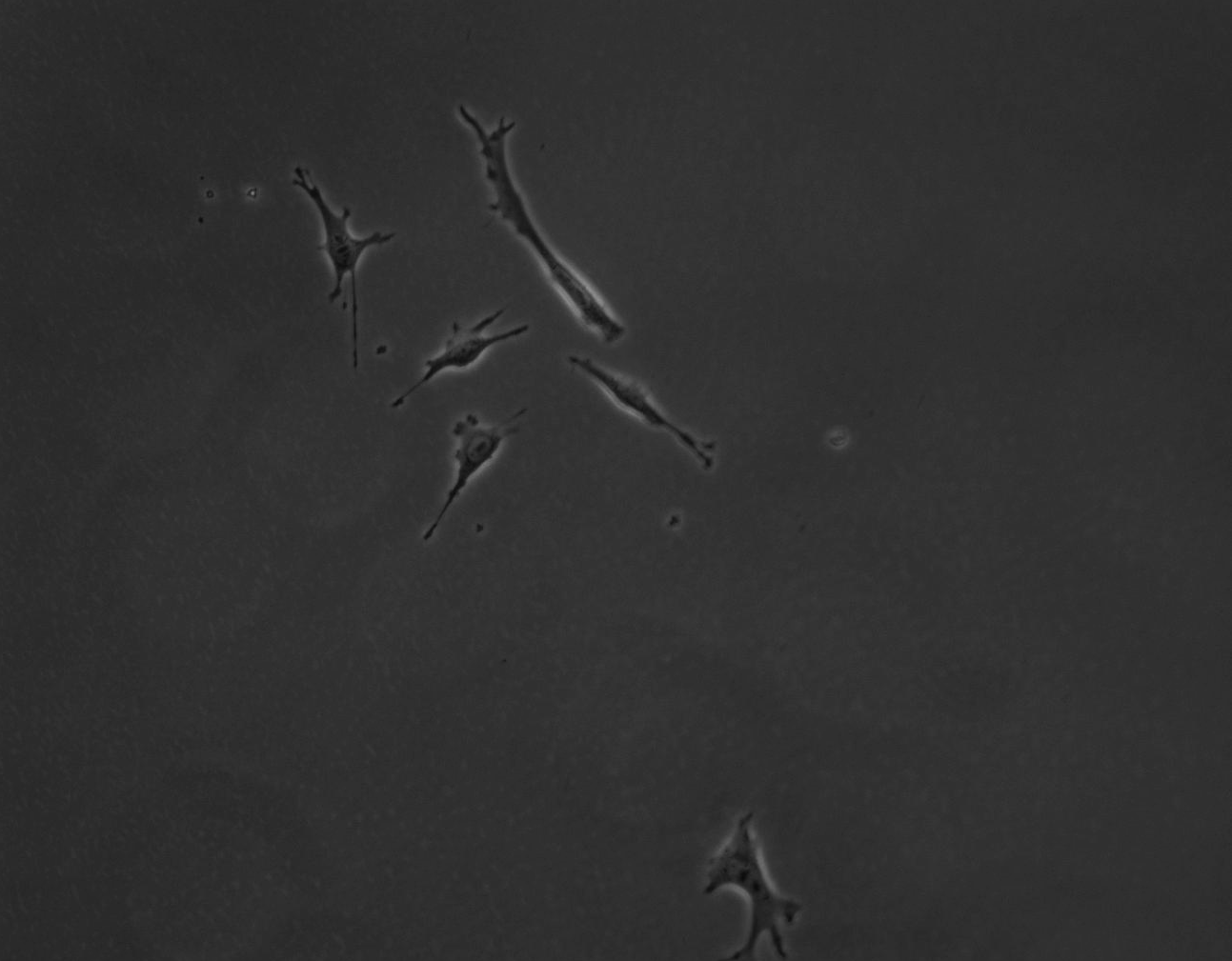

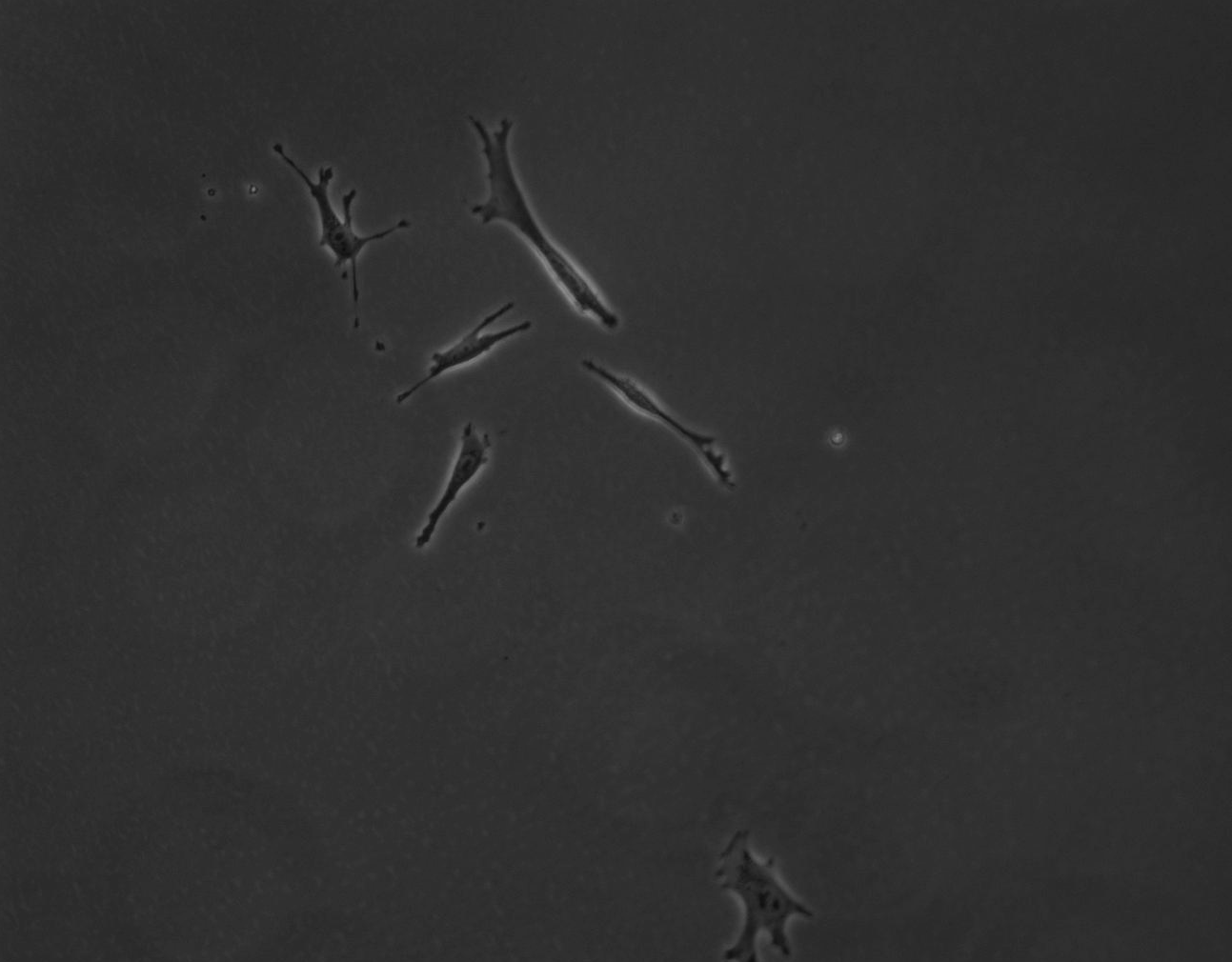

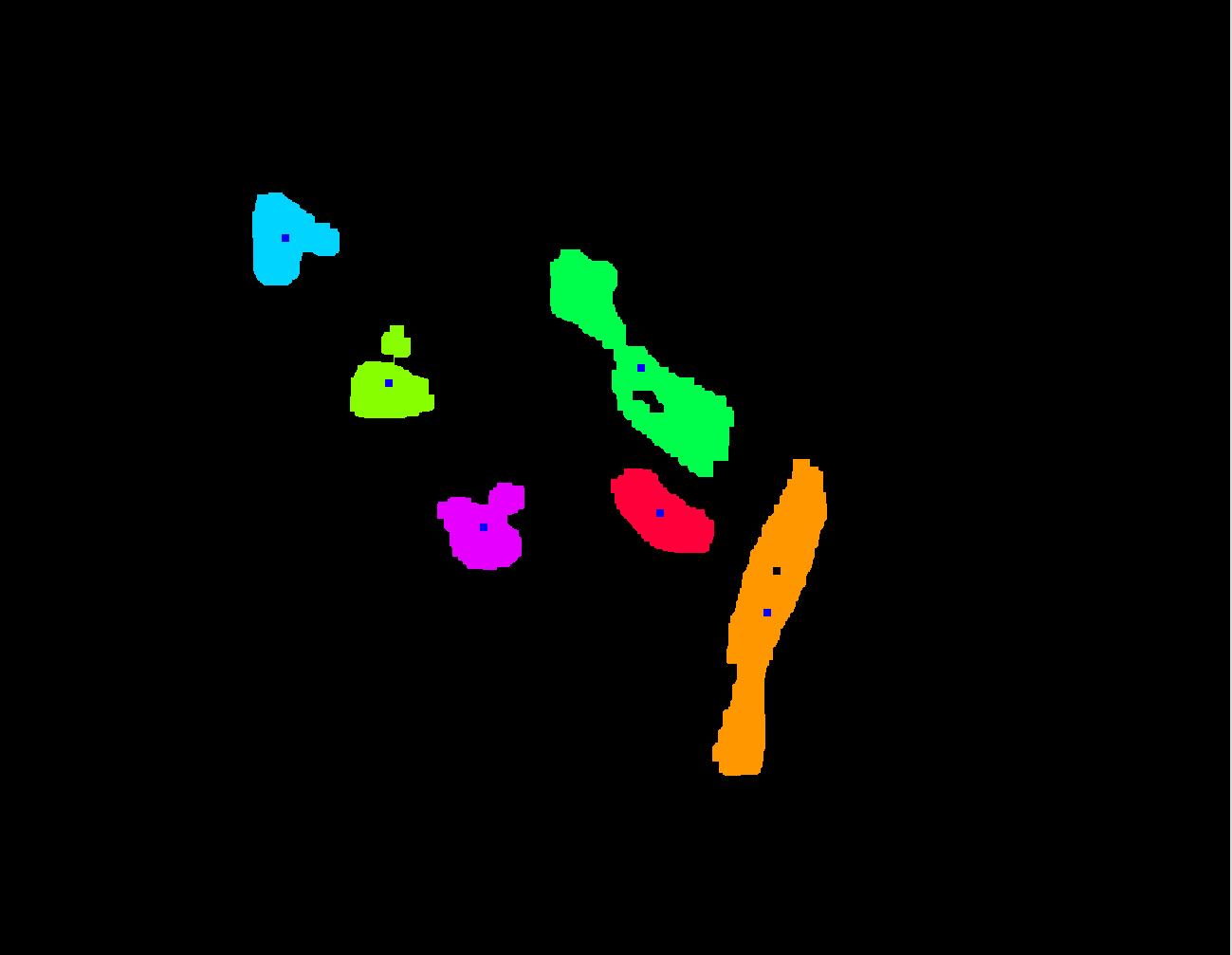

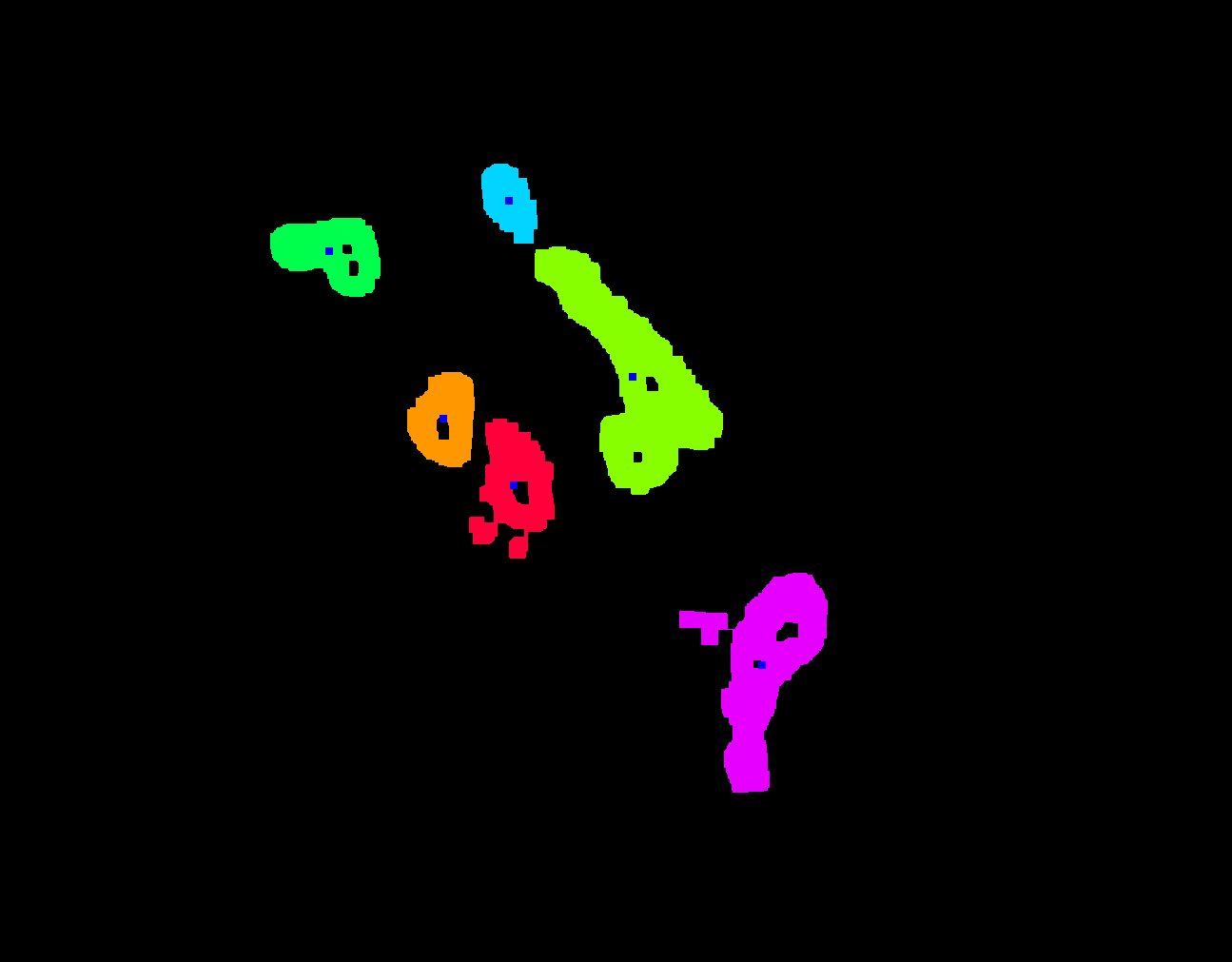

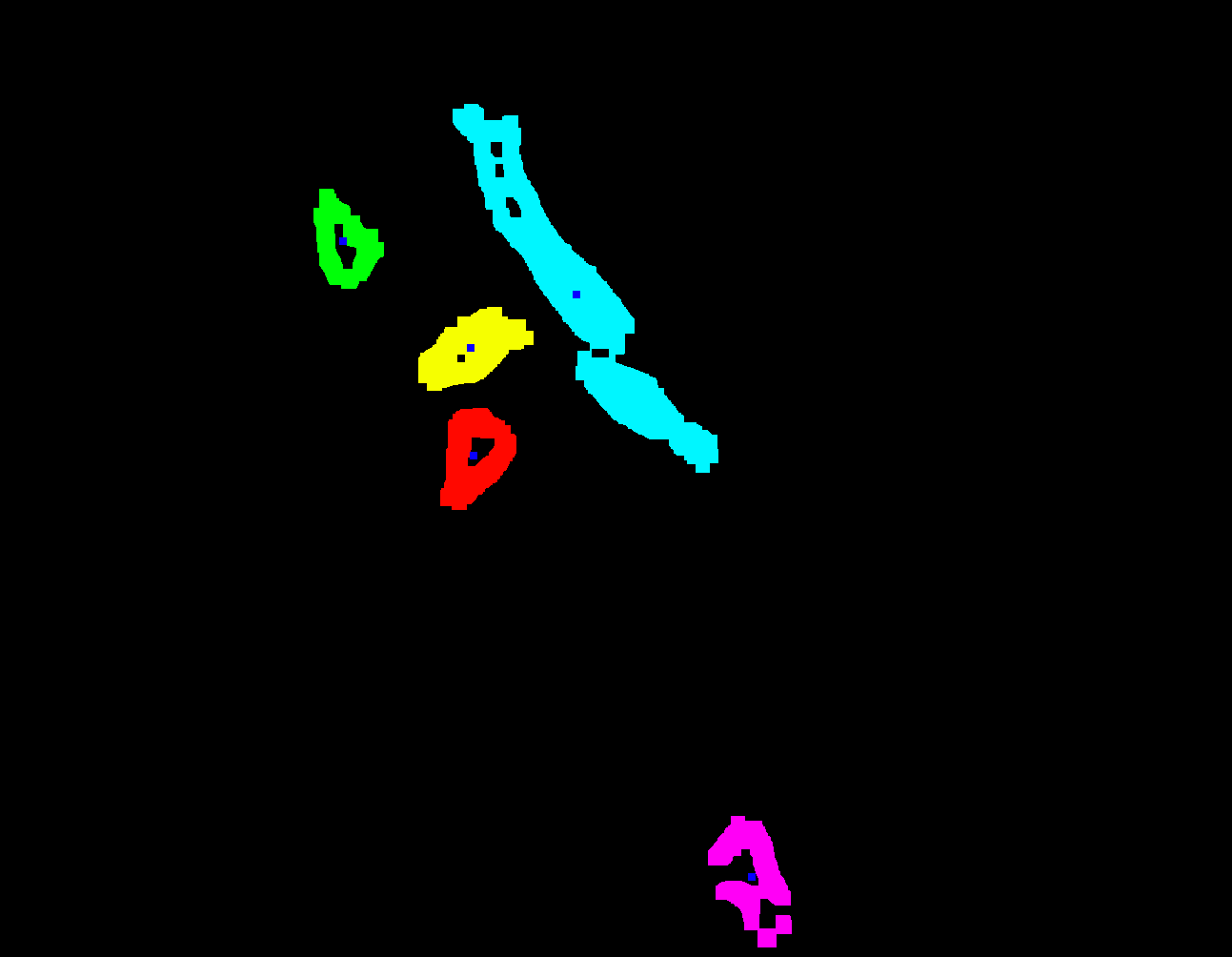

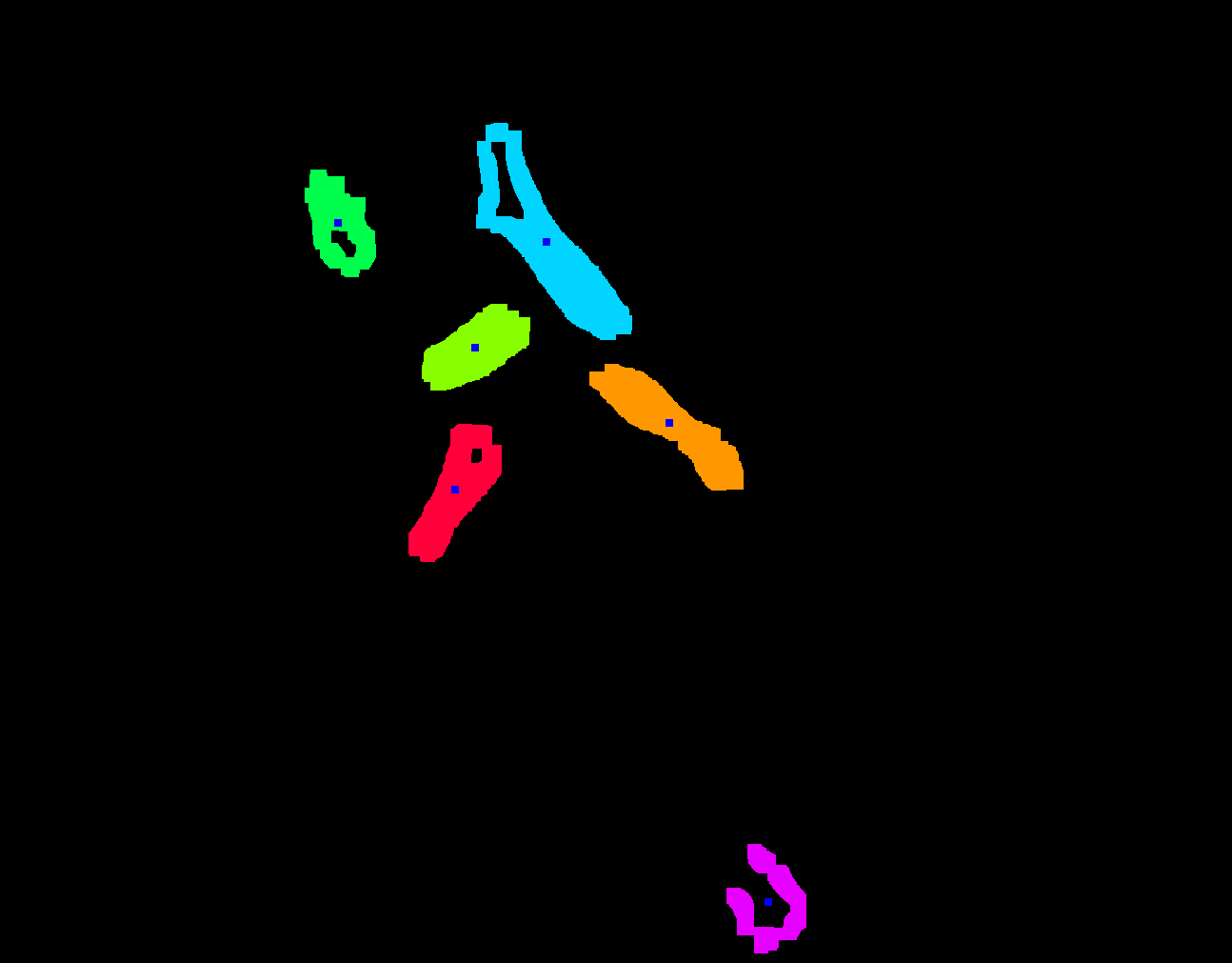

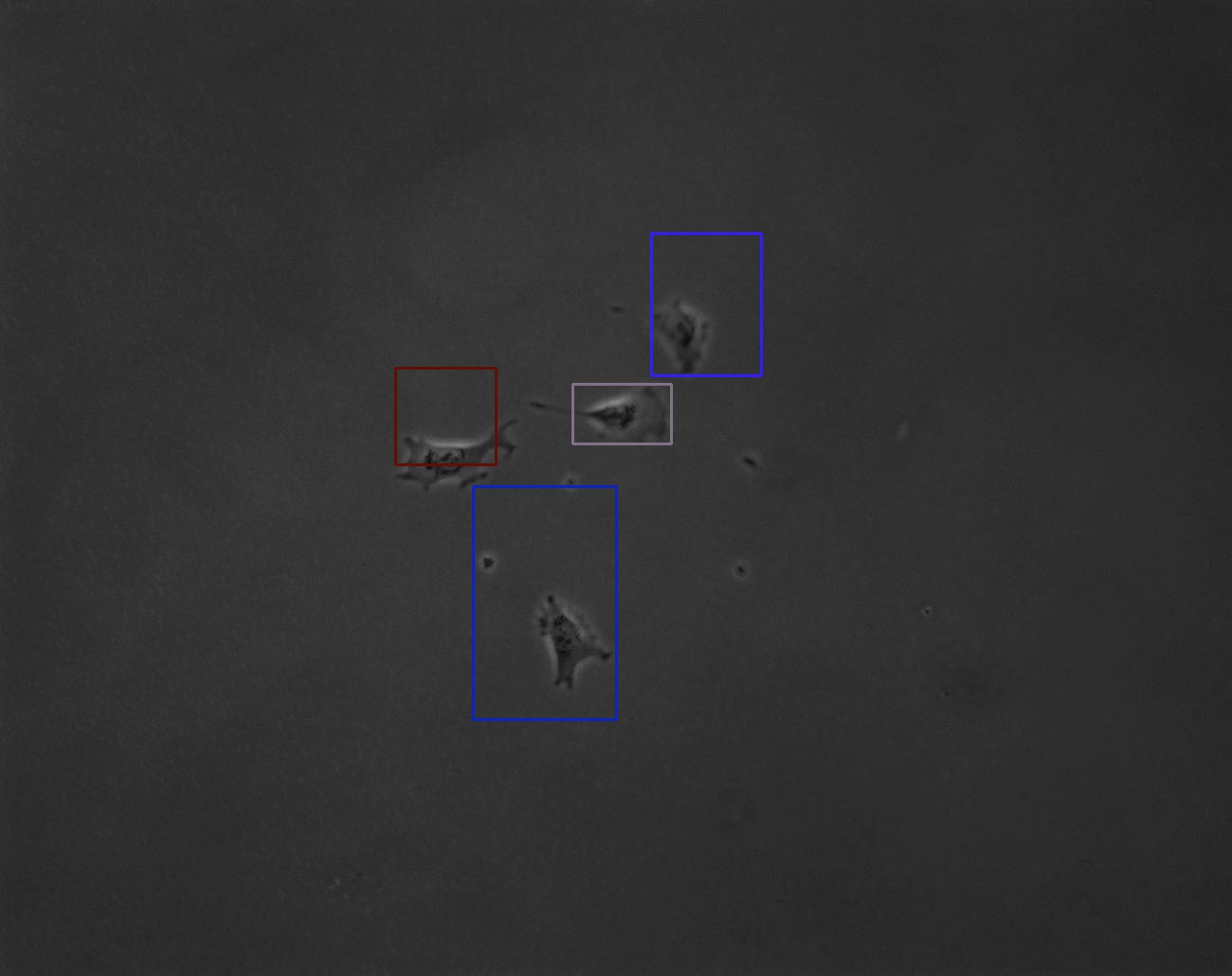

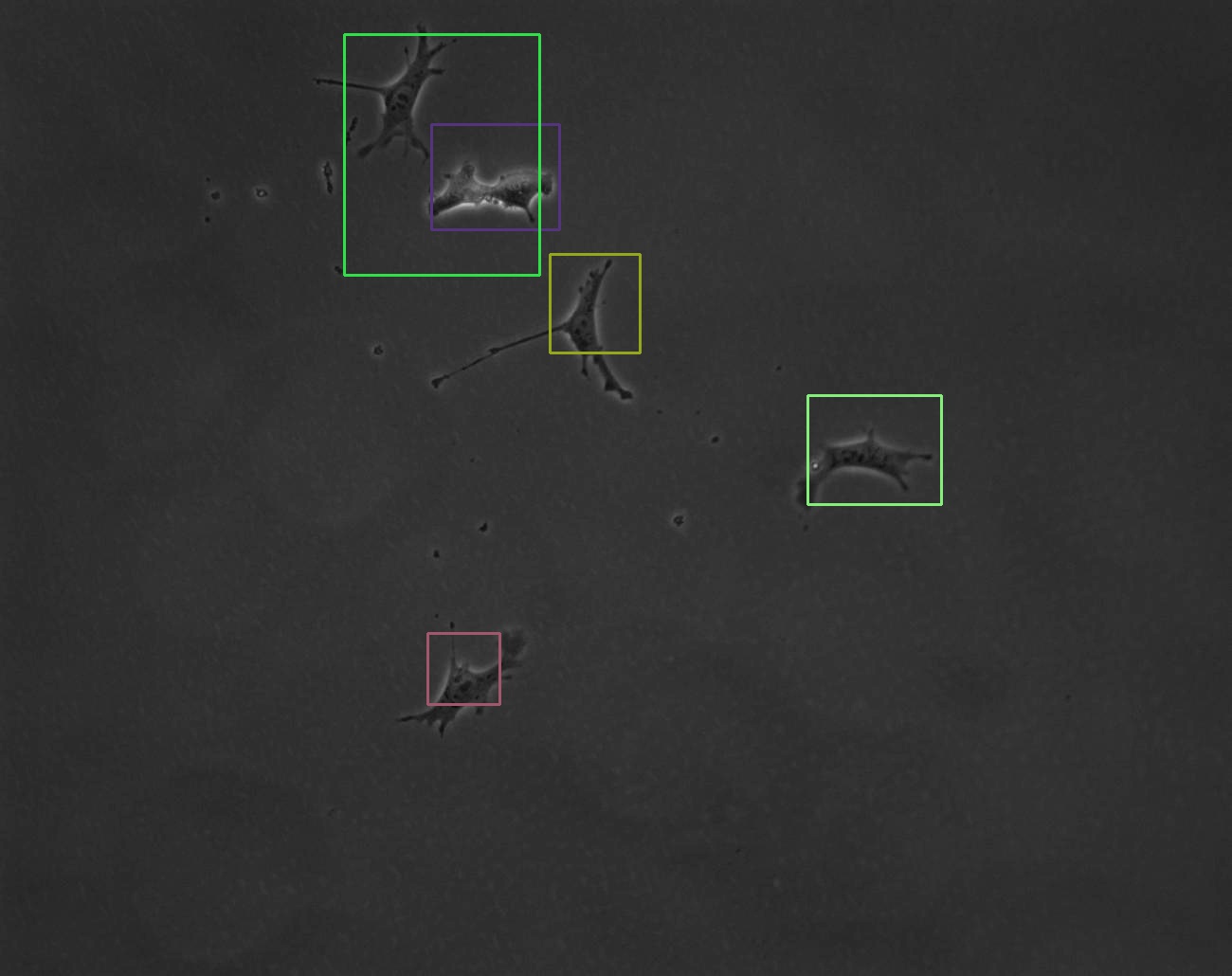
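
The greedy assignment described above can be sketched as follows. This is an illustrative sketch rather than our exact code: the function name `greedy_match` and the optional `max_dist` gate are hypothetical additions, and the predicted locations are assumed to be given (for example by the Kalman filter).

```python
import math


def greedy_match(predicted, detections, max_dist=float("inf")):
    """Greedily match each track's predicted location to a detection.

    predicted  : list of (x, y), one per existing track
    detections : list of (x, y) centroids in the next frame
    max_dist   : hypothetical gate; farther matches are rejected
    Returns (assignment, unmatched), where assignment maps a track index
    to a detection index and unmatched lists the unused detection indices.
    """
    free = list(range(len(detections)))  # detections not yet claimed
    assignment = {}
    for track_idx, point in enumerate(predicted):
        if not free:
            break
        # Closest remaining detection by L2-distance.
        best = min(free, key=lambda j: math.dist(point, detections[j]))
        if math.dist(point, detections[best]) <= max_dist:
            assignment[track_idx] = best
            free.remove(best)  # a detection can serve only one track
    return assignment, free
```

Because tracks are processed in a fixed order, an early track can claim a detection that a later track is closer to. An optimal assignment (for example the Hungarian algorithm) would avoid this at the cost of a more involved implementation.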

For the cell-detection part, we first detected the cells using the “halos” around each cell, because the images are gray-scale and the cell bodies are hardly distinguishable from the background; this yields the preliminary detections. To denoise these preliminary detections, we first performed morphological closing and then eliminated very small objects, under the assumptions that (1) the important cells should be reasonably large relative to the frame, and (2) if a detected object is very small and is in fact a cell, then we probably do not care much about it anyway. This yields very clean cells for each frame, and we label these cells using different colors.

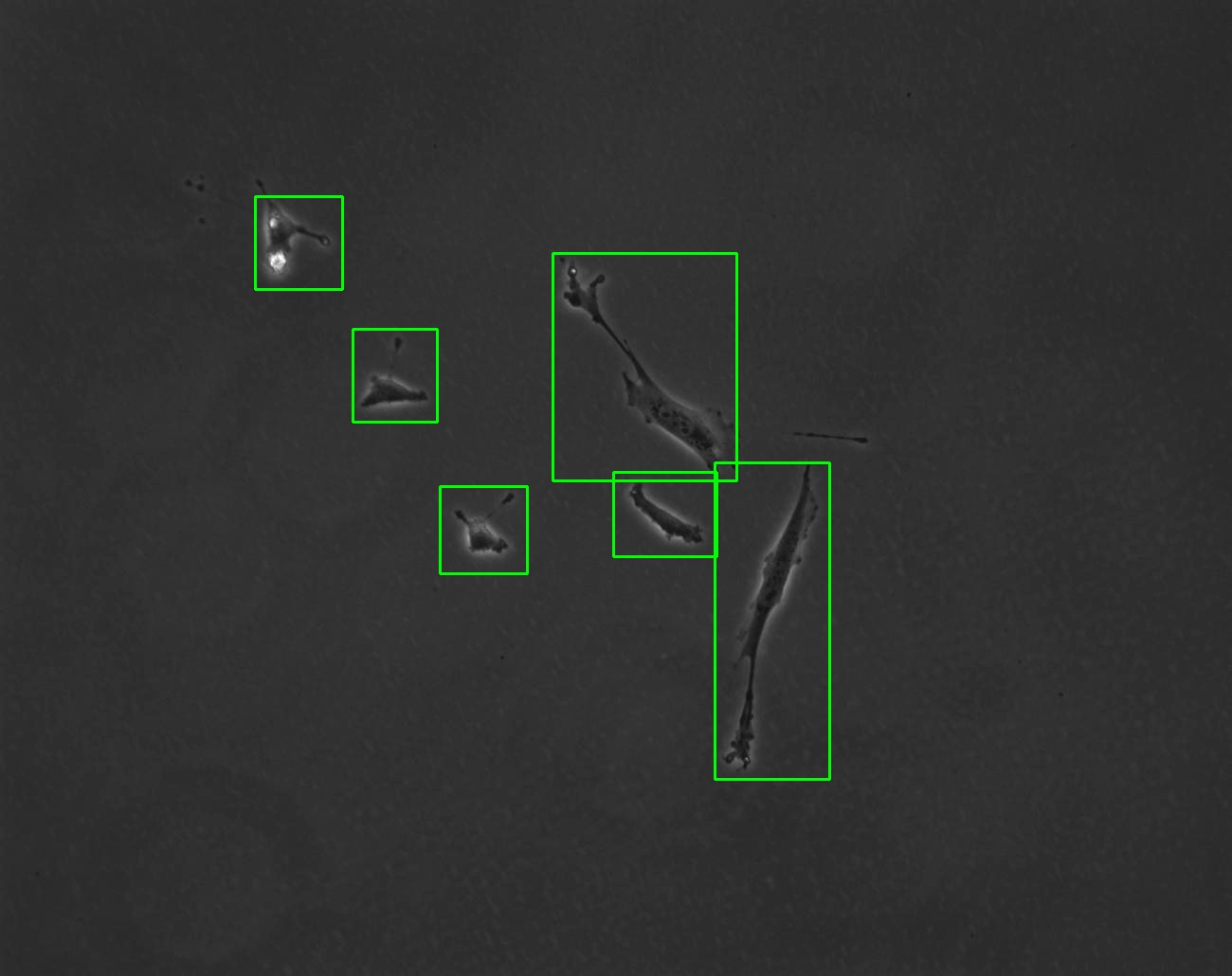

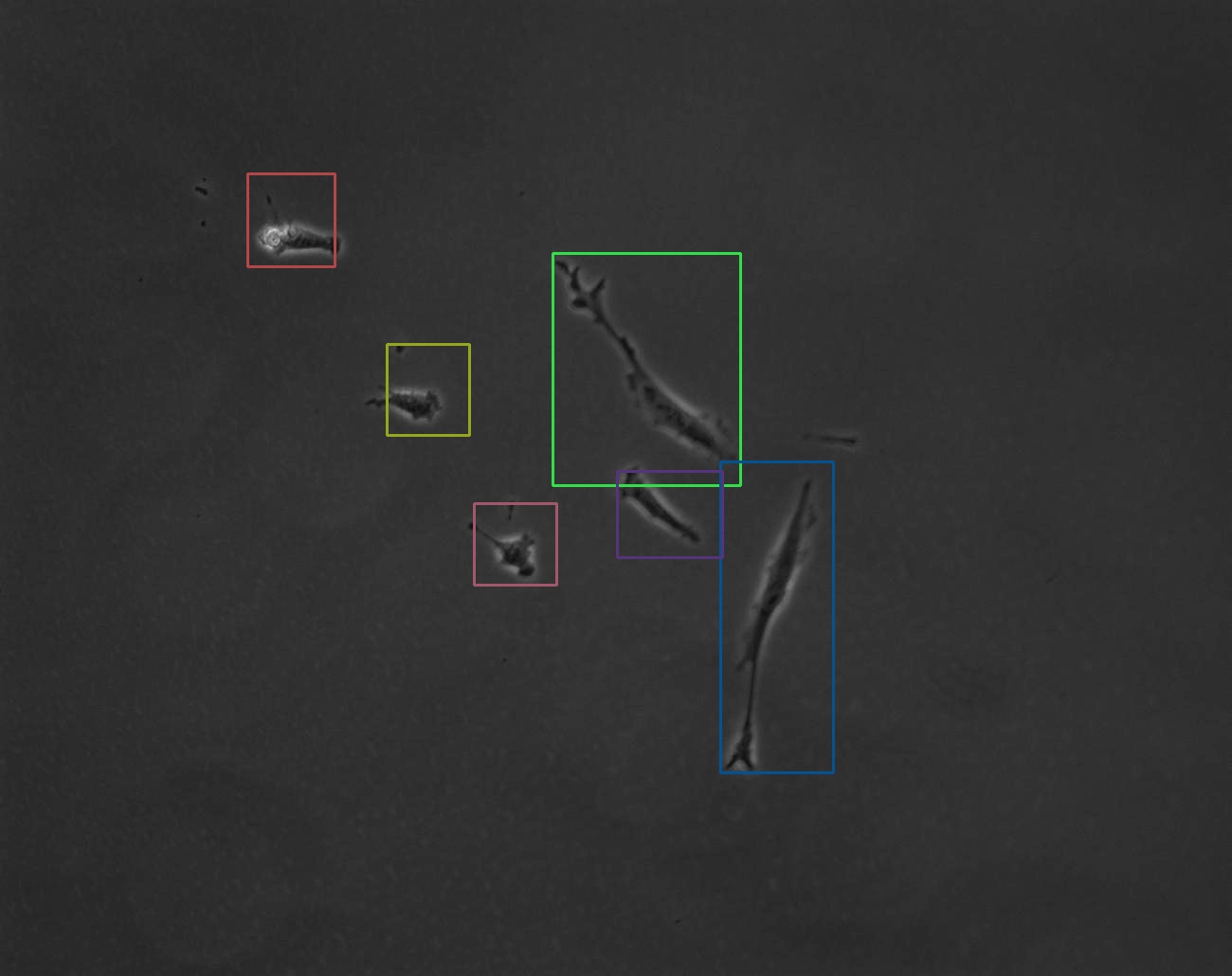

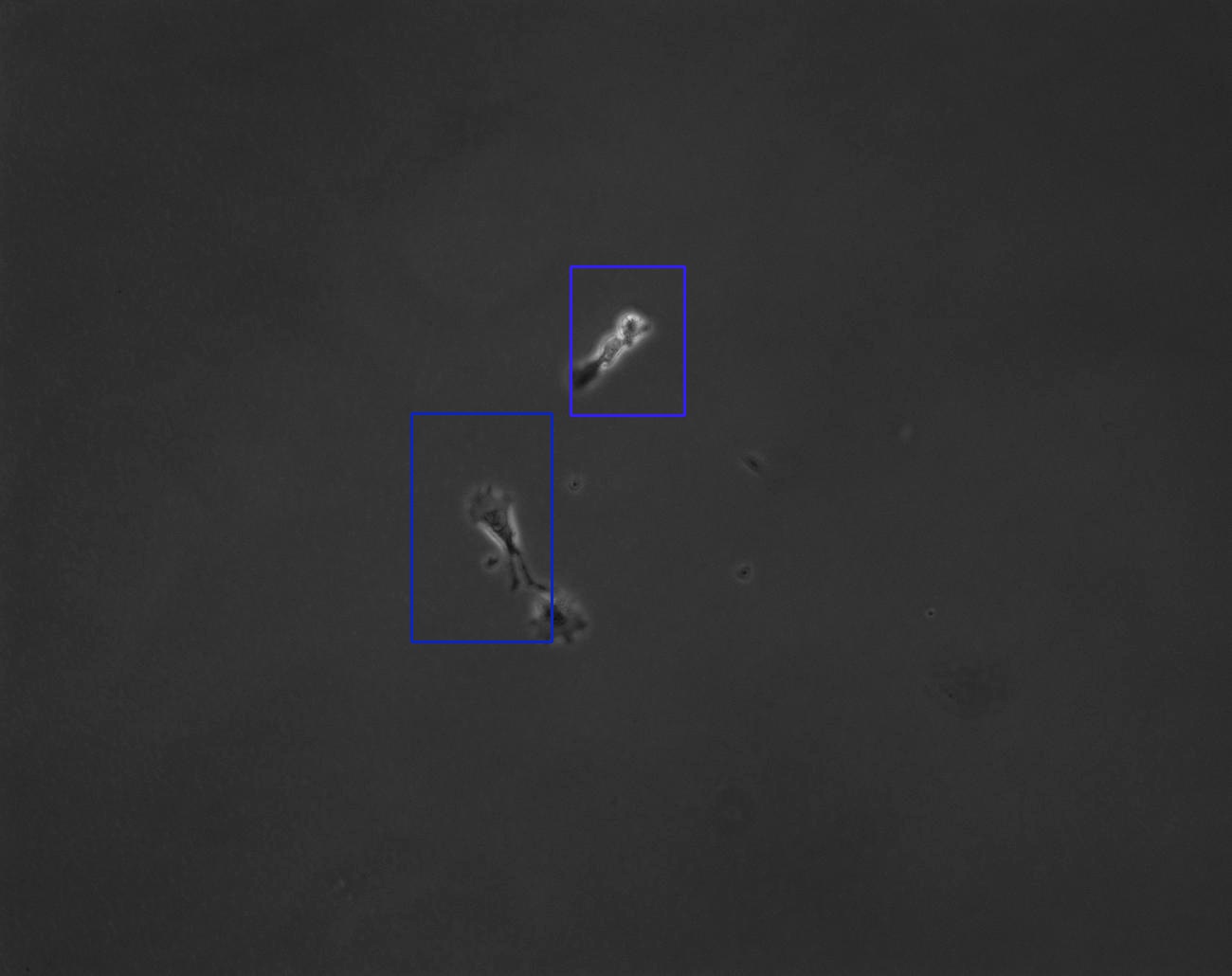

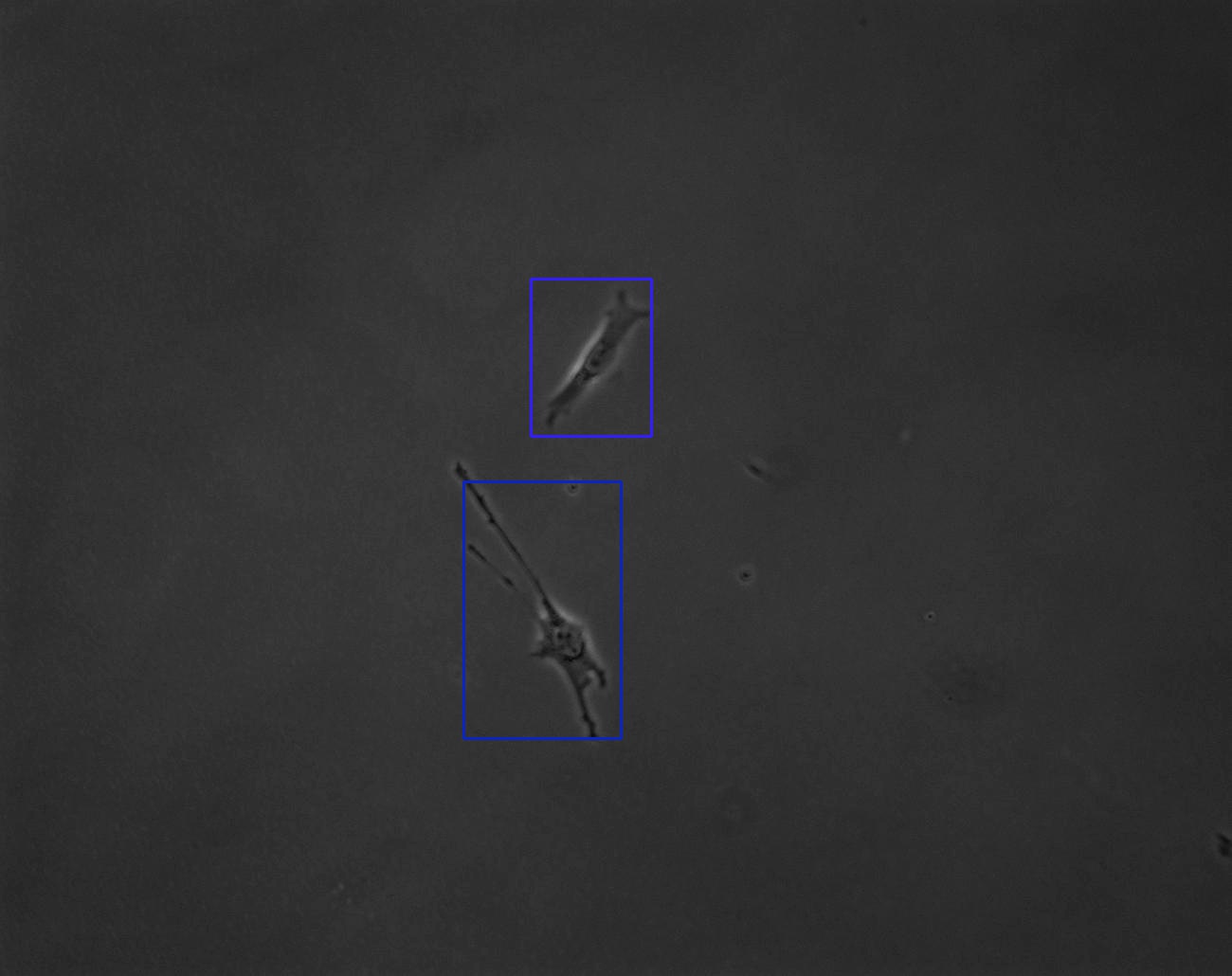
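
The denoising and labeling steps described above can be sketched as follows. This is a dependency-free illustration rather than our submitted code: the 3x3 structuring element, the 4-connectivity, the `min_area` threshold, and the function names are all assumptions. Each integer label in the output would then be mapped to its own display color.

```python
from collections import deque


def _morph(mask, rule):
    """Apply a 3x3 neighbourhood rule: `any` dilates, `all` erodes.

    Windows are clipped at the image border (an assumption of this sketch).
    """
    height, width = len(mask), len(mask[0])
    out = [[0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            window = (mask[ny][nx]
                      for ny in range(max(0, y - 1), min(height, y + 2))
                      for nx in range(max(0, x - 1), min(width, x + 2)))
            out[y][x] = int(rule(window))
    return out


def closing(mask):
    """Morphological closing: dilation followed by erosion."""
    return _morph(_morph(mask, any), all)


def label_large_components(mask, min_area):
    """Label 4-connected components, dropping those below min_area pixels."""
    height, width = len(mask), len(mask[0])
    labels = [[0] * width for _ in range(height)]
    seen = [[False] * width for _ in range(height)]
    next_label = 1
    for sy in range(height):
        for sx in range(width):
            if not mask[sy][sx] or seen[sy][sx]:
                continue
            # Breadth-first flood fill collects one component.
            seen[sy][sx] = True
            queue, component = deque([(sy, sx)]), []
            while queue:
                y, x = queue.popleft()
                component.append((y, x))
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    if (0 <= ny < height and 0 <= nx < width
                            and mask[ny][nx] and not seen[ny][nx]):
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            if len(component) >= min_area:
                for y, x in component:
                    labels[y][x] = next_label
                next_label += 1
    return labels
```

A call such as `label_large_components(closing(mask), min_area=4)` first bridges small gaps in the halo mask and then discards specks, which mirrors the order of operations in the paragraph above.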

For the cell-tracking part, we first tried a tracker called Discriminative Correlation Filter with Channel and Spatial Reliability (CSRT). However, because some cells in the frames divide into daughter cells, CSRT, an algorithm that basically “learns” a feature map of each bounding box in the current frame, sometimes fails to match this feature map with any of the feature maps in the next frame. We then tried optical flow with Shi-Tomasi corner detection, but because some cells change shape over time, this approach did not work quite as desired either. Nevertheless, we still have some reasonably decent results to report:

Examples of original cell frames:

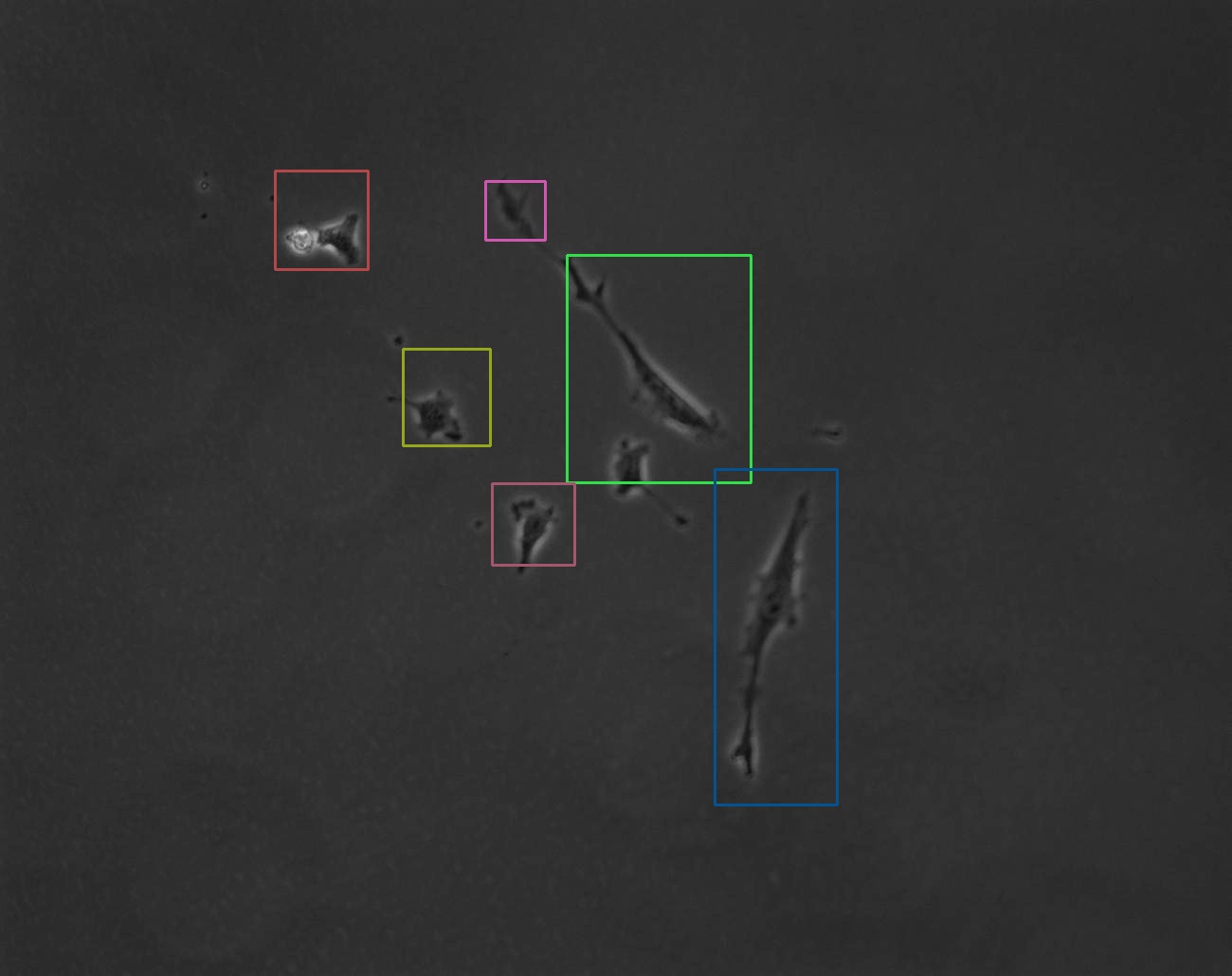

Examples of cell labels:

Examples of cell trackings:

Discussions

> Show your tracking results on some portion of the sequence. In addition to showing your tracking results on an easy portion of the data,

> identify a challenging situation where your tracker succeeds, and a challenging situation where your tracker fails.

See above.

> How do you decide to begin new tracks and terminate old tracks as the objects enter and leave the field of view?

In the code, whenever a bat or a cell disappears, that bat’s or cell’s track history is completely erased. This is, of course, not rigorous, but it simplifies the implementation. Whenever a new track begins, we simply store the new coordinate as a new list of lists, so that future coordinates can be appended to the outer list to form an actual track.

> What happens with your algorithm when objects touch and occlude each other, and how could you handle this so you do not break track?

We introduced the Kalman filter to deal with cases in which tracks overlap: each track is matched against its predicted location rather than its last observed location.

> What happens when there are spurious detections that do not connect with other measurements in subsequent frames?

Spurious detections just create extra tracks that are not supposed to be there, but such extra tracks should not negatively affect the real tracks, as long as we have a way to deal with overlaps, as described in our answer to the previous question.

> What are the advantages and drawbacks of different kinematic models: Do you need to model the velocity of the objects, or is it sufficient

> to just consider the distances between the objects in subsequent frames?

We introduced the Kalman filter because it is insufficient to only measure the frame-pairwise L2-distances. It is insufficient because one bat could fly very far away by the next frame while a different bat flies in toward the first bat’s location in the current frame; many other corner cases also come to mind. Consequently, we would like to make use of the velocity information, with the more reasonable underlying assumption that, from the current frame to the next frame, each bat’s velocity should not change much.
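
The constant-velocity idea above can be sketched with a small Kalman filter. This is a minimal illustration, not our submitted code: the class names, the noise parameters `q` and `r`, the initial covariance, and the choice to filter the x and y axes independently (which assumes axis-independent noise) are all assumptions of the sketch.

```python
class AxisKalman:
    """1-D constant-velocity Kalman filter with state [position, velocity]."""

    def __init__(self, pos, q=0.01, r=1.0):
        self.pos, self.vel = float(pos), 0.0
        # Assumed initial covariance: position roughly known, velocity unknown.
        self.P = [[1.0, 0.0], [0.0, 100.0]]
        self.q, self.r = q, r  # assumed process / measurement noise

    def predict(self, dt=1.0):
        """Propagate the state one step: x = F x, P = F P F^T + q I."""
        self.pos += self.vel * dt
        (a, b), (c, d) = self.P
        self.P = [[a + dt * (b + c) + dt * dt * d + self.q, b + dt * d],
                  [c + dt * d, d + self.q]]
        return self.pos

    def update(self, z):
        """Correct the state with a position measurement z (H = [1, 0])."""
        (a, b), (c, d) = self.P
        s = a + self.r                # innovation variance
        k0, k1 = a / s, c / s         # Kalman gain
        residual = z - self.pos
        self.pos += k0 * residual
        self.vel += k1 * residual
        # P = (I - K H) P
        self.P = [[(1 - k0) * a, (1 - k0) * b],
                  [c - k1 * a, d - k1 * b]]


class Track2D:
    """One tracked object: an independent AxisKalman per image axis."""

    def __init__(self, xy):
        self.fx, self.fy = AxisKalman(xy[0]), AxisKalman(xy[1])

    def predict(self):
        return self.fx.predict(), self.fy.predict()

    def update(self, xy):
        self.fx.update(xy[0])
        self.fy.update(xy[1])
```

In each frame, `predict()` would supply the location that is compared against the detections, and `update()` would be called with the detection that was matched. A pure distance-based tracker corresponds to always predicting the last observed position.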
