- Facilitating Accessibility and Digital Inclusion Using Gaze-Aware Wearable Computing

KURAUCHI, A. T. N.; TULA, A. D.; MORIMOTO, C. H. Facilitating Accessibility and Digital Inclusion Using Gaze-Aware Wearable Computing. XIII Brazilian Symposium on Human Factors in Computer Systems, IHC'14. Foz do Iguaçu, Brazil, Oct. 2014.


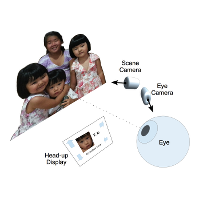

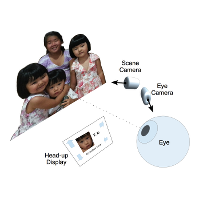

Gaze interaction has been helping people with physical disabilities for many years, but its use is still limited, in part because it has been designed for desktop platforms. In this paper we discuss how gaze-aware wearable computing can be used to create new applications that can significantly enhance the quality of life of people with disabilities, including the elderly, and can also promote digital inclusion for illiterate people.

- Método de Calibração de Rastreadores de Olhar Móveis Utilizando 2 Planos Para Correção de Paralaxe (pt-BR)

KURAUCHI, A. T. N.; TULA, A. D.; MORIMOTO, C. H. Método de Calibração de Rastreadores de Olhar Móveis Utilizando 2 Planos Para Correção de Paralaxe. XXIV Brazilian Congress on Biomedical Engineering, CBEB'14. Uberlândia, Brazil, p.2683-2686, Oct. 2014.


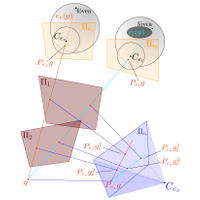

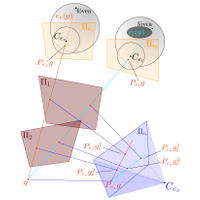

Most mobile eye trackers assume that the user is always looking at the same plane. This assumption is acceptable when the user's gaze is limited to a computer screen. However, it introduces an error when the eye tracker is used to collect gaze data over 3D objects in natural scenes. A significant component of this error is due to the parallax between the eye and the scene camera of the mobile eye tracker. In this work we present a new method, based on invariant properties of projective geometry, that compensates for the parallax error using two calibration planes. Simulation results indicate that the method is sound and promising: it is more robust to depth variations than traditional methods, with average errors up to 2.5 times smaller.
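To make the source of the parallax error concrete, the following is a minimal sketch of a simplified pinhole model; it is not the two-plane method from the paper. It assumes the eye and the scene camera look in the same direction and are separated laterally by a baseline, and that calibration was done on a single plane at a known depth. The function name, the 3 cm baseline, and the 600 px focal length are illustrative assumptions.

```python
def parallax_error_px(depth_m, calib_depth_m, baseline_m=0.03, focal_px=600.0):
    """Gaze estimation error (in scene-camera pixels) caused by parallax.

    Simplified model (an assumption, not the paper's method): a point seen
    along a gaze direction theta at depth d projects to
    focal * (tan(theta) - baseline / d) in the scene camera. A single-plane
    calibration at depth d0 always predicts focal * (tan(theta) - baseline / d0),
    so the error is focal * baseline * |1/d0 - 1/d|, independent of theta.
    """
    if depth_m <= 0 or calib_depth_m <= 0:
        raise ValueError("depths must be positive")
    return focal_px * baseline_m * abs(1.0 / calib_depth_m - 1.0 / depth_m)


if __name__ == "__main__":
    # Error for targets at several depths after calibrating at 1 m.
    for depth in (0.5, 1.0, 2.0, 5.0):
        err = parallax_error_px(depth, calib_depth_m=1.0)
        print(f"{depth:4.1f} m -> {err:5.1f} px")
```

Under these assumptions the error is zero on the calibration plane and grows as the target moves away from it, most sharply for targets closer than the calibration depth.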

- Sistema Móvel de Baixo Custo Para Rastreamento do Olhar Voltado à Identificação de Disfunções Oculomotoras (pt-BR)

TULA, A. D.; KURAUCHI, A. T. N.; MORIMOTO, C. H.; VEITZMAN, S.; IANOF, J. N. Sistema Móvel de Baixo Custo Para Rastreamento do Olhar Voltado à Identificação de Disfunções Oculomotoras. XXIV Brazilian Congress on Biomedical Engineering, CBEB'14. Uberlândia, MG, Brazil, p.2830-2833, Oct. 2014.


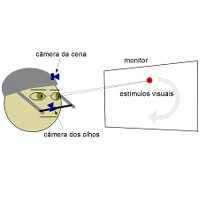

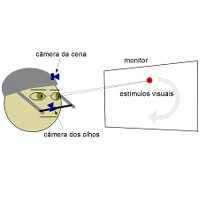

The study of eye movements is very important in the identification of oculomotor dysfunctions. Nonetheless, commercial eye tracking systems are very expensive and have limited mobility. In this paper we present a low-cost, mobile, head-mounted eye tracking platform for the study of eye movements, with the aim of helping to identify oculomotor dysfunctions. We describe the hardware and software developed, as well as a simple case study of visual pursuits. The proposed system can help in the oculomotor evaluation of patients, with the goal of improving their quality of life.

- Towards Wearable Gaze Supported Augmented Cognition

KURAUCHI, A. T. N.; MORIMOTO, C. H.; MARDANBEGI, D.; HANSEN, D. W. Towards Wearable Gaze Supported Augmented Cognition. Proc. of the CHI 2013 Workshop on Gaze Interaction in the Post-WIMP World, Paris, France, Apr. 2013.


Augmented cognition applications must deal with the problem of how to exhibit information in an orderly, understandable, and timely fashion. Though context has been suggested as a way to control the kind, amount, and timing of the information delivered, we argue that gaze can be a fundamental tool to reduce the amount of information and provide an appropriate mechanism for low- and divided-attention interaction. We claim that most current gaze interaction paradigms are not appropriate for wearable computing because they are not designed for divided attention. We have used principles suggested by the wearable computing community to develop a gaze-supported augmented cognition application with three interaction modes. The application provides information about the person being looked at. The continuous mode updates the information every time the user looks at a different face. The key-activated discrete mode and the head-gesture-activated mode only update the information when the key is pressed or the gesture is performed. A prototype of the system is currently under development, and it will be used to further investigate these claims.
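The update rule behind the three interaction modes can be sketched as below. This is a hypothetical illustration based only on the description in the abstract, not the prototype's code; the names `Mode` and `should_update` and the idea of identifying faces by a string label are assumptions.

```python
from enum import Enum, auto


class Mode(Enum):
    CONTINUOUS = auto()    # update whenever a different face is looked at
    KEY = auto()           # update only when the key is pressed
    HEAD_GESTURE = auto()  # update only when the head gesture is performed


def should_update(mode, looked_face, shown_face,
                  key_pressed=False, gesture_done=False):
    """Return True if the displayed information should be refreshed.

    looked_face: label of the face currently under gaze, or None.
    shown_face:  label of the face whose information is being displayed.
    """
    if looked_face is None:
        return False  # nothing to show information about
    if mode is Mode.CONTINUOUS:
        return looked_face != shown_face
    if mode is Mode.KEY:
        return key_pressed
    return gesture_done  # Mode.HEAD_GESTURE
```

In this sketch the two discrete modes ignore gaze shifts until the explicit trigger arrives, which is what lets the user look around without the display changing.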

- Facilitating Gaze Interaction Using the Gap and Overlap Effects

TULA, A. D.; KURAUCHI, A. T. N.; MORIMOTO, C. H. Facilitating Gaze Interaction Using the Gap and Overlap Effects. CHI '13 Extended Abstracts on Human Factors in Computing Systems. Paris, France, Apr. 2013.


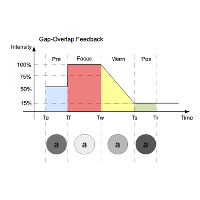

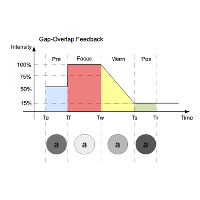

Many results from psychophysics have indicated that the latency of saccadic eye movements is affected by how a new visual stimulus is presented. In this paper we show how two such results, known as the gap effect (GE) and the overlap condition (OC), can be used to improve gaze interaction. We have chosen a dwell-time-based eye typing application, since eye typing can be easily modeled as a sequence of eye movements from one key to the next. By modeling how dwell time selection is performed, we show how the GE and OC can be used to generate visual feedback that facilitates the eye movement to the next key. A pilot experiment was conducted in which participants had to type short phrases on a virtual keyboard using two different visual feedback methods: a traditional feedback based on animation and a new feedback scheme using the GE and OC. Results show that feedback that exploits these phenomena facilitates eye movements and can improve the eye typing user experience and performance.
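For readers unfamiliar with dwell time selection, the sketch below shows the baseline mechanism only: a key is selected once gaze has rested on it for a threshold duration. It does not model the GE/OC feedback scheme from the paper. The function name `dwell_select`, the 0.5 s threshold, and the `(timestamp, key)` sample format are assumptions made for illustration.

```python
def dwell_select(samples, dwell_s=0.5):
    """Return the keys selected by dwell time.

    samples: iterable of (timestamp_seconds, key) pairs in time order, where
             key is the key under the gaze point or None if gaze is off the
             keyboard.
    A key is selected once gaze has stayed on it for at least dwell_s
    seconds; it is not selected again until gaze leaves and returns.
    """
    selected = []
    current_key = None   # key currently under gaze
    start = None         # time at which gaze entered current_key
    fired = False        # whether current_key was already selected
    for t, key in samples:
        if key != current_key:
            # Gaze moved to a different key (or off the keyboard): restart.
            current_key, start, fired = key, t, False
        elif key is not None and not fired and t - start >= dwell_s:
            selected.append(key)
            fired = True
    return selected


if __name__ == "__main__":
    gaze = [(0.0, "h"), (0.3, "h"), (0.6, "h"),   # long enough: selects "h"
            (0.7, "i"), (0.9, "i"), (1.3, "i"),   # long enough: selects "i"
            (1.4, "x"), (1.5, "y")]               # brief glances: ignored
    print(dwell_select(gaze))
```

Because every selection requires waiting out the threshold before moving on, the time needed to launch the next saccade adds directly to typing time, which is why feedback that shortens saccade latency is relevant here.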