Research – John Magee

I am researching with Prof. Margrit Betke. I am part of the Image and Video Computing (IVC) group. My main research interests are human-computer interaction, accessible technology, and computer vision.

I am currently working on interfaces based on head and eye gestures or motions, with applications to accessibility.


Projects

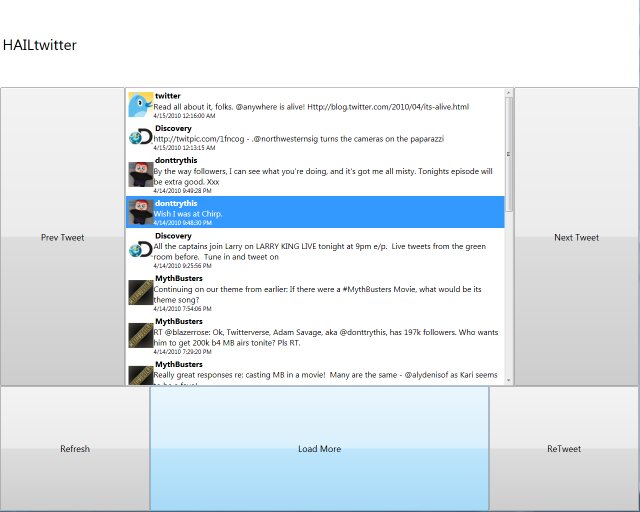

HAIL: Hierarchical Adaptive Interface Layout


HAIL is a framework for adapting software to the needs of individuals with severe motion disabilities who use mouse substitution interfaces. The Hierarchical Adaptive Interface Layout (HAIL) model is a set of specifications for the design of user interface applications that adapt to the user. In HAIL applications, all of the interactive components are placed on configurable toolbars along the edge of the screen. Paper: pdf. Abstract.

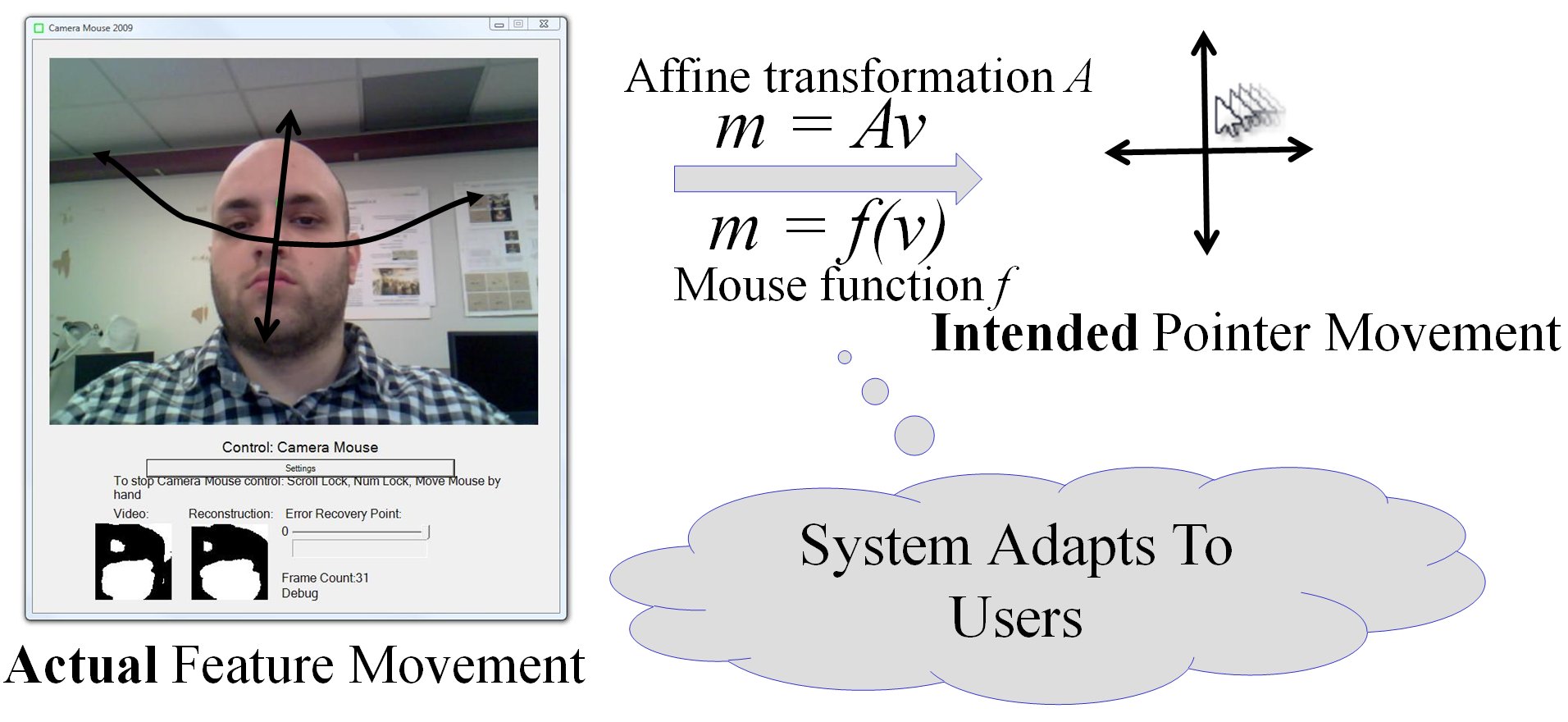

Adaptive Mouse Functions


Traditional approaches to assistive technology are often inflexible, requiring users to adapt their limited motions to the requirements of the system. Such systems may have static or difficult-to-change configurations that make it challenging for multiple users at a care facility to share the same system or for users whose motion abilities slowly degenerate. Current technology also does not address short-term changes in motion abilities that can occur in the same computer session. As users fatigue while using a system, they may experience more limited motion ability or additional unintended motions. To address these challenges, we propose adaptive mouse-control functions to be used in our mouse-replacement system. These functions can be changed to adapt the technology to the needs of the user, rather than making the user adapt to the technology. Paper: pdf.
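To illustrate what an adjustable mouse-control function might look like, here is a minimal sketch in Python. It is not the implementation from the paper: the class name `MouseMapping`, the per-axis gains, and the dead zone are all hypothetical, chosen only to show how a mapping from tracked feature motion to pointer motion could be reconfigured per user or during a session.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass
class MouseMapping:
    """Hypothetical per-user settings (assumed for illustration, not from the paper)."""
    gain_x: float = 4.0     # pointer pixels per pixel of horizontal feature motion
    gain_y: float = 4.0     # pointer pixels per pixel of vertical feature motion
    dead_zone: float = 1.0  # feature motion smaller than this (in pixels) is ignored

    def pointer_delta(self, dx: float, dy: float) -> Tuple[float, float]:
        """Map a tracked feature displacement to a pointer displacement."""
        def scale(d: float, gain: float) -> float:
            # Suppress small, possibly unintended motion; amplify the rest.
            if abs(d) < self.dead_zone:
                return 0.0
            return gain * d

        return scale(dx, self.gain_x), scale(dy, self.gain_y)


# A user with limited horizontal range gets a higher horizontal gain,
# and a wider dead zone to filter out small unintended motion.
mapping = MouseMapping(gain_x=6.0, dead_zone=2.0)
delta = mapping.pointer_delta(3.0, 1.0)  # (18.0, 0.0)
```

Because the settings are plain data, they could be stored per user at a shared facility or adjusted as a user tires, which is the kind of flexibility the paragraph above argues for.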

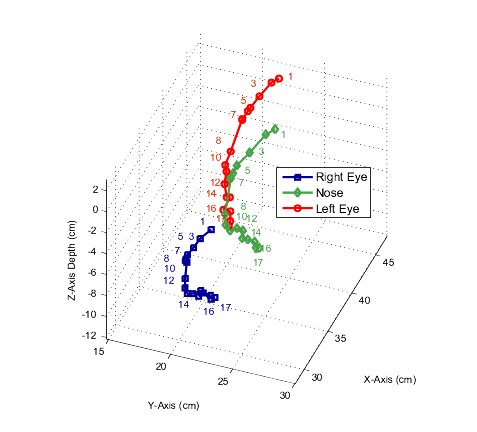

Multi-camera Interfaces


We are working on a multi-camera capture system that can record synchronized images from multiple cameras and automatically analyze the camera arrangement. In a preliminary experiment, 15 human subjects were recorded from three cameras while they were conducting a hands-free interaction experiment. The three-dimensional movement trajectories of various facial features were reconstructed via stereoscopy. The analysis showed substantial feature movement in the third dimension, which is typically neglected by single-camera interfaces based on two-dimensional feature tracking. Paper: pdf.
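As a rough illustration of how stereoscopy recovers the third dimension, the sketch below triangulates one feature from a simplified two-camera setup. It assumes two identical, rectified, parallel cameras with a known focal length and baseline, which is a textbook simplification and not necessarily the arrangement used in our experiments. The function name and parameters are hypothetical.

```python
from typing import Tuple


def triangulate_rectified(
    x_left: float,
    x_right: float,
    y: float,
    focal_px: float,
    baseline_m: float,
) -> Tuple[float, float, float]:
    """Recover (X, Y, Z) in metres, in the left camera's frame.

    Image coordinates are in pixels, measured from each camera's principal
    point. Assumes rectified, parallel cameras (an illustrative simplification).
    """
    disparity = x_left - x_right
    if disparity <= 0:
        raise ValueError("disparity must be positive for a point in front of the cameras")
    z = focal_px * baseline_m / disparity  # depth from similar triangles
    x = x_left * z / focal_px              # back-project to metric X
    y_metric = y * z / focal_px            # back-project to metric Y
    return x, y_metric, z


# A feature seen 40 px right of centre in the left image and 20 px in the
# right image, with an 800 px focal length and a 10 cm baseline.
point = triangulate_rectified(40.0, 20.0, 10.0, focal_px=800.0, baseline_m=0.1)
```

Tracking the same feature over time with this kind of reconstruction gives a trajectory whose Z component is invisible to a single camera doing two-dimensional tracking.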

EyeKeys: An Eye Gaze Interface


Some people are so severely paralyzed that they can control only the muscles that move their eyes. For these people, eye movements become the only way to communicate. We developed a system called EyeKeys that detects whether the user is looking straight ahead or off to the left or right side. EyeKeys runs on consumer-grade computers with video input from inexpensive USB cameras. The face is tracked using a multi-scale pyramid of template correlation. Eye motion is determined by exploiting the symmetry between the left and right eyes. The detected eye direction can then be used to control applications such as spelling programs or games. We developed the game “BlockEscape” to gather quantitative results to evaluate EyeKeys with test subjects. Paper: Abstract. Pdf file. Video.
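The symmetry idea can be sketched as follows. This is an illustrative approximation, not the EyeKeys implementation; the paper describes the actual method. The sketch assumes two equally sized grayscale eye patches (lists of pixel rows) with dark irises on a brighter background, and the function name, labels, and threshold are hypothetical. When the user looks straight ahead, one eye patch resembles the mirror image of the other, so their difference is near zero. When both irises shift to one side, the difference shows a negative and a positive peak, and their order indicates the direction of the shift in the image.

```python
from typing import List


def gaze_direction(
    left_eye: List[List[int]],
    right_eye: List[List[int]],
    threshold: int,
) -> str:
    """Classify iris shift as 'center', 'image-left', or 'image-right'.

    'image-left' means the irises moved toward smaller column indices in the
    camera image; mapping this to the user's left or right depends on whether
    the camera image is mirrored.
    """
    # Mirror the right-eye patch horizontally so it lines up with the left eye.
    mirrored = [row[::-1] for row in right_eye]
    width = len(left_eye[0])
    # Sum the per-pixel difference down each column.
    projection = [
        sum(l_row[c] - m_row[c] for l_row, m_row in zip(left_eye, mirrored))
        for c in range(width)
    ]
    lowest, highest = min(projection), max(projection)
    # Without both a strong negative and a strong positive peak, the eyes
    # are treated as symmetric, i.e. looking straight ahead.
    if highest < threshold or -lowest < threshold:
        return "center"
    if projection.index(lowest) < projection.index(highest):
        return "image-left"
    return "image-right"


# One-row toy patches: 200 is bright background, 50 is the dark iris.
centered = [[200, 200, 50, 200, 200]]
shifted = [[200, 50, 200, 200, 200]]
straight = gaze_direction(centered, centered, threshold=100)
sideways = gaze_direction(shifted, shifted, threshold=100)
```

The three-way output corresponds to the three states described above (straight, left, right), which is enough to drive a scanning speller or a simple game.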

Acknowledgements

This research is based upon work supported by the National Science Foundation (NSF) under Grants 0910908, 0855065, 0713229, and 0093367. Any opinions, findings, and conclusions or recommendations expressed in this material are those of the author(s) and do not necessarily reflect the views of the National Science Foundation.