|

I am a Ph.D. student at Boston University and am very lucky to be co-advised by Professor Kate Saenko and Professor Bryan Plummer. Prior to my Ph.D., I was an AI Resident on the Perception Team at Google Research, where I spent two amazing years working with Dr. Dilip Krishnan, Dr. Ce Liu, Professor Mike Mozer, and many others. Before that, I spent time on the ML team at Apple and worked at a startup called Turi. I got my Bachelor's degree in Computer Science from Dartmouth College.

Inspired by Wei-Chiu Ma (who was in turn inspired by Professors Kyunghyun Cho and Krishna Murthy), I am offering office hours each week to talk about anything you'd like. I recently went through Ph.D. admissions, so I have some perspective on that, and I am also happy to talk about research and graduate student life. Please e-mail me with the subject line "OFFICE HOURS" and give me a brief overview of what you'd like to talk about and what time zone you're in.

Email / CV / Google Scholar / Twitter |

|

|

I'm broadly interested in machine learning and computer vision, and am particularly interested in learning compact but semantically rich representations of our world. To me, this can mean finding better, cheaper, or faster ways of training representations that generalize well to new distributions. It can also mean efficient adaptation to target data. Finally, it can mean finding ways to skip the pre-training step and train efficiently on the target data directly. |

|

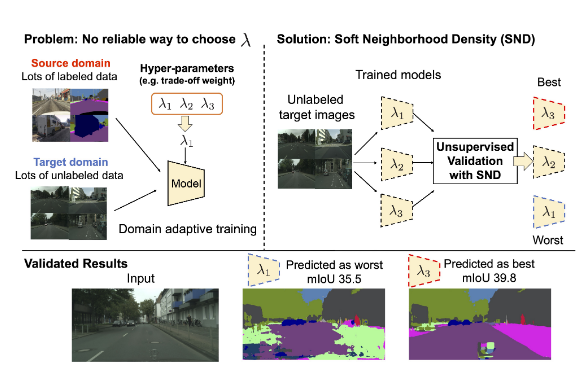

Kuniaki Saito, Donghyun Kim, Piotr Teterwak, Stan Sclaroff, Trevor Darrell, Kate Saenko ICCV, 2021 How to determine what hyperparameters to use for unsupervised domain adaptation, without cheating. |

|

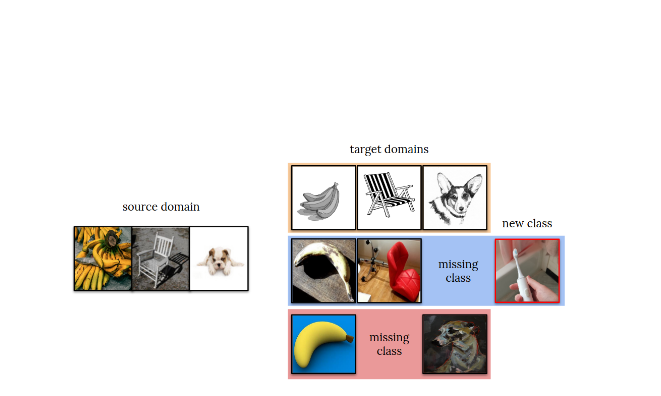

Dina Bashkirova*, Dan Hendrycks*, Donghyun Kim*, Samarth Mishra*, Kate Saenko*, Kuniaki Saito*, Piotr Teterwak*, Ben Usman* (* equal contribution) NeurIPS Competition Track, 2021 This challenge tests how well models can (1) adapt to several distribution shifts and (2) detect unknown unknowns. |

|

Piotr Teterwak, Chiyuan Zhang, Dilip Krishnan, Michael C. Mozer ICML, 2021 Understanding what information is preserved in classifier logits by training a GAN-based inversion model. Surprisingly, we can reconstruct images well, though the quality depends on the architecture and optimization procedure. |

|

Richard Strong Bowen, Huiwen Chang, Charles Herrmann, Piotr Teterwak, Ramin Zabih CVPR, 2021 Improving image extrapolation for objects. |

|

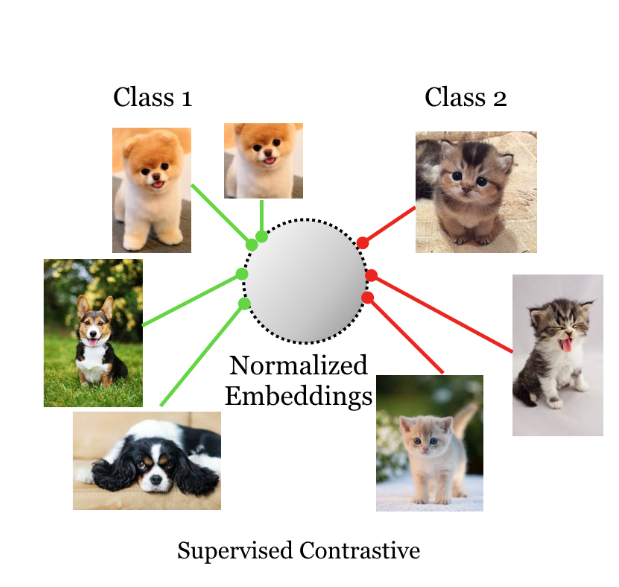

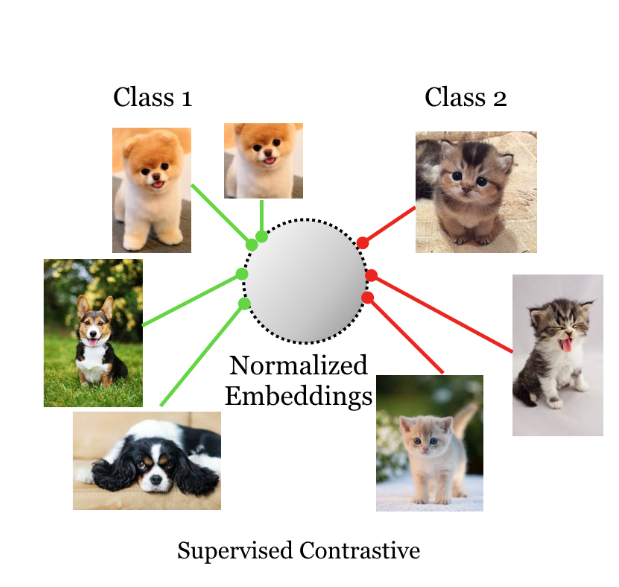

Prannay Khosla*, Piotr Teterwak* (equal contribution), Chen Wang, Aaron Sarna, Yonglong Tian, Phillip Isola, Aaron Maschinot, Ce Liu, Dilip Krishnan NeurIPS, 2020 arXiv A new loss function to train supervised deep networks, based on contrastive learning. Our new loss performs significantly better than cross-entropy across a range of architectures and data augmentations. |

|

Piotr Teterwak, Aaron Sarna, Dilip Krishnan, Aaron Maschinot, David Belanger, Ce Liu, William T. Freeman ICCV, 2019 Project Page / Pretrained Models and Tutorial We adapt GANs for the image extrapolation problem, and use a novel feature conditioning to improve results. |

|

|

|

NSF GRFP Honorable Mention Dean's Fellowship |

|

Template from Jon Barron's really cool website. |